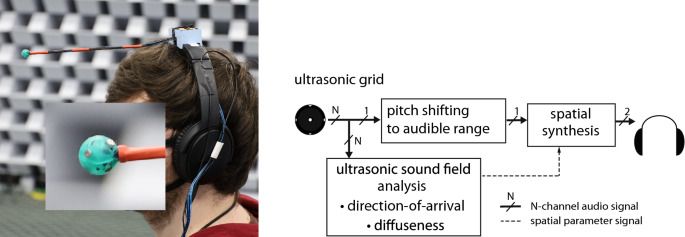

In principle, any pitch-shifting technique may be employed, provided that the frequency-dependent parameters analysed from the ultrasonic sound-field are mapped correctly to the frequency scale of the pitch-shifted signal. Since the spatial parameters are averaged over frequency in the currently employed configuration of the device, this frequency mapping is not required here. Instead, each time frame of the pitch-shifted signal is spatialised according to a frequency-averaged direction. The pitch-shifting technique used for the application targeted in this article should be capable of large pitch-shifting ratios, while also operating within an acceptable latency. Based on these requirements, the phase-vocoder approach [15,16] was selected for the real-time rendering in this study, due to its low processing latency and acceptable signal quality with large pitch-shifting ratios. The application of other pitch-shifting methods is also demonstrated with recordings processed off-line, as described in the Results section.
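The idea of spatialising each time frame according to a single frequency-averaged direction can be illustrated with a minimal sketch. It assumes that a direction-of-arrival unit vector and an energy value are already available for every frequency bin of one frame. The energy weighting, the axis convention, and the function name `frequency_averaged_direction` are assumptions made for this illustration and are not taken from the device's implementation.

```python
import numpy as np


def frequency_averaged_direction(doa_unit_vectors, bin_energies):
    """Collapse per-bin direction estimates into one direction per frame.

    doa_unit_vectors: array of shape (n_bins, 3), one Cartesian unit
        vector per frequency bin (hypothetical input layout).
    bin_energies: array of shape (n_bins,), non-negative weights.

    Returns (azimuth_deg, elevation_deg), or None if the weighted
    vectors cancel out and no dominant direction exists.
    """
    vectors = np.asarray(doa_unit_vectors, dtype=float)
    weights = np.asarray(bin_energies, dtype=float)

    # Energy-weighted vector sum (assumed weighting scheme). Averaging
    # vectors rather than angles avoids wrap-around errors near +/-180 deg.
    mean_vector = (weights[:, None] * vectors).sum(axis=0)
    norm = np.linalg.norm(mean_vector)
    if norm < 1e-12:
        return None

    x, y, z = mean_vector / norm
    azimuth_deg = float(np.degrees(np.arctan2(y, x)))
    elevation_deg = float(np.degrees(np.arcsin(np.clip(z, -1.0, 1.0))))
    return azimuth_deg, elevation_deg


def unit_vector(azimuth_deg, elevation_deg=0.0):
    """Helper for building test inputs (assumed axis convention:
    x front, y left, z up)."""
    az, el = np.radians(azimuth_deg), np.radians(elevation_deg)
    return np.array([np.cos(el) * np.cos(az),
                     np.cos(el) * np.sin(az),
                     np.sin(el)])
```

With two equally energetic bins pointing to 30° and 60° azimuth in the horizontal plane, this sketch returns a single direction midway between them, which would then be used to spatialise the whole pitch-shifted frame.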

In summary, the proposed processing approach permits frequency-modified signals to be synthesised with plausible binaural and monaural cues, which may subsequently be delivered to the listener to enable the localisation of ultrasonic sound sources. Furthermore, since the super-hearing device turns with the head of the listener, and the processing latency of the device was constrained to 44 ms, the dynamic cues should also be preserved. The effect of processing latency has previously been studied in the context of head-tracked binaural reproduction systems, where it has been found that a system latency above 50–100 ms can impair spatial perception [17,18]. A trade-off must therefore be made between attaining high spatial image and timbral quality (both of which improve with longer temporal windows and a higher degree of overlap) and having low processing latency (which relies on shorter windows and reduced overlap). The current processing latency has been chosen so that both the spatial image and the audio quality after pitch-shifting, as judged through informal listening, remain reasonably high.
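The trade-off between window length, overlap, and latency can be made concrete with a rough calculation. The sketch below uses a common first-order estimate in which the algorithmic latency of block-based short-time processing is approximately one analysis window. The sample rate of 192 kHz and the window and hop sizes are illustrative assumptions, not values stated in this section, and a real phase vocoder may add further buffering delay.

```python
def window_latency_ms(window_length, sample_rate_hz):
    """First-order latency estimate: one full analysis window must be
    buffered before the frame can be processed (assumed model)."""
    return 1000.0 * window_length / sample_rate_hz


def overlap_fraction(window_length, hop_size):
    """Fraction of each window shared with the next one."""
    return 1.0 - hop_size / window_length


def within_budget(latency_ms, budget_ms=50.0):
    """Compare against the lower bound of the 50-100 ms range
    reported for head-tracked binaural reproduction."""
    return latency_ms <= budget_ms


if __name__ == "__main__":
    sample_rate_hz = 192_000  # assumed, chosen to cover ultrasonic content
    for window_length in (2048, 4096, 8192, 16384):  # hypothetical sizes
        latency = window_latency_ms(window_length, sample_rate_hz)
        print(f"window={window_length:6d} samples  "
              f"latency={latency:6.2f} ms  "
              f"overlap={overlap_fraction(window_length, window_length // 4):.2f}  "
              f"ok={within_budget(latency)}")
```

Under these assumptions, doubling the window length doubles the estimated latency, so a longer window that would improve timbral quality can quickly exceed the latency range at which spatial perception is reported to degrade.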

One additional advantage of the proposed approach is that only a single signal is pitch-shifted, which is inherently more computationally efficient than pitch-shifting multiple signals, as would be required by the three alternative suggestions described in the Introduction section. Furthermore, imprinting the spatial information onto the signal only after pitch-shifting ensures that the directional cues reproduced for the listener are not distorted by the pitch-shifting operation. The requirements for the size of the microphone array are also less stringent than those for an Ambisonics-based system. In this work, an array with a diameter of 11 mm was employed, which has a spatial aliasing frequency of approximately 17 kHz. This prohibits the use of Ambisonics at ultrasonic frequencies with the present array. By contrast, the employed spatial parameter analysis can be conducted above the spatial aliasing frequency, provided that the geometry of the array is known and that the sensors are arranged uniformly on the sphere.