Not everything about glass is clear. How its atoms are arranged and behave, in particular, is startlingly opaque.

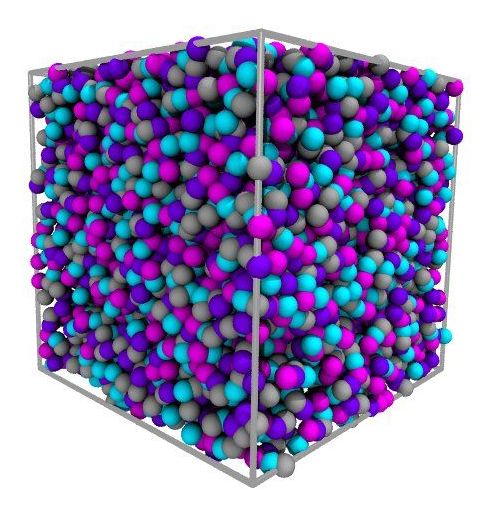

The problem is that glass is an amorphous solid, a class of materials that lies in the mysterious realm between solid and liquid. Glassy materials also include polymers, such as commonly used plastics. While glass might appear to be stable and static, its atoms are constantly shuffling in a frustratingly futile search for equilibrium. This shifty behavior has made the physics of glass nearly impossible for researchers to pin down.

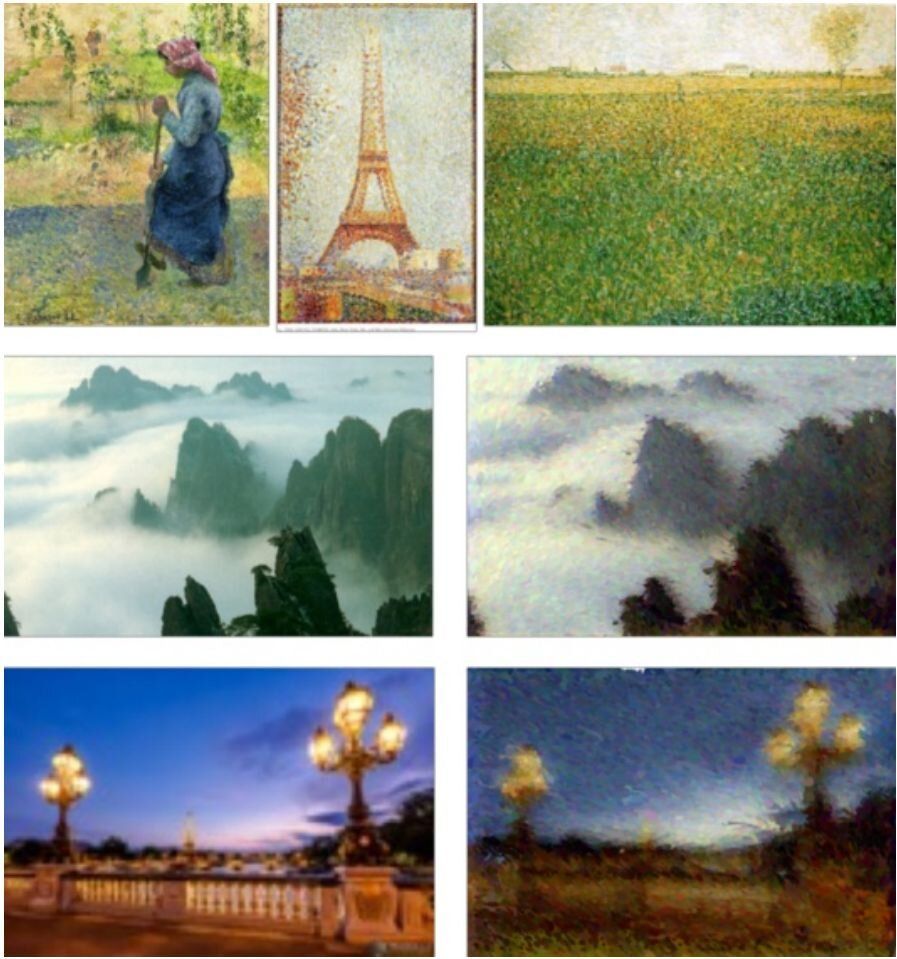

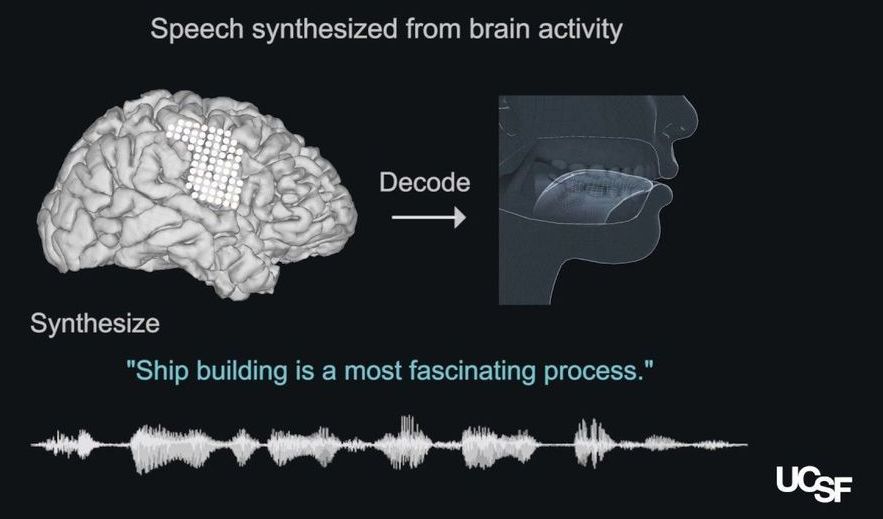

Now a multi-institutional team including researchers from Northwestern University, North Dakota State University and the National Institute of Standards and Technology (NIST) has designed an algorithm to give polymeric glasses a little more clarity. The algorithm lets researchers create coarse-grained models for designing materials with dynamic properties and predicting their continually changing behaviors. Called the “energy renormalization algorithm,” it is the first to accurately predict glass’ mechanical behavior at different temperatures and could speed the discovery of new materials designed with optimal properties.