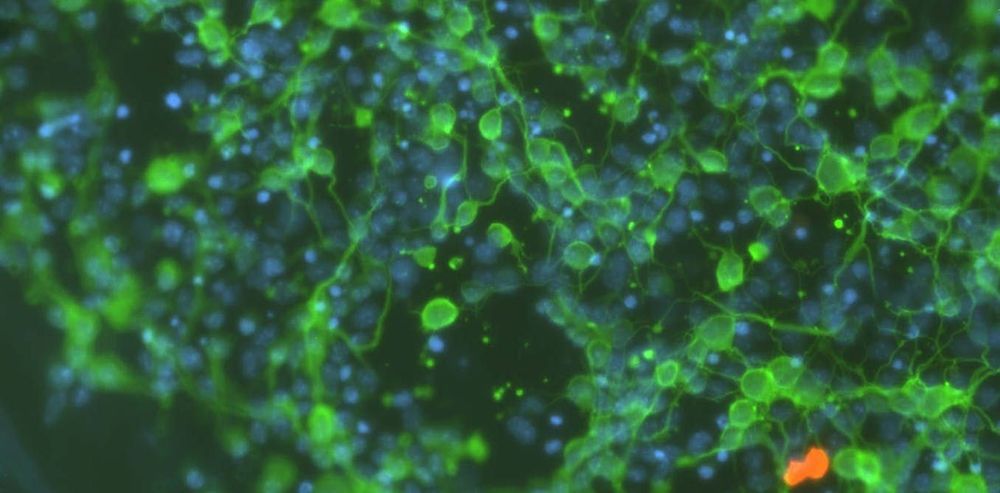

A team at Flinders University in South Australia has developed a new vaccine believed to be the first human drug in the world to be completely designed by artificial intelligence (AI).

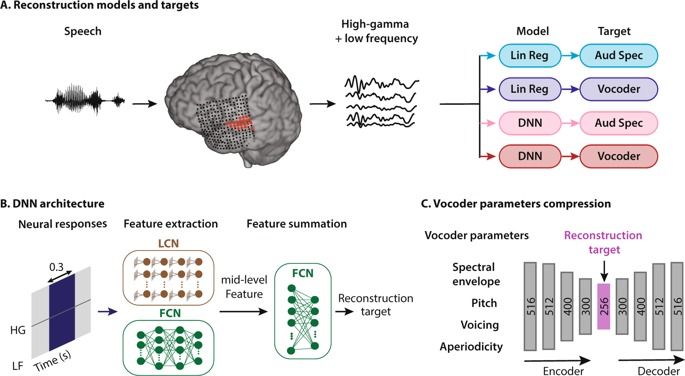

While drugs have been designed using computers before, this vaccine went one step further: it was created independently by an AI program called SAM (Search Algorithm for Ligands).

Flinders University Professor Nikolai Petrovsky, who led the development, told Business Insider Australia that the program's name is derived from what it was tasked to do: search the universe of all conceivable compounds to find a good human drug (also called a ligand).