A wave function describes the quantum state of a system such as an atom, including the positions and motion of its nucleus and electrons. For decades, researchers have struggled to determine the exact wave function of an ordinary molecular system, in which the nuclear positions are treated as fixed while the electrons move around them. Pinning down the wave function has proven difficult even with the Schrödinger equation in hand, because the equation cannot be solved exactly once several electrons interact with one another.

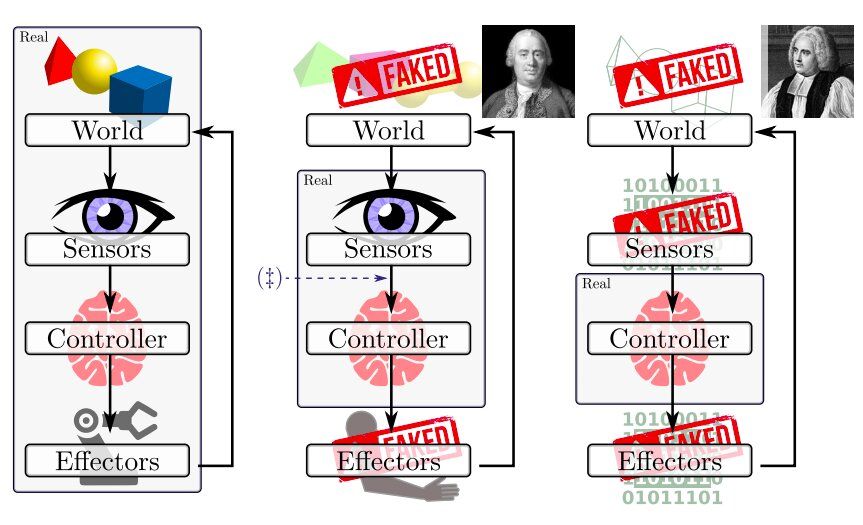

Previous work in this field applied quantum Monte Carlo (QMC) methods with a Slater-Jastrow ansatz, which takes a linear combination of Slater determinants and multiplies it by a Jastrow factor to capture short-range correlations between electrons.

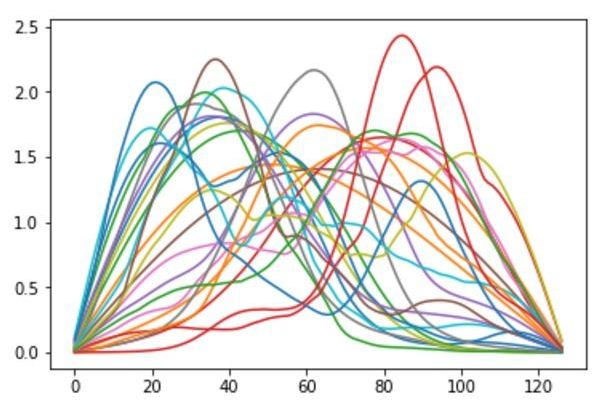
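To make the Slater-Jastrow form concrete, here is a minimal NumPy sketch. It is an illustration under loud assumptions, not the setup used in the research: the orbitals are toy exponentials centred on a single nucleus, spin is ignored, and the Jastrow factor is a simple Padé-style pair term. The function names and the parameters `zetas`, `a` and `b` are hypothetical.

```python
import numpy as np

def slater_determinant(positions, zetas):
    """Determinant of the matrix M[i, k] = phi_k(r_i).

    Assumption: toy orbitals phi_k(r) = exp(-zeta_k * |r|), one per electron.
    """
    dist = np.linalg.norm(positions, axis=1)   # |r_i| for each electron
    matrix = np.exp(-np.outer(dist, zetas))    # shape (n_electrons, n_orbitals)
    return np.linalg.det(matrix)

def jastrow(positions, a=0.5, b=1.0):
    """Symmetric pair factor exp(sum_{i<j} a * r_ij / (1 + b * r_ij)).

    Assumption: a simple Pade-style form; it shrinks the amplitude
    when two electrons come close to each other.
    """
    i, j = np.triu_indices(len(positions), k=1)
    r_ij = np.linalg.norm(positions[i] - positions[j], axis=1)
    return np.exp(np.sum(a * r_ij / (1.0 + b * r_ij)))

def slater_jastrow(positions, weights, zeta_sets):
    """Jastrow factor times a weighted sum of Slater determinants."""
    dets = sum(c * slater_determinant(positions, z)
               for c, z in zip(weights, zeta_sets))
    return jastrow(positions) * dets

# Three electrons at random positions, two determinants in the expansion.
rng = np.random.default_rng(0)
electrons = rng.normal(size=(3, 3))
weights = [0.8, 0.2]
zeta_sets = [np.array([1.0, 1.5, 2.0]), np.array([0.8, 1.2, 2.5])]

psi = slater_jastrow(electrons, weights, zeta_sets)
# Exchanging electrons 0 and 1 swaps two rows of every determinant.
psi_swapped = slater_jastrow(electrons[[1, 0, 2]], weights, zeta_sets)
print(psi, psi_swapped)
```

Swapping two electrons flips the sign of the result. The determinants supply that antisymmetry, which fermions require, while the Jastrow factor is symmetric and only rescales the amplitude.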

Now, a group of DeepMind researchers has taken QMC a step further with the Fermionic Neural Network, or FermiNet, a neural network that offers more flexibility and higher accuracy than the Slater-Jastrow approach. FermiNet takes the electron information of a molecule or other chemical system as input and outputs an estimated wave function, which can then be used to determine the energy states of that system.
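As a rough illustration of how an estimated wave function yields an energy, the sketch below runs a tiny variational Monte Carlo loop for a hydrogen atom in atomic units. It is not FermiNet: a hand-written trial function exp(-alpha * r) stands in for the network, and `alpha`, `step` and the function names are assumptions made for this example.

```python
import numpy as np

def log_psi(r_vec, alpha):
    """Log of the trial wave function psi(r) = exp(-alpha * |r|)."""
    return -alpha * np.linalg.norm(r_vec)

def local_energy(r_vec, alpha):
    """(H psi) / psi for H = -1/2 * laplacian - 1/r, in Hartree."""
    r = np.linalg.norm(r_vec)
    return -0.5 * alpha**2 + (alpha - 1.0) / r

def vmc_energy(alpha, n_steps=20000, n_burn=2000, step=1.0, seed=0):
    """Average the local energy over Metropolis samples of |psi|^2."""
    rng = np.random.default_rng(seed)
    r_vec = np.array([1.0, 0.0, 0.0])
    energies = []
    for t in range(n_steps + n_burn):
        proposal = r_vec + step * rng.normal(size=3)
        # Acceptance ratio is |psi(proposal)|^2 / |psi(current)|^2.
        log_ratio = 2.0 * (log_psi(proposal, alpha) - log_psi(r_vec, alpha))
        if np.log(rng.random()) < log_ratio:
            r_vec = proposal
        if t >= n_burn:  # discard the burn-in samples
            energies.append(local_energy(r_vec, alpha))
    return float(np.mean(energies))

energy_exact = vmc_energy(alpha=1.0)
energy_trial = vmc_energy(alpha=0.8)
print(energy_exact, energy_trial)
```

With alpha = 1 the trial function is the exact hydrogen ground state, so every sample has a local energy of -0.5 Hartree. Any other alpha gives a higher average, and that variational principle is what lets an optimizer tune a wave function's parameters by pushing the energy down.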