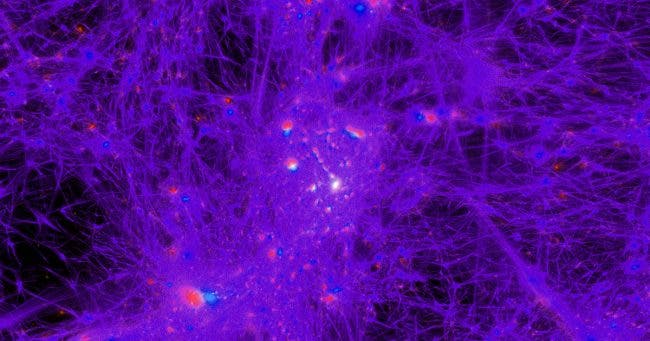

A black hole, at least in our current understanding, is characterized by having “no hair”: it is so simple that it can be completely described by just three parameters, its mass, its spin, and its electric charge. Even though it may have formed out of a complex mix of matter and energy, all other details are lost when the black hole forms. Its powerful gravitational field creates a surrounding surface, a “horizon,” and anything that crosses that horizon (even light) cannot escape. Hence the black hole appears black, and any details about the infalling material are also lost and digested into the three knowable parameters.

Astronomers are able to measure the masses of black holes in a relatively straightforward way: by watching how matter in their vicinity (including other black holes) moves under the influence of the gravitational field. The charges of black holes are thought to be insignificant, since positive and negative infalling charges are typically comparable in number. The spins of black holes are more difficult to determine, and the two main methods both rely on interpreting the X-ray emission from the hot inner edge of the accretion disk around the black hole. One method models the shape of the X-ray continuum, and it relies on good estimates of the mass, distance, and viewing angle. The other models the X-ray spectrum, including observed atomic emission lines that are often seen in reflection from the hot gas; it does not depend on knowing as many other parameters. The two methods have in general yielded comparable results.

CfA astronomer James Steiner and his colleagues reanalyzed seven sets of spectra, obtained by the Rossi X-ray Timing Explorer, of an outburst from a stellar-mass black hole in our galaxy called 4U1543-47. Previous attempts to estimate the spin of the object using the continuum method produced results that disagreed from paper to paper by considerably more than the formal uncertainties (the papers assumed a mass of 9.4 solar masses and a distance of 24.7 thousand light-years). Using careful refitting of the spectra and updated modeling algorithms, the scientists report a spin that lies between the previous values, is moderate in magnitude, and is established at a 90% confidence level. Since only a few dozen well-confirmed black hole spins have been measured to date, the new result is an important addition.