A quintillion calculations a second. That’s a one with 18 zeros after it. It’s the speed at which an exascale supercomputer will process information. The Department of Energy (DOE) is preparing for the first exascale computer to be deployed in 2021. Two more will follow soon after. Yet quantum computers may be able to complete more complex calculations even faster than these up-and-coming exascale computers. Even so, the two technologies complement each other far more than they compete.

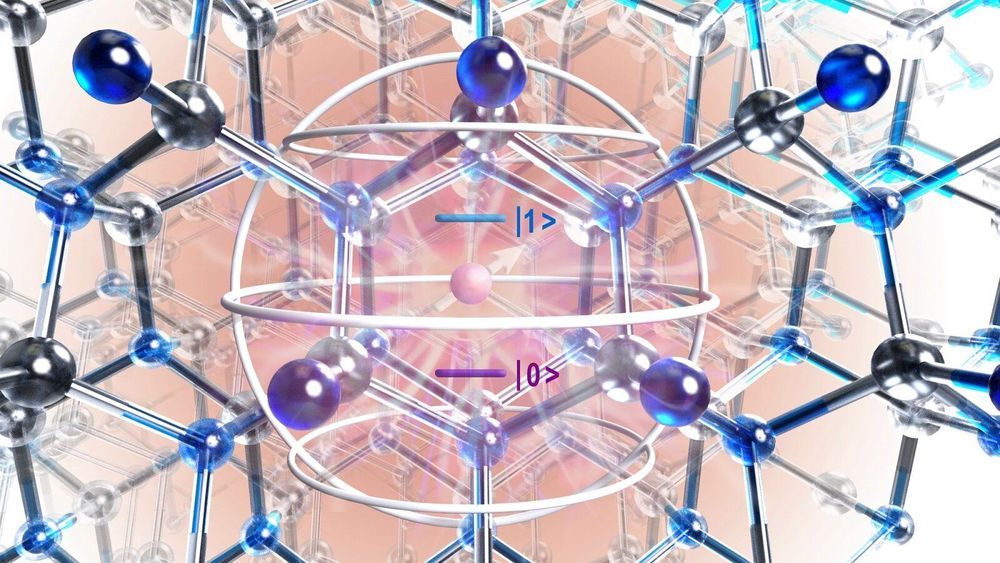

It’s going to be a while before quantum computers are ready to tackle major scientific research questions. Quantum researchers and scientists in other areas are collaborating to design quantum computers that will be as effective as possible once they’re ready, but that point is still a long way off. Scientists are still figuring out how to build qubits, the very foundation of the technology. They’re establishing the most fundamental quantum algorithms needed to do simple calculations. Both the hardware and the algorithms need to be far enough along before coders can develop the operating systems and software that scientific research requires. Currently, we’re at the same point in quantum computing that scientists in the 1950s were with computers that ran on vacuum tubes. Most of us regularly carry computers in our pockets now, but it took decades to get to this level of accessibility.

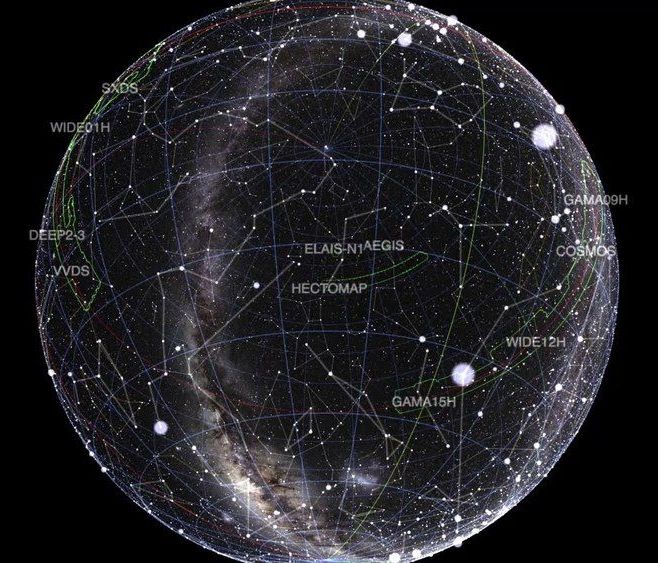

In contrast, exascale computers will be ready next year. When they launch, they’ll already be five times faster than our fastest computer, Summit, at Oak Ridge National Laboratory’s Leadership Computing Facility, a DOE Office of Science user facility. Right away, they’ll be able to tackle major challenges in modeling Earth systems, analyzing genes, tracking barriers to fusion, and more. These powerful machines will allow scientists to include more variables in their equations and improve their models’ accuracy. As long as we can find new ways to improve conventional computers, we’ll do it.