Unless you’re a physicist or an engineer, there really isn’t much reason for you to know about partial differential equations. I know. After years of poring over them in undergrad while studying mechanical engineering, I haven’t used them once in the real world.

But partial differential equations, or PDEs, are also kind of magical. They’re a category of math equations that are really good at describing change over space and time, and thus very handy for describing the physical phenomena in our universe. They can be used to model everything from planetary orbits to plate tectonics to the air turbulence that disturbs a flight, which in turn allows us to do practical things like predict seismic activity and design safe planes.

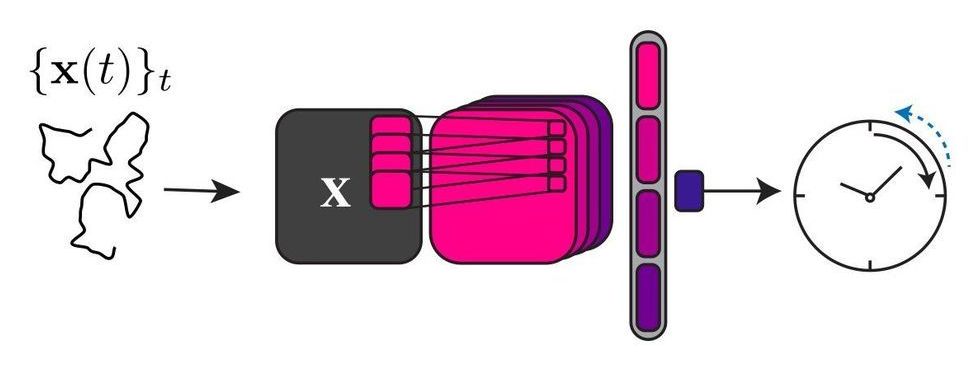

The catch is that PDEs are notoriously hard to solve. And here, the meaning of “solve” is perhaps best illustrated by an example. Say you are trying to simulate air turbulence to test a new plane design. There is a well-known set of PDEs, the Navier-Stokes equations, that describes the motion of fluids such as air and water. “Solving” Navier-Stokes allows you to take a snapshot of the air’s motion (a.k.a. wind conditions) at any point in time and model how it will continue to move, or how it was moving before.
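
To make that idea of “solving” a little more concrete, here is a minimal sketch in Python. It does not touch Navier-Stokes, which is far too involved for a few lines. As a stand-in it uses the much simpler one-dimensional diffusion (heat) equation, and it assumes a basic explicit finite-difference update. The function names, grid size, and parameter values are all made up for illustration. The pattern is the one described above: start from a snapshot, then step it forward in time.

```python
def step_diffusion(u, alpha, dx, dt):
    """Advance a 1D diffusion snapshot `u` by one time step.

    Illustrative stand-in for a real PDE solver: explicit finite
    differences for du/dt = alpha * d2u/dx2, with both end values held fixed.
    """
    r = alpha * dt / dx**2
    if r > 0.5:
        # The explicit scheme is only stable for small enough time steps.
        raise ValueError("time step too large for a stable explicit update")
    new_u = list(u)  # copy, so the end points keep their boundary values
    for i in range(1, len(u) - 1):
        new_u[i] = u[i] + r * (u[i + 1] - 2 * u[i] + u[i - 1])
    return new_u


def simulate(u0, alpha, dx, dt, steps):
    """Repeatedly step the snapshot `u0` forward `steps` times."""
    u = list(u0)
    for _ in range(steps):
        u = step_diffusion(u, alpha, dx, dt)
    return u


# The "snapshot": a single hot spot in the middle of a cold rod.
snapshot = [0.0] * 21
snapshot[10] = 1.0

# Model how the snapshot evolves: the hot spot spreads out and flattens.
later = simulate(snapshot, alpha=1.0, dx=1.0, dt=0.25, steps=50)
```

Even in this toy case, the answer comes from grinding through many small time steps at every grid point. A realistic three-dimensional fluid simulation does the same kind of work on a vastly larger scale, which is a big part of why these equations are so expensive to solve.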