Strong AI will make excellent scientists.

Ranking the most influential scientific equations is no easy task, but these five certainly belong in the top tier.

A research group working at Uppsala University has succeeded in studying ‘translation factors’ – important components of a cell’s protein synthesis machinery – that are several billion years old. By studying these ancient ‘resurrected’ factors, the researchers were able to establish that they had much broader specificities than their present-day, more specialized counterparts.

To survive and grow, every cell relies on an in-house protein synthesis factory. This factory consists of ribosomes and associated translation factors that work together to keep the complex process of protein production running smoothly. While almost all components of the modern translational machinery are well known, until now scientists did not know how this machinery evolved.

The new study, published in the journal Molecular Biology and Evolution, took the research group led by Professor Suparna Sanyal of the Department of Cell and Molecular Biology on an epic journey back into the past. A previously published study used a special algorithm to predict DNA sequences of ancestors of an important translation factor called elongation factor thermo-unstable, or EF-Tu, going back billions of years. The Uppsala research group used these DNA sequences to resurrect the ancient bacterial EF-Tu proteins and then to study their properties.
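The article does not say which algorithm produced the ancestral EF-Tu sequences. To illustrate the general idea only, the sketch below uses Fitch parsimony, one of the simplest ancestral-reconstruction methods. It is not necessarily the method the earlier study used. The tree shape and the four short sequences are hypothetical. They were chosen to show how a root (ancestral) sequence can be inferred from modern ones.

```python
def fitch(tree, leaf_states):
    """Bottom-up Fitch pass for one alignment site.

    tree: nested 2-tuples of leaf names, e.g. (("A", "B"), ("C", "D")) (hypothetical).
    leaf_states: maps each leaf name to its base at this site.
    Returns (set of most-parsimonious states at this node, number of changes below it).
    """
    if isinstance(tree, str):  # a leaf: its observed base is the only candidate
        return {leaf_states[tree]}, 0
    left, right = tree
    s1, c1 = fitch(left, leaf_states)
    s2, c2 = fitch(right, leaf_states)
    common = s1 & s2
    if common:  # children agree on some state: no extra change needed
        return common, c1 + c2
    return s1 | s2, c1 + c2 + 1  # disagreement costs one substitution


def reconstruct_root(tree, sequences):
    """Infer one most-parsimonious ancestral (root) sequence, site by site."""
    length = len(next(iter(sequences.values())))
    root, total_changes = [], 0
    for i in range(length):
        states, changes = fitch(tree, {name: seq[i] for name, seq in sequences.items()})
        root.append(min(states))  # arbitrary tie-break among equally parsimonious states
        total_changes += changes
    return "".join(root), total_changes


tree = (("A", "B"), ("C", "D"))
sequences = {"A": "ACGT", "B": "ACGA", "C": "ATGT", "D": "ACGT"}
print(reconstruct_root(tree, sequences))  # ('ACGT', 2)
```

Model-based approaches such as maximum likelihood also weigh branch lengths and substitution rates, which parsimony ignores.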

A robotic artist powered by AI algorithms has created realistic self-portraits that question the limits of artificial intelligence and what it means to be human.

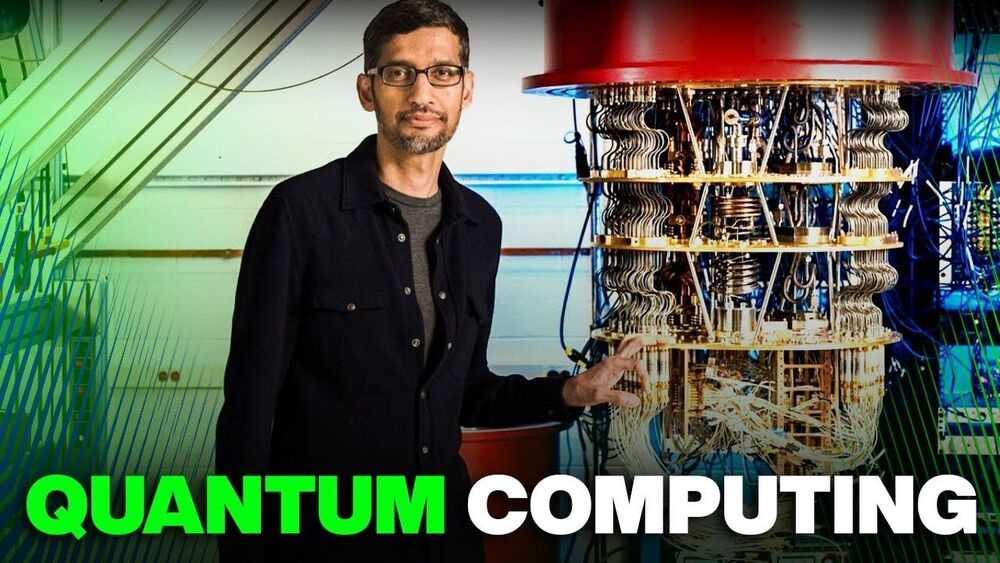


As we advance as a species, many things that seemed impossible a century ago are now a reality. It's called evolving. For example, the size of the Earth was once an open question. Then Eratosthenes came onto the scene and used the shadows cast by the Sun in two Egyptian cities to estimate its circumference with surprising accuracy.

At the time, it was groundbreaking. But today, quantum mechanics rules the roost. This branch of physics describes nature at the scale of atoms and electrons, where many of the equations of classical mechanics break down. With that said, let's take a look at three of the most striking quantum breakthroughs reshaping science today!

We kick things off with a team of Chinese scientists who claim to have built a quantum computer that can perform certain computations almost 100 trillion times faster than the world's most advanced supercomputer.

The breakthrough bears on quantum computational advantage, also widely known as quantum supremacy. This is the point at which a quantum computer performs a task that no classical computer can complete in a practical amount of time. Demonstrating it has become a hotly contested race between Chinese researchers and some of the largest US tech corporations, such as Amazon, Google, and Microsoft.

For example, Google announced in 2019 that its Sycamore quantum processor had performed, in about 200 seconds, a calculation that the company estimated would take the world's fastest supercomputer roughly 10,000 years.
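One way to see why such tasks overwhelm classical machines is to count the memory a brute-force simulation would need. Storing the full state of n qubits takes 2^n complex amplitudes. The sketch below assumes 16 bytes per amplitude (double-precision complex numbers). It also assumes a naive state-vector simulation, which is only one of several classical strategies. For the 53 working qubits of Google's 2019 chip, this comes to about 144 petabytes.

```python
def state_vector_bytes(n_qubits, bytes_per_amplitude=16):
    """Memory to hold all 2**n_qubits complex amplitudes of an n-qubit state.

    bytes_per_amplitude=16 assumes complex128 (two 64-bit floats) per amplitude.
    """
    return (2 ** n_qubits) * bytes_per_amplitude


for n in (30, 40, 53):
    petabytes = state_vector_bytes(n) / 1e15  # decimal petabytes
    print(f"{n} qubits: {petabytes:.6g} PB")
```

Each added qubit doubles the requirement, which is why the gap grows so quickly.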

In 2018, Cornell researchers built a high-powered detector that, in combination with an algorithm-driven process called ptychography, set a world record by tripling the resolution of a state-of-the-art electron microscope.

As successful as it was, that approach had a weakness. It only worked with ultrathin samples that were a few atoms thick. Anything thicker would cause the electrons to scatter in ways that could not be disentangled.
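To show what data ptychography works from, the sketch below implements the textbook single-slice forward model. A probe beam illuminates overlapping patches of the specimen, and the detector records only the intensity of each far-field diffraction pattern. The overlap between patches is what lets a reconstruction algorithm recover the lost phase. The product `probe * obj` treats the specimen as one thin layer. That is exactly the thin-sample assumption described above, and thicker specimens need models that split the sample into several slices. The array sizes, step size and random phase object are illustrative assumptions, not the Cornell setup.

```python
import numpy as np


def simulate_ptychography(obj, probe, positions):
    """Single-slice (projection) ptychography forward model.

    obj: complex 2D transmission function of a thin specimen.
    probe: complex 2D illumination, smaller than obj.
    positions: (row, col) top-left corners where the probe is placed.
    Returns one diffraction intensity pattern per probe position.
    """
    h, w = probe.shape
    patterns = []
    for r, c in positions:
        exit_wave = probe * obj[r:r + h, c:c + w]  # thin-object approximation: a simple product
        far_field = np.fft.fft2(exit_wave, norm="ortho")  # detector sees the Fourier transform
        patterns.append(np.abs(far_field) ** 2)  # only intensity is recorded; phase is lost
    return np.stack(patterns)


rng = np.random.default_rng(0)
obj = np.exp(1j * rng.uniform(0.0, 1.0, size=(32, 32)))  # hypothetical pure-phase specimen
probe = np.ones((8, 8), dtype=complex)  # hypothetical flat 8x8 probe
positions = [(r, c) for r in range(0, 25, 4) for c in range(0, 25, 4)]  # 50% overlap
patterns = simulate_ptychography(obj, probe, positions)
print(patterns.shape)  # (49, 8, 8)
```

With `norm="ortho"` the transform conserves total intensity, a handy sanity check on simulated data.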

Now a team, again led by David Muller, the Samuel B. Eckert Professor of Engineering, has bested its own record by a factor of two, pairing its electron microscope pixel array detector (EMPAD) with even more sophisticated 3D reconstruction algorithms.

An international research team analyzed a database of more than 1000 supernova explosions and found that models of the Universe's expansion best match the data when a new time-dependent variation is introduced. If future, higher-quality data from the Subaru Telescope and other observatories confirm the result, it could point to as-yet-unknown physics operating on cosmic scales.

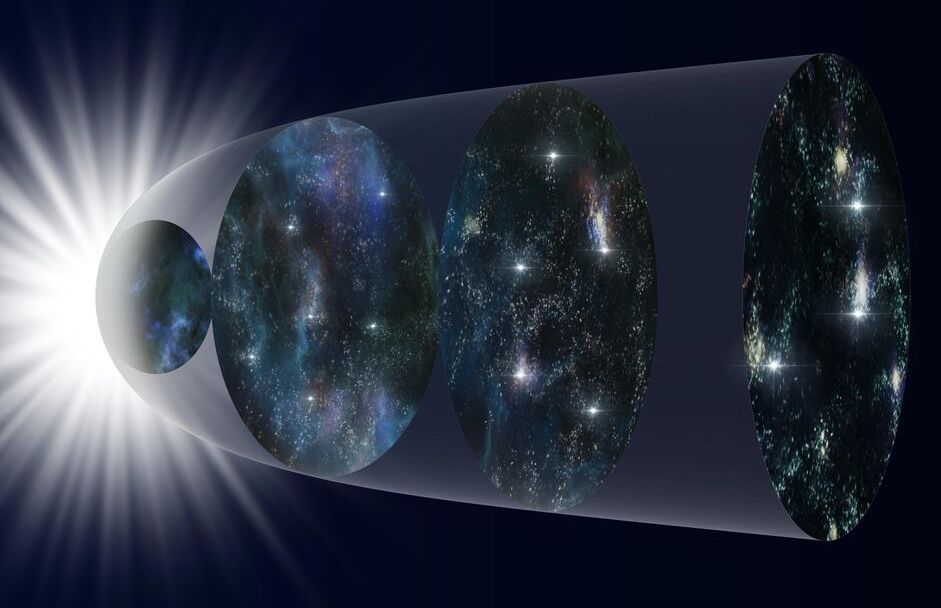

Edwin Hubble’s observations over 90 years ago showing the expansion of the Universe remain a cornerstone of modern astrophysics. But when you get into the details of calculating how fast the Universe was expanding at different times in its history, scientists have difficulty getting theoretical models to match observations.

To address this problem, a team led by Maria Dainotti (Assistant Professor at the National Astronomical Observatory of Japan and the Graduate University for Advanced Studies, SOKENDAI in Japan and an affiliated scientist at the Space Science Institute in the U.S.A.) analyzed a catalog of 1048 supernovae that exploded at different times in the history of the Universe. The team found that the theoretical models can be made to match the observations if one of the constants in the equations, the Hubble constant, is allowed to vary with time.
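To make "allowing the Hubble constant to vary" concrete, the sketch below computes supernova distances in a flat ΛCDM universe. It lets H0 drift with redshift z as H0(z) = H0 / (1 + z)^α. Several choices here are illustrative assumptions, not the team's fitted model: the power-law form, the parameter values (H0 = 70 km/s/Mpc, Ωm = 0.3) and plugging the drifting H0 into the distance prefactor. Setting α = 0 recovers an ordinary constant.

```python
import math

C_KM_S = 299_792.458  # speed of light in km/s


def hubble_constant(z, h0=70.0, alpha=0.0):
    """H0 at redshift z under an assumed power-law drift; alpha=0 means truly constant."""
    return h0 / (1.0 + z) ** alpha


def luminosity_distance(z, h0=70.0, alpha=0.0, omega_m=0.3, steps=1000):
    """Luminosity distance in Mpc for a flat Lambda-CDM universe (trapezoidal integration)."""
    omega_l = 1.0 - omega_m  # flatness: matter + dark energy = 1

    def inv_e(zp):
        return 1.0 / math.sqrt(omega_m * (1.0 + zp) ** 3 + omega_l)

    dz = z / steps
    integral = sum(0.5 * (inv_e(i * dz) + inv_e((i + 1) * dz)) * dz for i in range(steps))
    return (1.0 + z) * C_KM_S / hubble_constant(z, h0, alpha) * integral


def distance_modulus(z, **kwargs):
    """mu = 5 log10(d_L / 10 pc), with d_L in Mpc (1 Mpc = 10**5 x 10 pc)."""
    return 5.0 * math.log10(luminosity_distance(z, **kwargs)) + 25.0


for alpha in (0.0, 0.01):
    print(alpha, round(distance_modulus(1.0, alpha=alpha), 3))
```

Fitting α to observed distance moduli, and checking whether it differs from zero, is the kind of test the study describes.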

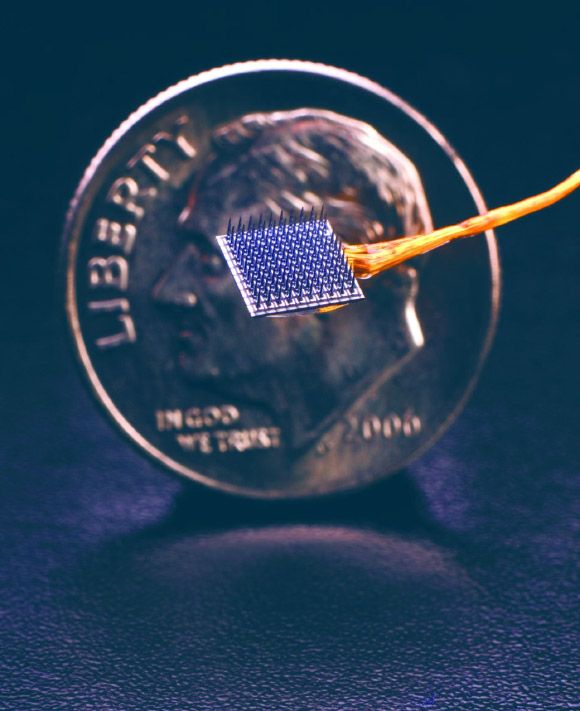

Researchers with the BrainGate Collaboration have deciphered the brain activity associated with handwriting. They worked with a participant with paralysis (65 years old at the time of the study) who has sensors implanted in his brain. They used an algorithm, a recurrent neural network, to identify letters as he attempted to write them, and the system then displayed the text on a screen. By attempting handwriting, the participant typed 90 characters per minute, more than double the previous record for typing with a brain-computer interface.
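The BrainGate team decoded letters with a recurrent neural network, which is beyond a short example. The sketch below swaps in a much simpler technique, a nearest-centroid classifier, to illustrate the core step. That step maps a vector of neural features recorded during an attempted letter to the most likely character. The 50-channel "neural signatures" are synthetic, and every name here is hypothetical.

```python
import numpy as np


def fit_centroids(features, labels):
    """Average the feature vectors recorded for each attempted letter."""
    labels = np.asarray(labels)
    letters = sorted(set(labels.tolist()))
    centroids = np.stack([features[labels == letter].mean(axis=0) for letter in letters])
    return letters, centroids


def decode(features, letters, centroids):
    """Assign each trial to the letter whose centroid is nearest (Euclidean distance)."""
    dists = np.linalg.norm(features[:, None, :] - centroids[None, :, :], axis=2)
    return [letters[i] for i in dists.argmin(axis=1)]


rng = np.random.default_rng(0)
templates = {letter: rng.normal(size=50) for letter in "abc"}  # hypothetical per-letter activity


def trials(text, noise=0.1):
    """Synthetic noisy recordings, one feature vector per attempted character."""
    return np.stack([templates[ch] + noise * rng.normal(size=50) for ch in text])


train_text = "abc" * 10
letters, centroids = fit_centroids(trials(train_text), list(train_text))
test_text = "cabbac"
decoded = "".join(decode(trials(test_text), letters, centroids))
print(decoded)
```

A recurrent network goes further by using the timing of neural activity within each attempted stroke, rather than one summary vector per letter.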

So far, a major focus of brain-computer interface research has been on restoring gross motor skills, such as reaching and grasping or point-and-click typing with a computer cursor.

Machine learning algorithms have gained fame for being able to ferret out relevant information from datasets with many features, such as tables with dozens of columns and images with millions of pixels. Thanks to advances in cloud computing, you can often run very large machine learning models without noticing how much computational power works behind the scenes.

But every feature you add to a problem increases its complexity, making it harder to solve with machine learning algorithms. Data scientists use dimensionality reduction, a set of techniques that either remove redundant and irrelevant features from their data or compress many features into a smaller set of informative ones.

Dimensionality reduction slashes the costs of machine learning and sometimes makes it possible to solve complicated problems with simpler models.
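Principal component analysis (PCA) is one widely used dimensionality-reduction technique, though the text does not single out any particular one. The minimal NumPy sketch below centers the data and takes a singular value decomposition. It then keeps only the top k directions of variation. The synthetic dataset is an assumption: 10 features driven by 2 hidden factors, so two components should capture almost all of the variance.

```python
import numpy as np


def pca(X, k):
    """Project the rows of X onto its top-k principal components.

    Returns (projected data of shape (n_samples, k), fraction of variance per kept component).
    """
    X_centered = X - X.mean(axis=0)  # PCA assumes zero-mean features
    _, s, vt = np.linalg.svd(X_centered, full_matrices=False)  # rows of vt: principal directions
    explained = s ** 2 / np.sum(s ** 2)  # variance captured by each direction
    return X_centered @ vt[:k].T, explained[:k]


rng = np.random.default_rng(0)
latent = rng.normal(size=(200, 2))  # 2 hidden factors (assumed)
mixing = rng.normal(size=(2, 10))  # spread across 10 observed features
X = latent @ mixing + 0.01 * rng.normal(size=(200, 10))  # plus a little noise

Z, ratio = pca(X, 2)
print(Z.shape, ratio.sum())
```

Here 10 columns shrink to 2 with almost no information lost, so a downstream model has far fewer inputs to learn from.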

By Tom O'Connor

“Of course, we are supporters,” a Hezbollah spokesperson told Newsweek. “But I don’t think they’re in need of our people. The numbers are available. All the rockets and capabilities are in the hands of the resistance fighters in Palestine.”

Hezbollah leadership also felt there was more to come.

In remarks recently aired by the group’s affiliated Al Manar outlet, Hezbollah Executive Council head Hashem Safieddine said “the resistance today outlines the equations of victory and the upcoming conquests, and the spirit of resistance is manifested in Gaza today, in Jerusalem and the West Bank, and all of Palestine is witnessing resistance today.”

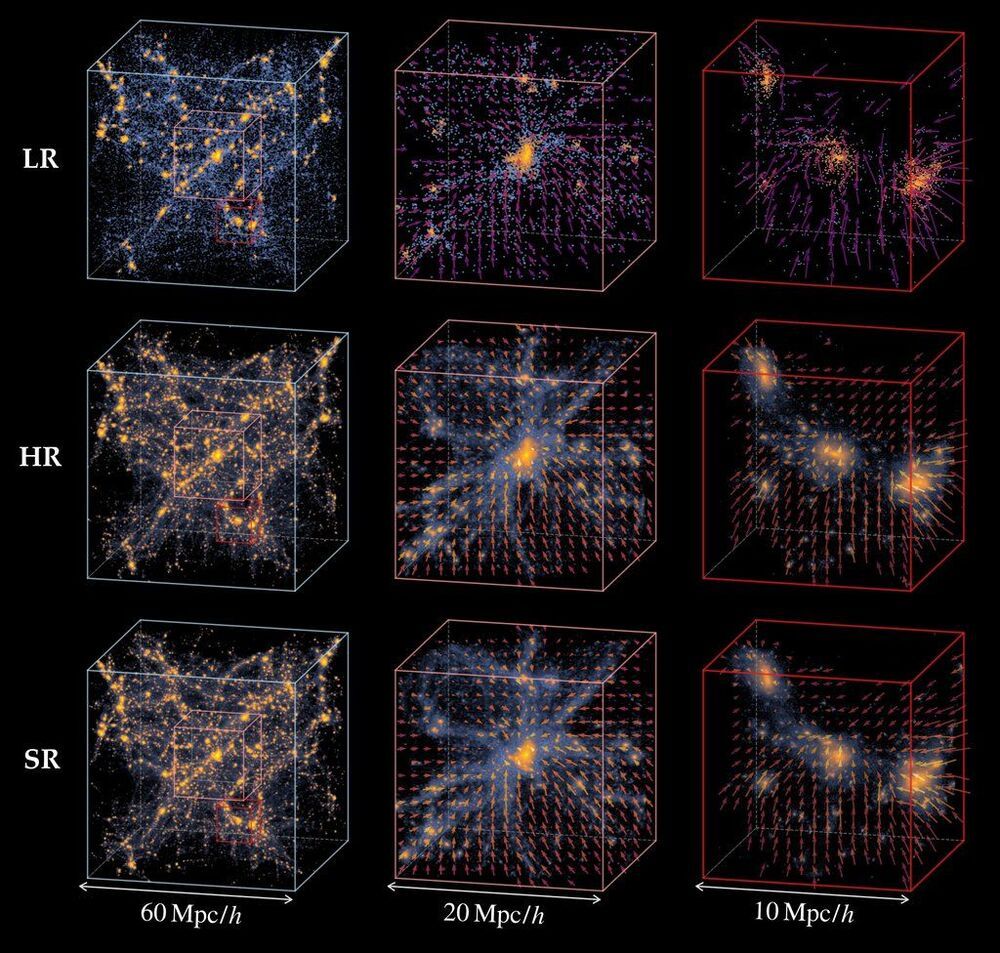

Cosmologists love universe simulations. Even models covering hundreds of millions of light years can be useful for understanding fundamental aspects of cosmology and the early universe. There's just one problem: they're extremely computationally intensive. A 500-million-light-year swath of the universe could take more than 3 weeks to simulate. Now, scientists led by Yin Li at the Flatiron Institute have developed a way to run these cosmically huge models about 1000 times faster. That same 500-million-light-year swath could then be simulated in 36 minutes.

Older algorithms were slow partly because of a tradeoff. Existing models could simulate either a very detailed, very small slice of the cosmos or a larger slice at coarse resolution. They could provide high resolution or a large volume to study, but not both.

To overcome this dichotomy, Dr. Li turned to an AI technique called a generative adversarial network (GAN). A GAN pits two neural networks against each other. One is a generator, which produces synthetic data (here, high-resolution detail added to fast low-resolution simulations). The other is a discriminator, which tries to tell the generator's output apart from real high-resolution simulations. The two are trained in alternation, each updated to beat the other. With enough training, the generator's output becomes hard to distinguish from the real thing.
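The adversarial setup can be summarized by the two loss functions that the networks take turns minimizing. The sketch below computes the standard binary cross-entropy GAN losses from the discriminator's output probabilities. It uses the common "non-saturating" form for the generator. The networks, the optimizer and the training loop are omitted. Nothing here reflects the specific architecture in Dr. Li's work.

```python
import numpy as np


def discriminator_loss(d_real, d_fake, eps=1e-12):
    """Binary cross-entropy: D should output ~1 on real samples and ~0 on generated ones."""
    return -np.mean(np.log(d_real + eps)) - np.mean(np.log(1.0 - d_fake + eps))


def generator_loss(d_fake, eps=1e-12):
    """Non-saturating generator loss: G is rewarded when D labels its samples as real."""
    return -np.mean(np.log(d_fake + eps))


# A discriminator that cannot tell real from fake outputs 0.5 everywhere,
# which gives the equilibrium value 2 ln 2 for its loss.
print(round(discriminator_loss(np.full(4, 0.5), np.full(4, 0.5)), 3))  # 1.386
```

In training, gradient steps on `discriminator_loss` sharpen the discriminator. Steps on `generator_loss` push the generator toward samples the discriminator accepts as real.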