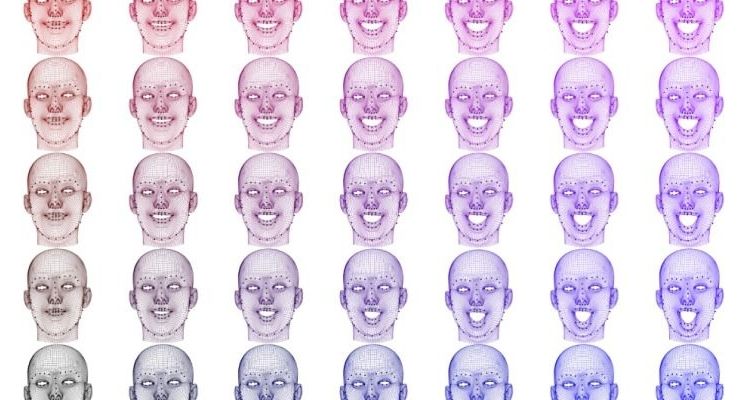

As moviemaking becomes as much a science as an art, moviemakers need ever-better ways to gauge audience reactions. Did they enjoy it? How much… exactly? At minute 42? A system from Caltech and Disney Research uses a facial-expression-tracking neural network to learn and predict how audience members react, perhaps setting the stage for a new generation of Nielsen ratings.

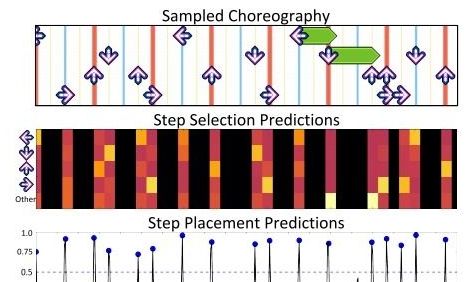

The research project, just presented at IEEE’s Computer Vision and Pattern Recognition conference in Hawaii, demonstrates a new method by which facial expressions in a theater can be reliably and relatively simply tracked in real time.

It uses what’s called a factorized variational autoencoder. I’m not even going to try to explain the math, but it’s better than existing methods at capturing the essence of complex things like faces in motion.
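For readers who do want a peek at the idea, here is a minimal, hypothetical sketch of what "factorized" can mean in this setting. It assumes that each viewer gets a latent vector `u_i`, each moment of the film gets a latent vector `v_t`, and the face of viewer i at moment t is decoded from their elementwise product. Every name, dimension, and the toy `tanh` decoder below are illustrative stand-ins, not the researchers' code. A real model would learn these factors from tracked faces using a neural-network decoder and a variational training objective, and this sketch omits both.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """Draw one sample mu + sigma * eps, eps ~ N(0, 1): the standard VAE reparameterization trick."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def factorized_latent(u_i, v_t):
    """Hypothetical factorization: the latent code for viewer i at frame t is u_i * v_t (elementwise)."""
    return [a * b for a, b in zip(u_i, v_t)]


def decode(z, weights, bias):
    """Toy nonlinear decoder tanh(W z + b), standing in for a learned neural network.

    Each output value plays the role of one facial-landmark coordinate.
    """
    return [math.tanh(sum(w * zk for w, zk in zip(row, z)) + b)
            for row, b in zip(weights, bias)]


def predict_reactions(viewer_factors, frame_factors, weights, bias):
    """Decode a landmark vector for every (viewer, frame) pair: grid[i][t]."""
    return [[decode(factorized_latent(u, v), weights, bias) for v in frame_factors]
            for u in viewer_factors]


# Illustrative sizes (assumed, not from the research): latent dim K, landmark dim D.
K, D, NUM_VIEWERS, NUM_FRAMES = 3, 4, 2, 5
rng = random.Random(0)

# Sample each factor from N(0, 1) (mu = 0, log_var = 0); a trained model would
# instead learn its own mu and log_var for every viewer and every frame.
viewer_factors = [reparameterize([0.0] * K, [0.0] * K, rng) for _ in range(NUM_VIEWERS)]
frame_factors = [reparameterize([0.0] * K, [0.0] * K, rng) for _ in range(NUM_FRAMES)]
weights = [[rng.gauss(0.0, 1.0) for _ in range(K)] for _ in range(D)]
bias = [0.0] * D

grid = predict_reactions(viewer_factors, frame_factors, weights, bias)
print(f"{len(grid)} viewers x {len(grid[0])} frames x {len(grid[0][0])} landmark values")
```

The appeal of this split, at least in the sketch, is that a viewer's vector is shared across the whole film. Once it has been estimated from someone's first few minutes, it can be paired with the vectors for later moments to guess reactions that viewer hasn't shown yet.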
