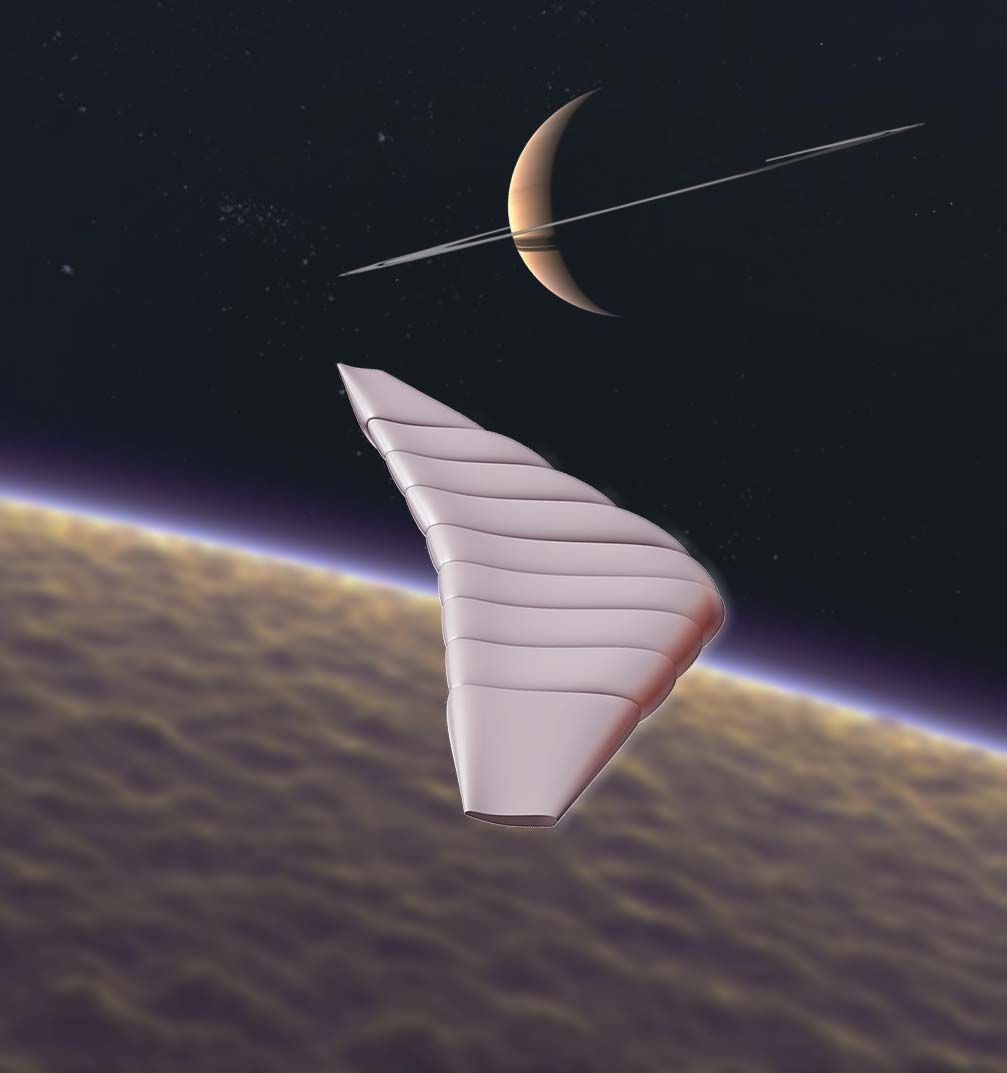

“As the long-running Cassini mission enters its last year at Saturn, NASA is moving forward with an early-stage technology study to send a drone to its moon Titan.”

Another update on controlling drones with a brain-machine interface (BMI).

Using a wireless interface, operators control multiple drones by thinking of various tasks.

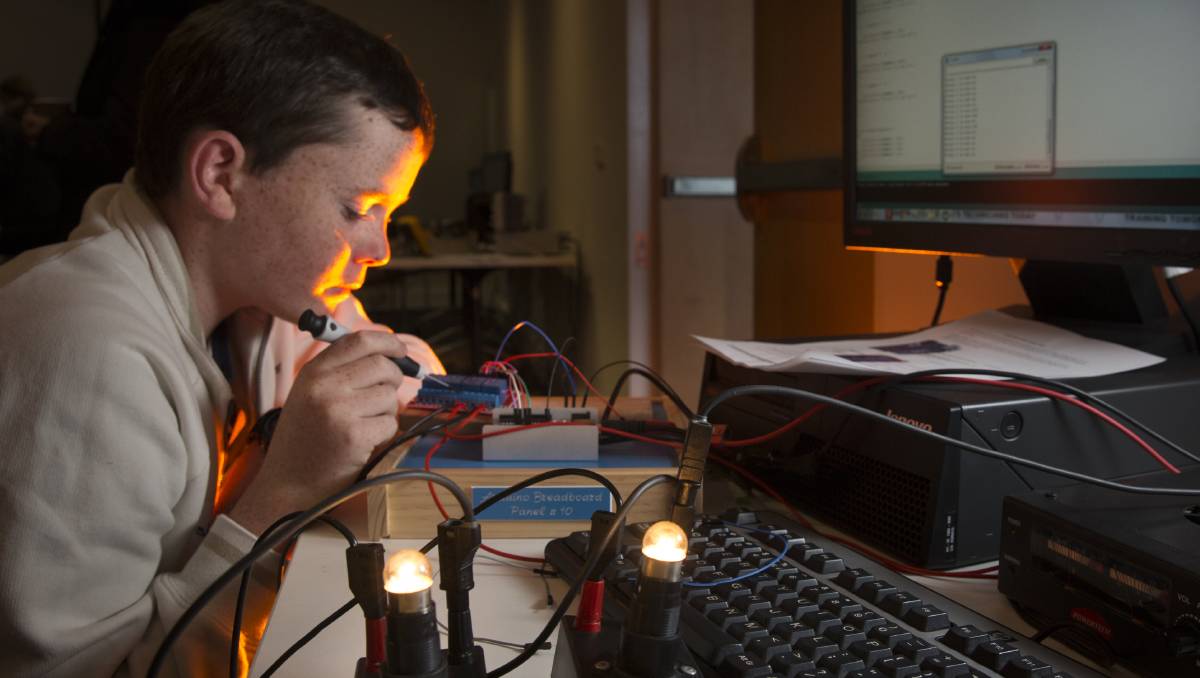

A researcher at Arizona State University has discovered how to control multiple robotic drones using the human brain.

A controller wears a skull cap outfitted with 128 electrodes wired to a computer. The device records electrical brain activity. If the controller moves a hand or thinks of something, certain areas light up.

Nice read by Microsoft on their BMI efforts.

I have been reading a lot about brain interfaces, including that the Tesla Model S can be summoned with the brain and that people have started holding competitions with drones controlled by brain waves. I recently acquired an Emotiv Insight®, shown in Figure 1, and have been doing some testing with it.

Figure 1, brain interface Emotiv Insight® Microsoft Azure

It really does work pretty well. I was able to get it up and running in less than an hour, and the online tool (cpanel.emotivinsight.com/BTLE) let me fit the device on my head and make sure all the sensors were connected, as shown in Figure 2.

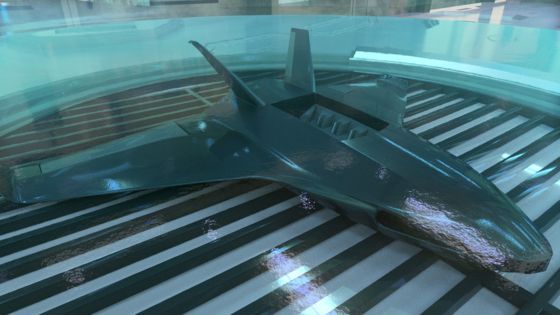

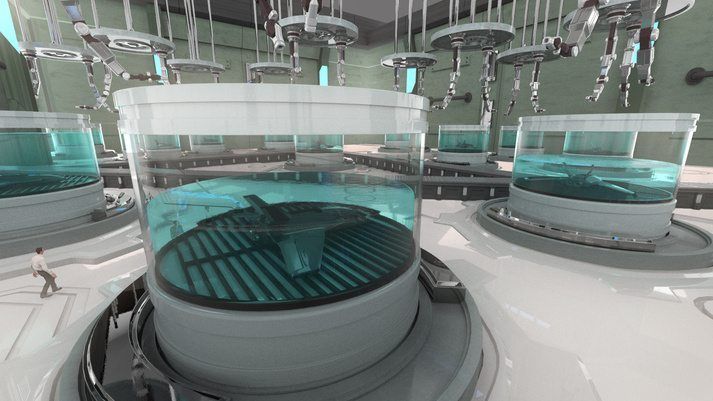

BAE Systems and a professor at Glasgow University have revealed a way to literally grow drones with an advanced form of chemical 3D printing.

The news has already swept the mainstream news sites, even though this is little more than a theoretical exercise right now. Professor Lee Cronin, the man behind the concept, freely admits that he has a mountain to climb to turn this dream into a reality.

The video, then, which depicts a pair of printer heads laying the absolute basics in a vat before the drone literally grows from almost nothing, is really a pipe dream right now.

During a disaster situation, first responders benefit from one thing above anything else: accurate information about the environment that they are about to enter. Having foreknowledge of specific building layouts and the locations of impassable obstacles, fires or chemical spills can often be the only thing between life and death for anyone trapped inside. Currently, first responders need to rely on their own experience and observations, or possibly on a drone sent in ahead of them that sends back an unreliable 2D video feed. Unfortunately, neither option is optimal, and sadly many victims in a disaster situation will likely perish before they are discovered or the area is deemed safe enough to enter.

But a team at the Defense Advanced Research Projects Agency (DARPA) has developed technology that can offer first responders the option of exploring a disaster area without putting themselves at any risk. Virtual Eye is a software system that can capture and transmit a video feed and convert it into a real-time 3D virtual reality experience. It is made possible by combining cutting-edge 3D imaging software, powerful mobile graphics processing units (GPUs) and the video feed from two cameras, any two cameras. This allows first responders (soldiers, firefighters or anyone, really) to walk through a real environment like a room, bunker or any enclosed area virtually, without needing to physically enter.

It sounds like an idea for a science fiction film, but here in the UK scientists and engineers are spending time and money to see if they can do exactly that.

British warplanes are already flying with parts made from a 3D printer. Researchers are already using that same technology to build drones.

The military advantage is obvious: building equipment quickly and close to the battlefield, without long waits and long supply chains, gives you an enormous edge over any enemy.