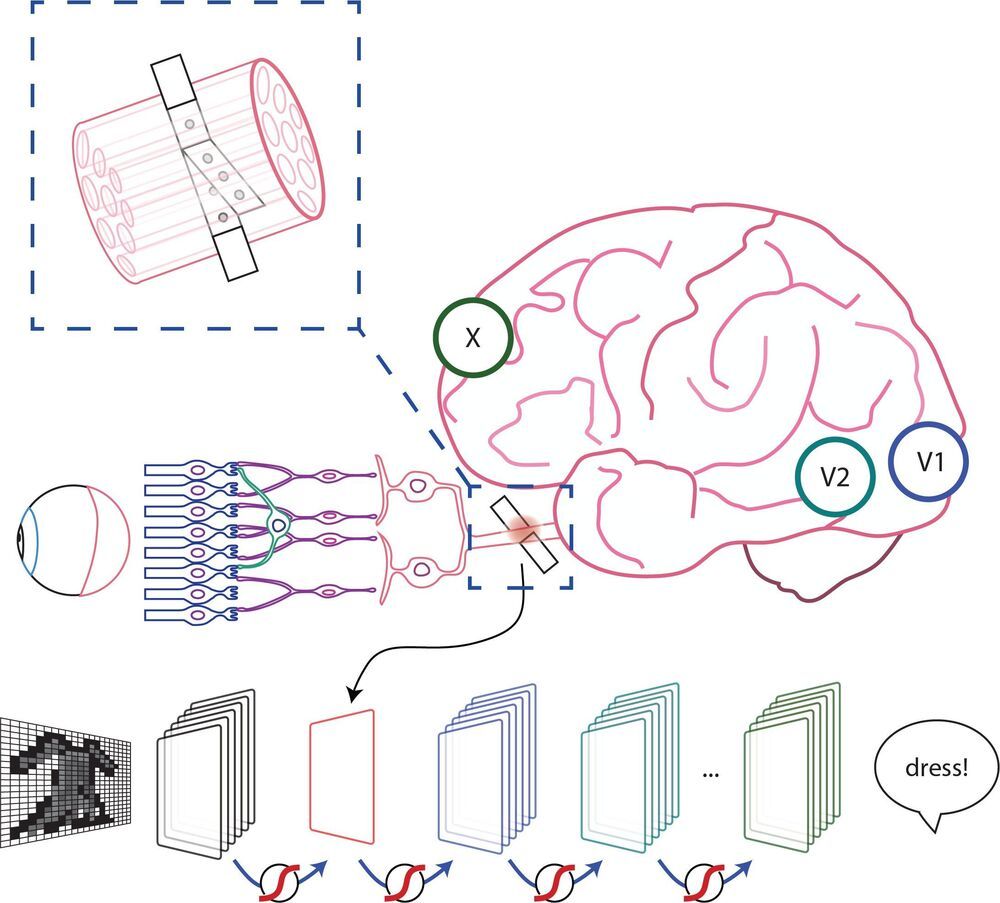

Stimulation of the nervous system with neurotechnology has opened up new avenues for treating human disorders. Examples include prosthetic arms and legs that restore the sense of touch in amputees, prosthetic fingertips that provide detailed sensory feedback with varying touch resolution, and intraneural stimulation that helps the blind by giving sensations of sight.

Scientists in a European collaboration have shown that optic nerve stimulation is a promising neurotechnology to help the blind, with the constraint that current technology can provide only simple visual signals.

Nevertheless, the scientists’ vision (no pun intended) is to design these simple visual signals to be meaningful in assisting the blind with daily living. Optic nerve stimulation also avoids invasive procedures like directly stimulating the brain’s visual cortex. But how does one go about optimizing stimulation of the optic nerve to produce consistent and meaningful visual sensations?

Now, the results of a collaboration between EPFL, Scuola Superiore Sant’Anna and Scuola Internazionale Superiore di Studi Avanzati, published today in Patterns, show that a new stimulation protocol of the optic nerve is a promising way to develop personalized visual signals to help the blind, signals that also take into account signals from the visual cortex. For the moment, the protocol has been tested on convolutional neural networks (CNNs), artificial neural networks known to simulate the entire visual system and usually used in computer vision for detecting and classifying objects. The scientists also performed psychophysical tests, which imitate what one would see from optic nerve stimulation, on ten healthy subjects, showing that successful object identification is consistent with the results obtained from the CNNs.

“We are not just trying to stimulate the optic nerve to elicit a visual perception,” explains Simone Romeni, EPFL scientist and first author of the study. “We are developing a way to optimize stimulation protocols that takes into account how the entire visual system responds to optic nerve stimulation.”

“The research shows that you can optimize optic nerve stimulation using machine learning approaches. It shows more generally the full potential of machine learning to optimize stimulation protocols for neuroprosthetic devices,” continues Silvestro Micera, EPFL Bertarelli Foundation Chair in Translational Neural Engineering and Professor of Bioelectronics at the Scuola Superiore Sant’Anna.