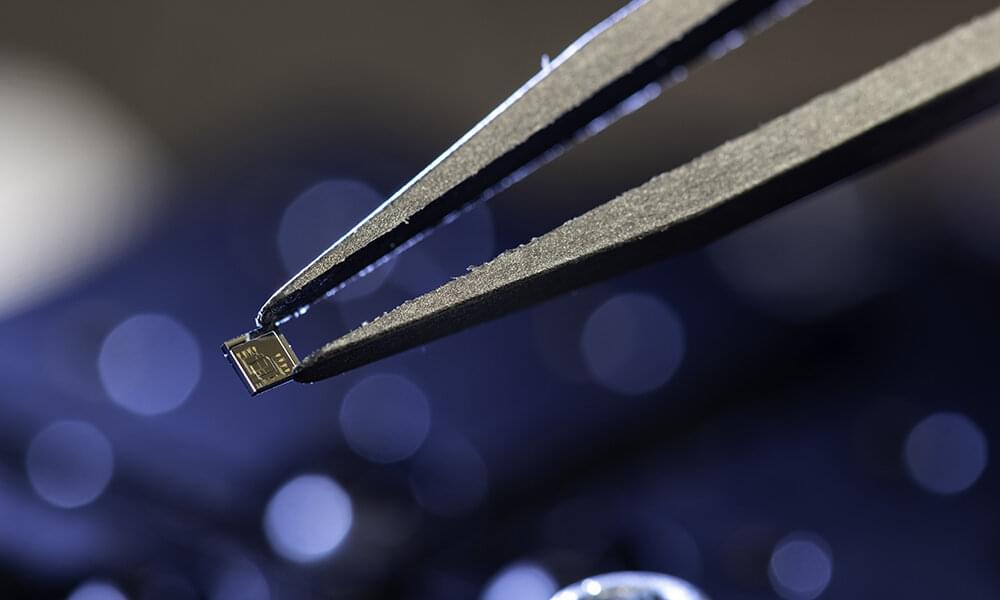

Now that crypto miners and their scalping ilk have succeeded in taking all of our precious GPU stock, it appears they’re now setting their sights on one more thing gamers cherish: the AMD CPU supply. According to a report in the UK’s Bitcoin Press, part of the reason it’s so hard to find a current-gen AMD CPU for sale anywhere is a cryptocurrency named Raptoreum, which is mined on the CPU instead of an ASIC or a GPU. Apparently, its mining is sped up significantly by the large L3 cache embedded in CPUs such as AMD’s Ryzen, Epyc, and Threadripper.

Raptoreum was designed as an anti-ASIC currency: its developers wanted to keep the more expensive hardware solutions off their blockchain, since they believed such hardware lowered profits for everyone. To accomplish this they chose the Ghostrider mining algorithm, which combines the CryptoNight and x16r algorithms, and threw in some unique code to make it heavily randomized, hence its preference for L3 cache.

In case you weren’t aware, AMD’s high-end CPUs have more cache than their competitors from Intel, making them a hot item for miners of this specific currency. For example, a chip like the Threadripper 3990X has a chonky 256MB of L3 cache, but since that’s a $5,000 CPU, miners are settling for the still-beefy Ryzen chips. A CPU like the Ryzen 5900X has a generous 64MB of L3 cache, compared to just 30MB on Intel’s Alder Lake CPUs and just 16MB on Intel’s 11th-gen chips. Several other AMD CPUs have 64MB of L3 cache too, not just the flagship silicon, including the previous-gen Ryzen 9 3900X. The really affordable models, such as the 5800X, have just 32MB of L3 cache, however.
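To put those numbers side by side, here is a minimal Python sketch that ranks the chips by L3 cache and shows each one relative to Alder Lake. It uses only the cache figures quoted above; the dictionary labels and the helper names `cache_ratio` and `ranked_by_cache` are illustrative choices, and nothing here measures actual mining performance.

```python
# L3 cache sizes in MB, taken from the figures quoted in this article.
# The labels are illustrative; the Intel entries are the totals cited above.
L3_CACHE_MB = {
    "Threadripper 3990X": 256,
    "Ryzen 9 5900X": 64,
    "Ryzen 9 3900X": 64,
    "Ryzen 7 5800X": 32,
    "Intel Alder Lake": 30,
    "Intel 11th-gen": 16,
}


def cache_ratio(chip, baseline="Intel Alder Lake"):
    """Return how many times more L3 cache `chip` has than `baseline`."""
    return L3_CACHE_MB[chip] / L3_CACHE_MB[baseline]


def ranked_by_cache():
    """Return chip names sorted from most to least L3 cache."""
    return sorted(L3_CACHE_MB, key=L3_CACHE_MB.get, reverse=True)


if __name__ == "__main__":
    for chip in ranked_by_cache():
        size = L3_CACHE_MB[chip]
        print(f"{chip:20s} {size:4d} MB  {cache_ratio(chip):.2f}x Alder Lake")
```

By this rough measure, the 5900X and 3900X carry a bit more than twice the L3 cache of Alder Lake, while the 5800X is roughly on par with it.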