In the early days of the space race in the 1960s, NASA used satellites to map the geography of the moon. A better understanding of its geology, however, came only when astronauts actually walked on the moon, culminating in astronaut and geologist Harrison Schmitt's exploration of the lunar surface during the Apollo 17 mission in 1972.

Image credit: Scientific American

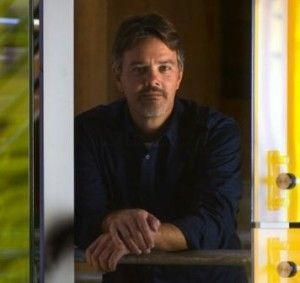

Today, neuroscientist Dr. Gregory Hickok believes his field is on the verge of a comparable advance. Scientists have historically built a geographic map of the brain's functional systems. Computational neuroanatomy, Hickok says, is now digging deeper into the brain's geology. Its goal is to understand how the different regions interact computationally to give rise to complex behaviors.

“Computational neuroanatomy is kind of working towards that level of description from the brain map perspective,” Hickok said. “The typical function maps you see in textbooks are cartoon-like. We’re trying to take those mountain areas and, instead of relating them to labels for functions like language, we’re trying to map them on — and relate them to — stuff that the computational neuroscientists are doing.”

Hickok pointed to several advances that computational neuroanatomy has already produced. Researchers have mapped the visual system to determine how the visual cortex codes information and performs computations. They have also built neurally realistic approximations of the circuits that carry out motor control. Researchers are also building spiking network models, which simulate the activity of individual neurons. Simulations built from thousands of these model neurons have been used to control robots in a way that resembles how the brain might perform the same task.

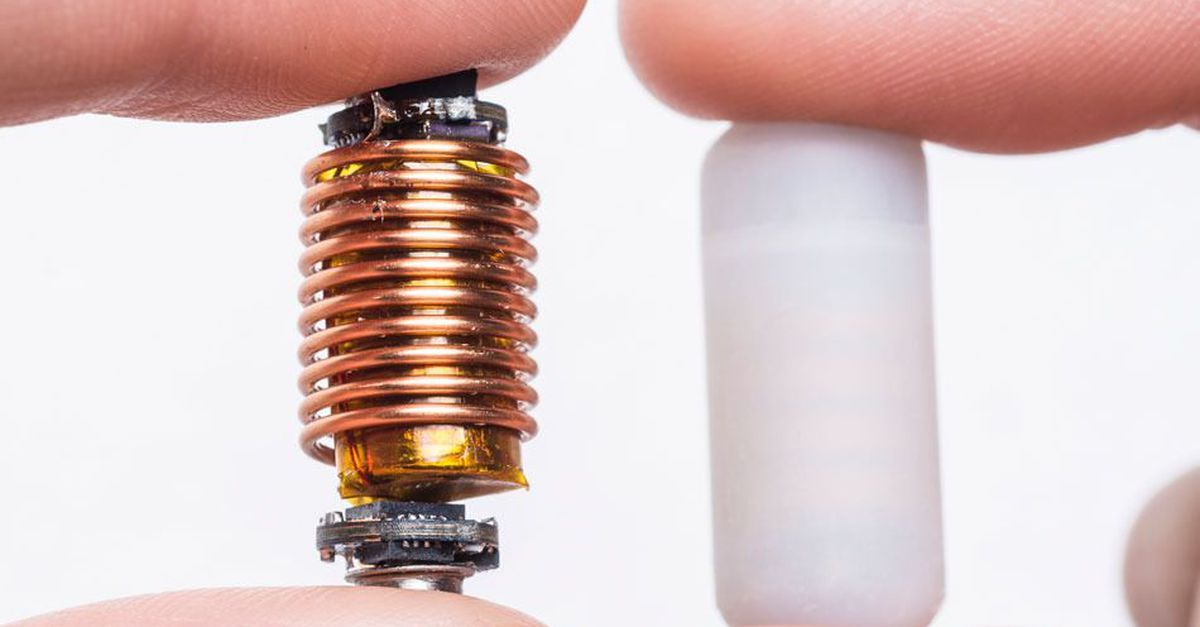

That research is also driving innovation in artificial intelligence, Hickok says. For example, brain-inspired models are being used to build better AI systems for storing and retrieving information, as well as for automated speech recognition. The same work could help produce better cochlear implants and other neural prostheses, a field that is only beginning to be explored.

“In terms of neural-prostheses that can take advantage of this stuff, if you look at patterns and activity in neurons or regions in cortex, you can decode information from those patterns of activity, (such as) motor plans or acoustic representation,” Hickok said. “So it’s possible now to implant an electrode array in the motor cortex of an individual who is locked in, so to speak, and they can control a robotic arm.”

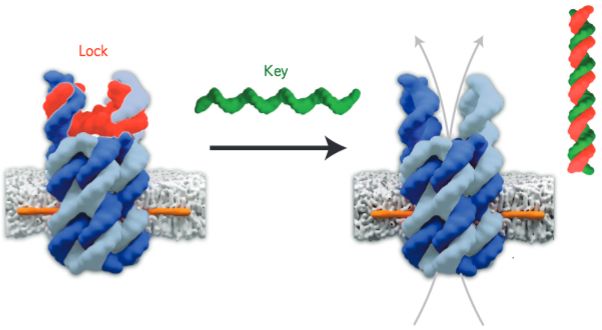

More specifically, Hickok is interested in applying computational neuroanatomy to speech and language. He says that in some patients who have lost the ability to produce fluent speech, the brain areas involved are still intact. The problem is that they have been disconnected and are no longer “talking to each other.” Once researchers understand how these circuits are organized and what they compute, Hickok believes it may one day be possible to implant electrode arrays that reconnect those areas as a form of rehabilitation.

Looking ahead, Hickok expects continued development of neural prostheses such as cochlear implants, artificial retinas, and artificial motor control circuits. One hurdle is that scientists are still working out how the brain carries out its computations. The “squishy” biological matter of the brain seems to operate very differently from the precise circuitry of digital computers.

Although multiple global brain projects are underway and making progress (Wired’s Katie Palmer gives a succinct overview), Hickok emphasizes that we are still nowhere close to re-creating the human mind. “Presumably, this is what evolution has done over millions of years to configure systems that allow us to do lots of different things and that is going to (sic) take a really long time to figure out,” he said. “The number of neurons involved, 80 billion in the current estimate, trillions of connections, lots and lots of moving parts, different strategies for coding different kinds of computations… it’s just ridiculously complex and I don’t see that as something that’s easily going to give up its secrets within the next couple of generations.”