“As part of the BBC’s Intelligent Machines season, Google’s Eric Schmidt has penned an exclusive article on how he sees artificial intelligence developing, why it is experiencing such a renaissance and where it will go next.”

Although it was made in 1968, to many people the renegade HAL 9000 computer in the film 2001: A Space Odyssey still represents the potential danger of real-life artificial intelligence. However, according to mathematician, computer visionary and author Dr. John MacCormick, the scenario of computers run amok depicted in the film – and in just about every other genre of science fiction – will never happen.

“Right from the start of computing, people realized these things were not just going to be crunching numbers, but could solve other types of problems,” MacCormick said during a recent interview with TechEmergence. “They quickly discovered computers couldn’t do things as easily as they thought.”

While MacCormick is quick to acknowledge modern advances in artificial intelligence, he’s also very conscious of its ongoing limitations, specifically replicating human vision. “The sub-field where we try to emulate the human visual system turned out to be one of the toughest nuts to crack in the whole field of AI,” he said. “Object recognition systems today are phenomenally good compared to what they were 20 years ago, but they’re still far, far inferior to the capabilities of a human.”

To compensate for its limitations, MacCormick notes that other technologies have been developed that, while they’re considered by many to be artificially intelligent, don’t rely on AI. As an example, he pointed to Google’s self-driving car. “If you look at the Google self-driving car, the AI vision systems are there, but they don’t rely on them,” MacCormick said. “In terms of recognizing lane markings on the road or obstructions, they’re going to rely on other sensors that are more reliable, such as GPS, to get an exact location.”

Although it may not specifically rely on AI, MacCormick still believes that with new and improved algorithms emerging all the time, self-driving cars will eventually become a very real part of our daily fabric. And the incremental gains being achieved to make real AI systems won’t be limited to just self-driving cars. “One of the areas where we’re seeing pretty consistent improvement is translation of human languages,” he said. “I believe we’re going to continue to see high quality translations between human languages emerging. I’m not going to give a number in years, but I think it’s doable in the middle term.”

Ultimately, the uses and applications of artificial intelligence will still remain in the hands of their creators, according to MacCormick. “I’m an unapologetic optimist. I don’t think AIs are going to get out of control of humans and start doing things on their own,” he said. “As we get closer to systems that rival humans, they will still be systems that we have designed and are capable of controlling.”

That optimistic outlook would seemingly put MacCormick at odds with the views of the potential dangers of AI that have been voiced recently by the likes of Elon Musk, Stephen Hawking and Bill Gates. However, MacCormick says he agrees with their point that the ethical ramifications of artificial intelligence should be considered and guidance protocols developed.

“Everyone needs to be thinking about it and cooperating to be sure that we’re moving in the right direction,” MacCormick said. “At some point, all sorts of people need to be thinking about this, from philosophers and social scientists to technologists and computer scientists.”

MacCormick didn’t mince words when he cited the area of AI research where those protocols are most needed. The most obvious sub-field where protocols need to be in place, according to MacCormick, is military robotics. “As we become capable of building systems that are somewhat autonomous and can be used for lethal force in military conflicts, then the entire ethics of what should and should not be done really changes,” he said. “We need to be thinking about this and try to formulate the correct way of using autonomous systems.”

In the end, MacCormick’s optimistic view of the future, and the positive potentials of artificial intelligence, beams through clouds of uncertainty. “I like to take the optimistic view that we’ll be able to continue building these things and making them into useful tools that aren’t the same as humans, but have extraordinary capabilities,” MacCormick said. “And we can guide them and control them and use them for positive benefit.”

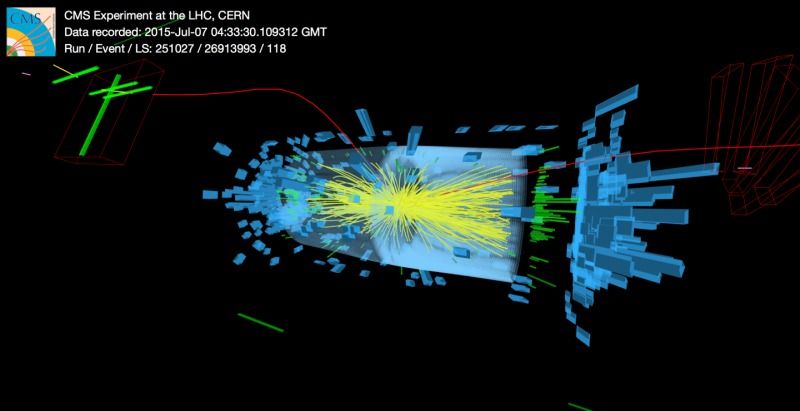

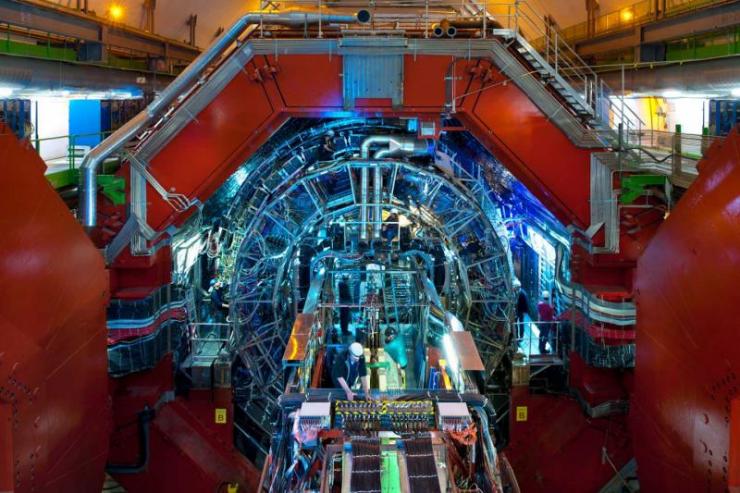

July 2015, as you know, was all systems go for CERN’s Large Hadron Collider (LHC). On a Saturday evening, proton collisions resumed at the LHC and the experiments began collecting data once again. With the observation of the Higgs already in our back pocket, it was time to turn up the dial and push the LHC into double-digit TeV energy levels. From a personal standpoint, I didn’t blink an eye hearing that large amounts of data were being collected at every turn. But I was quite surprised to learn the amount being collected and processed each day: about one petabyte.

Approximately 600 million times per second, particles collide within the LHC. The digitized summary of each collision is recorded as a “collision event”. Physicists must then sift through the 30 petabytes or so of data produced annually to determine if the collisions have thrown up any interesting physics. Needless to say, the hunt is on!

The Data Center processes about one Petabyte of data every day — the equivalent of around 210,000 DVDs. The center hosts 11,000 servers with 100,000 processor cores. Some 6000 changes in the database are performed every second.
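As a quick sanity check on those figures, the short Python sketch below works out what “one petabyte per day” implies. It assumes decimal units (1 PB = 10^15 bytes, 1 GB = 10^9 bytes), which the article does not specify, and the variable names are purely illustrative.

```python
# Back-of-the-envelope check of the Data Center figures quoted above.
# Assumption (not stated in the article): decimal units,
# i.e. 1 PB = 10**15 bytes and 1 GB = 10**9 bytes.
PETABYTE = 10**15
GIGABYTE = 10**9
SECONDS_PER_DAY = 24 * 60 * 60

dvd_count = 210_000       # DVDs said to be equivalent to one petabyte
bytes_per_day = PETABYTE  # about one petabyte processed per day

# Capacity each DVD would need for the comparison to hold.
gb_per_dvd = bytes_per_day / dvd_count / GIGABYTE

# Average rate if the daily total were spread evenly over 24 hours.
avg_gb_per_second = bytes_per_day / SECONDS_PER_DAY / GIGABYTE

print(f"Implied DVD capacity: {gb_per_dvd:.2f} GB")
print(f"Average processing rate: {avg_gb_per_second:.2f} GB/s")
```

The implied capacity comes out close to that of a standard single-layer DVD (4.7 GB), so the 210,000 figure hangs together, and the daily total averages out to somewhat more than 11 GB every second.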

[youtube_sc url=“https://youtu.be/0mgXNgD3JFU?list=PL7DEC46BD7058D7BB”]

With experiments at CERN generating such colossal amounts of data, the Data Center stores it and then sends it around the world for analysis. CERN simply does not have the computing or financial resources to crunch all of the data on site, so in 2002 it turned to grid computing to share the burden with computer centres around the world. The Worldwide LHC Computing Grid (WLCG) – a distributed computing infrastructure arranged in tiers – gives a community of over 8000 physicists near real-time access to LHC data. The Grid runs more than two million jobs per day. At peak rates, 10 gigabytes of data may be transferred from its servers every second.

By early 2013 CERN had increased the power capacity of the centre from 2.9 MW to 3.5 MW, allowing the installation of more computers. In parallel, improvements in energy-efficiency implemented in 2011 have led to an estimated energy saving of 4.5 GWh per year.

Image: CERN

[youtube_sc url=“https://youtu.be/jDC3-QSiLB4”]

PROCESSING THE DATA (processing info via CERN): Subsequently, hundreds of thousands of computers from around the world come into action: harnessed in a distributed computing service, they form the Worldwide LHC Computing Grid (WLCG), which provides the resources to store, distribute, and process the LHC data. WLCG combines the power of more than 170 collaborating centres in 36 countries around the world, which are linked to CERN. Every day WLCG processes more than 1.5 million ‘jobs’, corresponding to a single computer running for more than 600 years.
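To get a feel for that last comparison, here is a rough sketch of the arithmetic in Python. It treats the quoted “more than” values as exact and assumes a 365.25-day year, so the results are only order-of-magnitude estimates, not figures from CERN.

```python
# Rough arithmetic behind "1.5 million jobs a day = one computer for 600 years".
# Assumptions: the quoted lower bounds are taken as exact values,
# and a year is 365.25 days long.
HOURS_PER_YEAR = 365.25 * 24

jobs_per_day = 1_500_000
single_computer_years = 600

# Total computing time done in one day, in single-computer hours.
total_cpu_hours = single_computer_years * HOURS_PER_YEAR

# Average length of one job under these assumptions.
hours_per_job = total_cpu_hours / jobs_per_day

# Computers that would have to run flat out for 24 hours to do that work.
computers_needed = total_cpu_hours / 24

print(f"Average job length: {hours_per_job:.1f} hours")
print(f"Computers busy around the clock: {computers_needed:,.0f}")
```

Under these assumptions a typical job runs for a few hours, and the day’s workload would keep a couple of hundred thousand computers busy around the clock, which fits the “hundreds of thousands of computers” mentioned above.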

The data flow from all four experiments for Run 2 is anticipated to be about 25 GB/s (gigabytes per second).

In July, the LHCb experiment reported observation of an entire new class of particles:

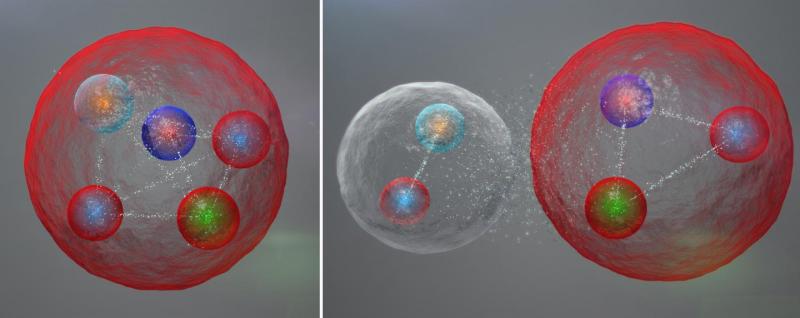

Exotic Pentaquark Particles (Image: CERN)

Possible layout of the quarks in a pentaquark particle. The five quarks might be tightly bound (left). They might also be assembled into a meson (one quark and one antiquark) and a baryon (three quarks), weakly bound together.

The LHCb experiment at CERN’s LHC has reported the discovery of a class of particles known as pentaquarks. In short, “The pentaquark is not just any new particle,” said LHCb spokesperson Guy Wilkinson. “It represents a way to aggregate quarks, namely the fundamental constituents of ordinary protons and neutrons, in a pattern that has never been observed before in over 50 years of experimental searches. Studying its properties may allow us to understand better how ordinary matter, the protons and neutrons from which we’re all made, is constituted.”

[youtube_sc url=“https://youtu.be/02uOm5Kl5ls”]

Our understanding of the structure of matter was revolutionized in 1964 when American physicist Murray Gell-Mann proposed that a category of particles known as baryons, which includes protons and neutrons, is composed of three fractionally charged objects called quarks, and that another category, mesons, is formed of quark-antiquark pairs. This quark model also allows the existence of other quark composite states, such as pentaquarks composed of four quarks and an antiquark.
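The quark bookkeeping described above is easy to check with a few lines of Python. This is a minimal sketch: the quark charges are the standard values (+2/3 for up and charm, -1/3 for down, in units of the proton charge), and the pentaquark content used here (two up quarks, one down, one charm and one anti-charm) is the composition LHCb reported, which is not spelled out in the text above. The function and variable names are made up for illustration.

```python
from fractions import Fraction

# Standard electric charges of the quark flavors used below,
# in units of the proton charge.
QUARK_CHARGE = {
    "u": Fraction(2, 3),   # up
    "d": Fraction(-1, 3),  # down
    "c": Fraction(2, 3),   # charm
}


def charge_and_baryon_number(constituents):
    """Sum charge and baryon number over (flavor, is_antiquark) pairs."""
    charge = Fraction(0)
    baryon_number = Fraction(0)
    for flavor, is_antiquark in constituents:
        sign = -1 if is_antiquark else 1  # antiquarks flip both signs
        charge += sign * QUARK_CHARGE[flavor]
        baryon_number += sign * Fraction(1, 3)
    return charge, baryon_number


particles = {
    # Baryons: three quarks.
    "proton": [("u", False), ("u", False), ("d", False)],
    "neutron": [("u", False), ("d", False), ("d", False)],
    # Meson: a quark and an antiquark.
    "pi+": [("u", False), ("d", True)],
    # Pentaquark: four quarks and an antiquark (content is an assumption
    # taken from the LHCb announcement, not from the text above).
    "pentaquark": [("u", False), ("u", False), ("d", False),
                   ("c", False), ("c", True)],
}

for name, constituents in particles.items():
    q, b = charge_and_baryon_number(constituents)
    print(f"{name}: charge {q}, baryon number {b}")
```

Every combination lands on a whole-number charge even though the individual quarks carry fractional charges, and the pentaquark ends up with the same charge and baryon number as a proton.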

Until now, however, no conclusive evidence for pentaquarks had been seen.

Earlier experiments that have searched for pentaquarks have proved inconclusive. The next step in the analysis will be to study how the quarks are bound together within the pentaquarks.

“The quarks could be tightly bound,” said LHCb physicist Liming Zhang of Tsinghua University, “or they could be loosely bound in a sort of meson-baryon molecule, in which the meson and baryon feel a residual strong force similar to the one binding protons and neutrons to form nuclei.” More studies will be needed to distinguish between these possibilities, and to see what else pentaquarks can teach us!

August 18th, 2015

CERN Experiment Confirms Matter-Antimatter CPT Symmetry

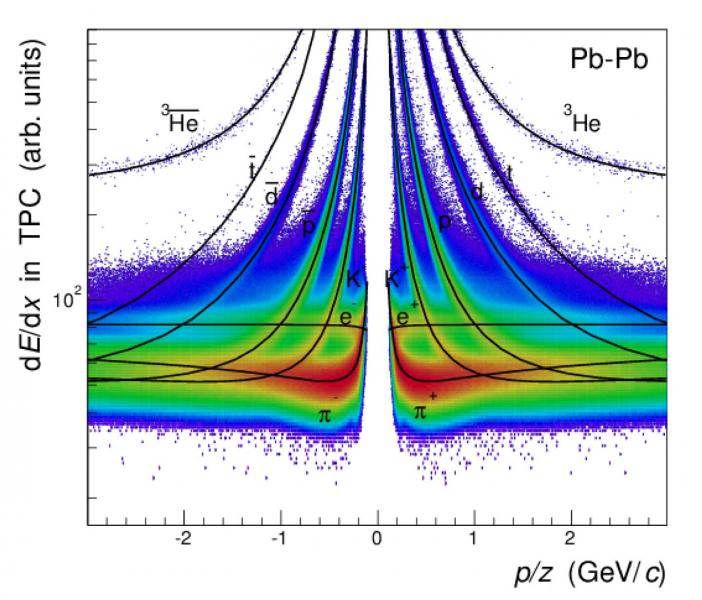

For Light Nuclei, Antinuclei (Image: CERN)

Days after scientists at CERN’s Baryon-Antibaryon Symmetry Experiment (BASE) measured the mass-to-charge ratio of a proton and its antimatter particle, the antiproton, the ALICE experiment at the European organization reported similar measurements for light nuclei and antinuclei.

The measurements, made with unprecedented precision, add to growing scientific data confirming that matter and antimatter are true mirror images.

Antimatter has the same mass as its matter counterpart, but the opposite electric charge. The electron, for instance, has a positively charged antimatter equivalent called the positron. Scientists believe that the Big Bang created equal quantities of matter and antimatter 13.8 billion years ago. However, for reasons yet unknown, matter prevailed, creating everything we see around us today, from the smallest microbe on Earth to the largest galaxy in the universe.

Last week, in a paper published in the journal Nature, researchers reported a significant step toward solving this long-standing mystery of the universe. According to the study, 13,000 measurements over a 35-day period show, with unparalleled precision, that protons and antiprotons have identical mass-to-charge ratios.

The experiment tested a central tenet of the Standard Model of particle physics, known as the Charge, Parity, and Time Reversal (CPT) symmetry. If CPT symmetry is true, a system remains unchanged if three fundamental properties — charge, parity, which refers to a 180-degree flip in spatial configuration, and time — are reversed.

The latest study takes the research on this symmetry further. The ALICE measurements show that CPT symmetry holds true for light nuclei such as deuterons (a hydrogen nucleus with an additional neutron) and antideuterons, as well as for helium-3 nuclei (two protons plus a neutron) and antihelium-3 nuclei. The experiment, which analyzed the curvature of these particles’ tracks in the ALICE detector’s magnetic field and their time of flight, improves on the existing measurements by a factor of up to 100.

IN CLOSING..

A violation of CPT would not only hint at the existence of physics beyond the Standard Model — which isn’t complete yet — it would also help us understand why the universe, as we know it, is completely devoid of antimatter.

UNTIL THEN…

ORIGINAL ARTICLE POSTING via Michael Phillips LinkedIN Pulse @ http://goo.gl/ApdTL6

Quoted: “Sometimes decentralization makes sense.

Filament is a startup that is taking two of the most overhyped ideas in the tech community—the block chain and the Internet of things—and applying them to the most boring problems the world has ever seen. Gathering data from farms, mines, oil platforms and other remote or highly secure places.

The combination could prove to be a powerful one because monitoring remote assets like oil wells or mining equipment is expensive, whether you are using people driving around to manually check gear or trying to use sensitive electronic equipment and a pricey satellite internet connection.

Instead Filament has built a rugged sensor package that it calls a Tap, and technology network that is the real secret sauce of the operation that allows its sensors to conduct business even when they aren’t actually connected to the internet. The company has attracted an array of investors who have put $5 million into the company, a graduate of the Techstars program. Bullpen Capital led the round with Verizon Ventures, Crosslink Capital, Samsung Ventures, Digital Currency Group, Haystack, Working Lab Capital, Techstars and others participating.

“This cluster of technologies is what enables the Taps to perform some pretty compelling stunts, such as send small amounts of data up to 9 miles between Taps and keep a contract inside a sensor for a year or so even if that sensor isn’t connected to the Internet. In practical terms, that might mean that the sensor in a field gathering soil data might share that data with other sensors in nearby fields belonging to other farmers based on permissions the soil sensor has to share that data. Or it could be something a bit more complicated like a robotic seed tilling machine sensing that it was low on seed and ordering up another bag from inventory based on a “contract” it has with the dispensing system inside a shed on the property.

The potential use cases are hugely varied, and the idea of using a decentralized infrastructure is fairly novel. Both IBM and Samsung have tested out using a variation of the blockchain technology for storing data in decentralized networks for connected devices. The idea is that sending all of that data to the cloud and storing it for a decade or so doesn’t always make economic sense, so why not let the transactions and accounting for them happen on the devices themselves?

That’s where the blockchain and these other protocols come in. The blockchain is a great way to store information about a transaction in a distributed manner, and because it’s built into the devices there’s no infrastructure to support for years on end. When combined with mesh radio technologies such as TMesh it also becomes a good way to build out a network of devices that can communicate with each other even when they don’t have connectivity.”
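To make the idea of keeping transaction records on the devices themselves a little more concrete, here is a minimal sketch of a hash-linked ledger in Python. It is not Filament’s implementation, and it leaves out everything a real blockchain needs beyond the chaining itself (signatures, consensus between devices, networking). The record fields and device names are invented for illustration.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, prev_hash, payload):
    """SHA-256 over a canonical JSON encoding of the block contents."""
    record = json.dumps(
        {"index": index, "prev": prev_hash, "payload": payload},
        sort_keys=True,
    )
    return hashlib.sha256(record.encode("utf-8")).hexdigest()


def append_block(chain, payload):
    """Append a block whose hash also covers the previous block's hash."""
    index = len(chain)
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    chain.append({
        "index": index,
        "prev": prev_hash,
        "payload": payload,
        "hash": block_hash(index, prev_hash, payload),
    })


def is_valid(chain):
    """Recompute every hash and check each block links to the one before."""
    prev_hash = GENESIS_HASH
    for index, block in enumerate(chain):
        expected = block_hash(index, prev_hash, block["payload"])
        if (block["index"] != index or block["prev"] != prev_hash
                or block["hash"] != expected):
            return False
        prev_hash = block["hash"]
    return True


# Hypothetical records, loosely modelled on the seed-ordering example above.
ledger = []
append_block(ledger, {"from": "seeder-7", "to": "shed-dispenser", "order": "1 bag of seed"})
append_block(ledger, {"from": "shed-dispenser", "to": "seeder-7", "status": "dispensed"})
print(is_valid(ledger))  # the untouched ledger verifies

ledger[0]["payload"]["order"] = "10 bags of seed"  # tamper with an old record
print(is_valid(ledger))  # the stored hashes no longer match
```

Because each block’s hash covers the hash of the block before it, a device holding a copy of the ledger can detect a changed record without asking a central server, which is the property the quoted passage is leaning on.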

Read the Article, and watch the Video, here > http://fortune.com/2015/08/18/filament-blockchain-iot/

“In one sense, Page and Brin are just formalizing an arrangement that has evidently existed at Google for the past several years—the two of them at the helm of a company largely occupied with seeking out new and strange areas of innovation. The bet, it seems, is that this arrangement will improve the chances that Page and Brin’s unconventional investments will pan out—and that, if they don’t, the rest of the company will be better insulated from its founders’ mistakes. Until then, Sundar Pichai can focus on the boring, plodding business of actually making money.”

Quoted: “Traditional law is a form of agreement. It is an agreement among people and their leaders as to how people should behave. There are also legal contracts between individuals. These contracts are a form of private law that applies to the participants. Both types of agreement are enforced by a government’s legal system.”

“Ethereum is both a digital currency and a programming language. But it is the combination of these ingredients that make it special. Since most agreements involve the exchange of economic value, or have economic consequences, we can implement whole categories of public and private law using Ethereum. An agreement involving transfer of value can be precisely defined and automatically enforced with the same script.”

“When viewed from the future, today’s current legal system seems downright primitive. We have law libraries — buildings filled with words that nobody reads and whose meaning is unclear, even to courts who enforce them arbitrarily. Our private contracts amount to vague personal promises and a mere hope they might be honored.

For the first time, Ethereum offers an alternative. A new kind of law.”

Read the article here > http://etherscripter.com/what_is_ethereum.html

Quoted: “IBM’s first report shows that “a low-cost, private-by-design ‘democracy of devices’ will emerge” in order to “enable new digital economies and create new value, while offering consumers and enterprises fundamentally better products and user experiences.” “According to the company, the structure we are using at the moment already needs a reboot and a massive update. IBM believes that the current Internet of Things won’t scale to a network that can handle hundreds of billions of devices. The operative word is ‘change’ and this is where the blockchain will come in handy.”

Read the article here > https://99bitcoins.com/ibm-believes-blockchain-elegant-solution-internet-of-things/

Hosted by the IEEE Geoscience and Remote Sensing Society, the International Geoscience and Remote Sensing Symposium 2015 (IGARSS 2015) will be held from Sunday, July 26th through Friday, July 31st, 2015 at the Convention Center in Milan, Italy. Milan is also the host city of the EXPO 2015 exhibition, whose theme is “Feeding the planet: energy for life”.