Artificial intelligence doesn’t hold a candle to the human capacity for harm.

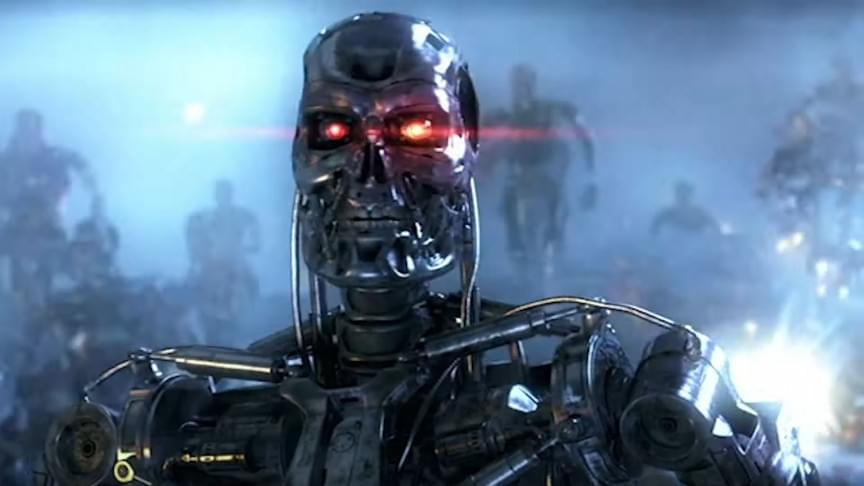

Over the last few years, there has been a great deal of talk about the threat of artificial general intelligence (AGI). An AGI is a system that can understand or learn any intellectual task that a human can, and it is often equated with artificial superintelligence. Experts from seemingly every sector of society have spoken out about these kinds of AI systems, depicting them as Terminator-style robots that will run amok and cause massive death and destruction.

Elon Musk, the SpaceX and Tesla CEO, has frequently railed against the creation of AGI and cast such superintelligent systems in apocalyptic terms. At SXSW in 2018, he called digital superintelligences “the single biggest existential crisis that we face and the most pressing one,” and he said these systems will ultimately be more deadly than a nuclear holocaust. The late Stephen Hawking shared these fears, telling the BBC in 2014 that “the development of full artificial intelligence could spell the end of the human race.”

Fears of artificial intelligence run amok make for compelling apocalypse narratives, but the real dangers of artificial intelligence come more from humans than machines.