Most often, we recognize deep learning as the magic behind self-driving cars and facial recognition, but what about its ability to safeguard the quality of the materials that make up these advanced devices? Professor of Materials Science and Engineering Elizabeth Holm and Ph.D. student Bo Lei have adopted computer vision methods for microstructural images that not only require a fraction of the data deep learning typically relies on but can also save materials researchers an abundance of time and money.

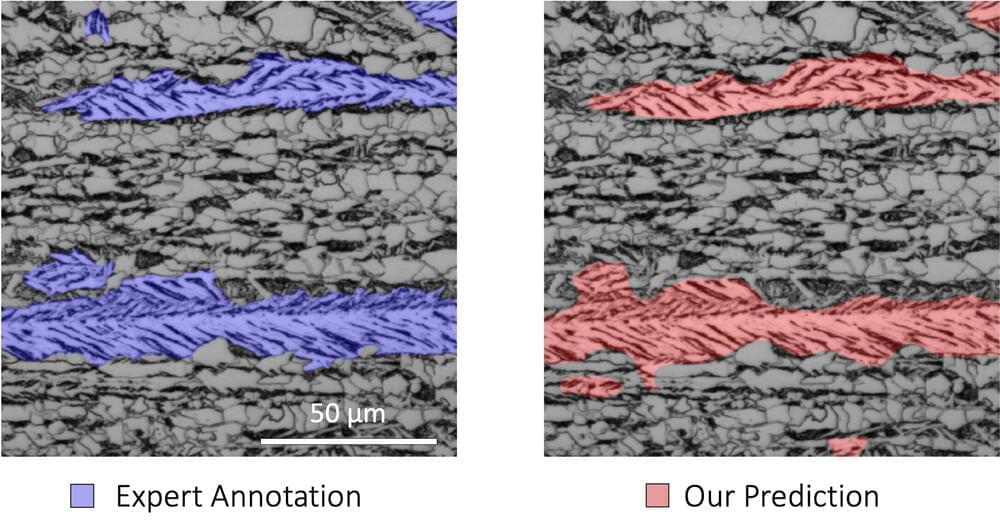

Quality control in materials processing requires the analysis and classification of complex material microstructures. For instance, the properties of some high-strength steels depend on the amount of lath-type bainite in the material. However, identifying bainite in microstructural images is time-consuming and expensive, as researchers must first use two types of microscopy to take a closer look and then rely on their own expertise to identify bainitic regions. “It’s not like identifying a person crossing the street when you’re driving a car,” Holm explained. “It’s very difficult for humans to categorize, so we will benefit a lot from integrating a deep learning approach.”

Their approach is very similar to that of the wider computer-vision community that drives facial recognition. The model is trained on existing material microstructure images so that it can evaluate and classify new ones. While companies like Facebook and Google train their models on millions or billions of images, materials scientists rarely have access to even ten thousand images. Therefore, it was vital that Holm and Lei use a “data-frugal method” and train their model using only 30–50 microscopy images. “It’s like learning how to read,” Holm explained. “Once you’ve learned the alphabet, you can apply that knowledge to any book. We are able to be data-frugal in part because these systems have already been trained on a large database of natural images.”
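
To make the “alphabet” idea concrete, here is a minimal sketch of one common way to be data-frugal: keep an already-trained feature extractor frozen and fit only a small classifier on a few dozen labeled images. This is an illustration under stated assumptions, not Holm and Lei's actual pipeline. The fixed averaging filters are a hypothetical stand-in for a network pretrained on natural images, the 16-pixel “images” are synthetic toy data, and all function names are invented for this example.

```python
import math
import random

# Hypothetical stand-in for a network pretrained on natural images:
# four fixed filters that each average one quarter of a 16-pixel "image".
# These weights are frozen and never updated during training.
FROZEN_FILTERS = [
    [0.25 if 4 * k <= i < 4 * (k + 1) else 0.0 for i in range(16)]
    for k in range(4)
]

def extract_features(image):
    """Apply the frozen filters (the reused 'alphabet') to one image."""
    return [sum(w * p for w, p in zip(f, image)) for f in FROZEN_FILTERS]

def make_image(label, rng):
    """Synthetic toy image: class 1 is brighter on average than class 0."""
    mean = 0.8 if label == 1 else 0.2
    return [rng.gauss(mean, 0.05) for _ in range(16)]

def train_head(features, labels, epochs=200, lr=0.5):
    """Fit a logistic-regression classifier on the frozen features with SGD."""
    weights = [0.0] * len(features[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            z = bias + sum(w * v for w, v in zip(weights, x))
            error = 1.0 / (1.0 + math.exp(-z)) - y  # prediction minus label
            weights = [w - lr * error * v for w, v in zip(weights, x)]
            bias -= lr * error
    return weights, bias

def classify(image, weights, bias):
    """Label a new image using the frozen features and the trained head."""
    x = extract_features(image)
    return 1 if bias + sum(w * v for w, v in zip(weights, x)) > 0 else 0

# Only 40 labeled images are used for training, in the spirit of the
# 30-50 microscopy images mentioned above.
rng = random.Random(0)
train_labels = [i % 2 for i in range(40)]
train_images = [make_image(y, rng) for y in train_labels]
weights, bias = train_head(
    [extract_features(im) for im in train_images], train_labels
)
```

Because only the handful of classifier weights are learned, a small labeled set can be enough. With real micrographs, the frozen stage would be a network pretrained on natural images rather than these toy filters.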