Google AI Introduces FLAN: An Instruction-Tuned Generalizable Language (NLP) Model To Perform Zero-Shot Tasks

To generate meaningful text, a machine learning model needs a great deal of knowledge about the world and the ability to abstract from it. Language models trained for this purpose acquire more of this knowledge automatically as they grow in scale, but it remains unclear how to unlock that knowledge and apply it to specific real-world tasks.

Fine-tuning is one well-established method for doing so. It involves training a pretrained model such as BERT or T5 on a labeled dataset to adapt it to a downstream task. However, it requires a large number of training examples and a separate set of stored model weights for each downstream task, which is not always feasible, especially for large models.

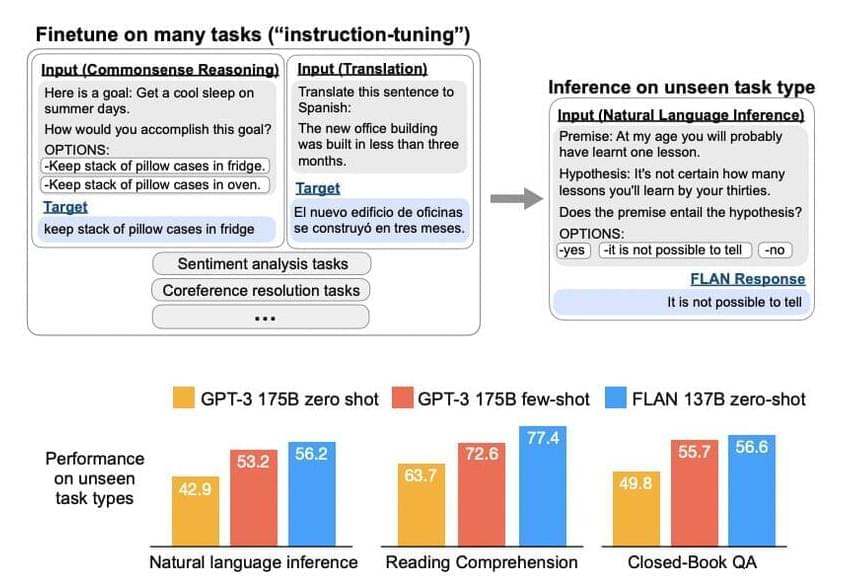

A recent Google study examines a simple technique called instruction fine-tuning, or instruction tuning for short. It entails fine-tuning a model to make it better at performing NLP (natural language processing) tasks in general, rather than at one specific task.
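
To make the idea concrete, here is a minimal Python sketch of the data-preparation side of instruction tuning: labeled examples from several tasks are rewritten as natural-language instructions and mixed into a single training set, so that one model is fine-tuned on all of them rather than once per task. The templates, task names, example rows, and the helper functions `to_instruction_example` and `build_mixture` are all hypothetical and chosen for illustration; they are not taken from the Google study, and the actual fine-tuning step is omitted.

```python
import random

# Hypothetical instruction templates (illustrative only, not from the study).
NLI_TEMPLATES = [
    "Premise: {premise}\nHypothesis: {hypothesis}\n"
    "Does the premise entail the hypothesis? Answer yes or no.",
    "Read this: {premise}\nCan we conclude that \"{hypothesis}\"? Answer yes or no.",
]
SENTIMENT_TEMPLATES = [
    "Review: {review}\nIs this review positive or negative?",
    "What is the sentiment of the following review?\n{review}",
]

# Tiny made-up labeled datasets: (template list, [(fields, target), ...]).
TASKS = [
    (NLI_TEMPLATES, [
        ({"premise": "The cat sat on the mat.",
          "hypothesis": "An animal is on the mat."}, "yes"),
        ({"premise": "The shop is closed today.",
          "hypothesis": "The shop is open today."}, "no"),
    ]),
    (SENTIMENT_TEMPLATES, [
        ({"review": "A warm, funny, well-acted film."}, "positive"),
    ]),
]


def to_instruction_example(templates, fields, target, rng):
    """Render one labeled example as an instruction-style input/target pair."""
    template = rng.choice(templates)  # vary the phrasing across examples
    return {"input": template.format(**fields), "target": target}


def build_mixture(tasks, seed=0):
    """Convert every task's examples to instructions and shuffle them together."""
    rng = random.Random(seed)  # seeded so the mixture is reproducible
    examples = [
        to_instruction_example(templates, fields, target, rng)
        for templates, rows in tasks
        for fields, target in rows
    ]
    rng.shuffle(examples)
    return examples


mixture = build_mixture(TASKS)
for example in mixture:
    print(example["input"], "->", example["target"])
```

The resulting list of input/target pairs could then be passed to any standard sequence-to-sequence fine-tuning loop. The point of the sketch is only that a single set of weights is trained on many tasks phrased as instructions, which is what distinguishes this setup from per-task fine-tuning.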