Deep learning algorithms, such as convolutional neural networks (CNNs), have achieved remarkable results on a variety of tasks, including recognizing specific people or objects in images. One task that computer scientists have often tackled with deep learning is vision-based human action recognition (HAR), which entails identifying the actions of people captured in images or videos.

Researchers at HITEC University and Foundation University Islamabad in Pakistan, Sejong University and Chung-Ang University in South Korea, the University of Leicester in the UK, and Prince Sultan University in Saudi Arabia have recently developed a new CNN for recognizing human actions in videos. This CNN, presented in a paper published in Springer's Multimedia Tools and Applications journal, was trained to distinguish between several human actions, including boxing, clapping, waving, jogging, running and walking.

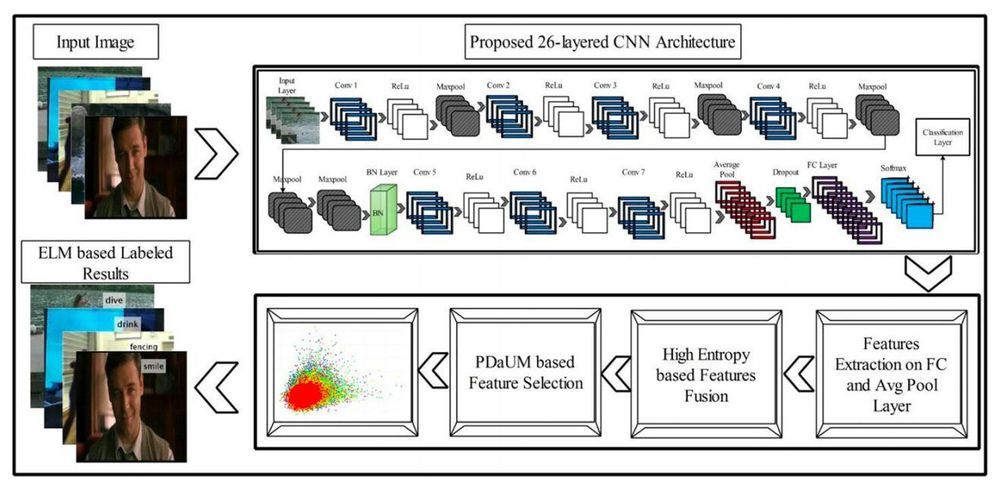

“We designed a new 26-layered convolutional neural network (CNN) architecture for accurate complex action recognition,” the researchers wrote in their paper. “The features are extracted from the global average pooling layer and fully connected (FC) layer and fused by a proposed high entropy-based approach.”
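
The quote does not spell out how the entropy-based fusion works, so the following is only a minimal sketch of one plausible reading: concatenate the feature vectors from the two layers, estimate the Shannon entropy of each feature across the samples, and keep the highest-entropy ones. The function names, the histogram-based entropy estimate, the bin count and the `keep` parameter are all assumptions made for illustration and are not taken from the paper.

```python
import numpy as np

def feature_entropy(features, bins=16):
    """Estimate the Shannon entropy (in bits) of each feature column
    from a histogram of its values across samples. (Assumed estimator.)"""
    n_samples, n_features = features.shape
    entropies = np.empty(n_features)
    for j in range(n_features):
        counts, _ = np.histogram(features[:, j], bins=bins)
        p = counts[counts > 0] / n_samples  # empirical bin probabilities
        entropies[j] = -np.sum(p * np.log2(p))
    return entropies

def fuse_high_entropy(gap_feats, fc_feats, keep):
    """Hypothetical fusion: concatenate the two feature sets, then keep
    the `keep` columns with the highest estimated entropy."""
    fused = np.concatenate([gap_feats, fc_feats], axis=1)
    ent = feature_entropy(fused)
    top = np.sort(np.argsort(ent)[::-1][:keep])  # selected column indices
    return fused[:, top], top

# Synthetic stand-ins for features from the pooling and FC layers.
rng = np.random.default_rng(0)
gap = rng.normal(size=(200, 8))   # pretend global-average-pooling features
fc = rng.normal(size=(200, 4))    # pretend fully connected features
fc[:, 0] = 1.0                    # a constant column carries no information
fused, idx = fuse_high_entropy(gap, fc, keep=6)
print(fused.shape, idx)
```

In this toy run the constant column (index 8 after concatenation) has zero entropy and is dropped, which is the intuition behind favoring high-entropy features: values that barely vary across samples are unlikely to help a classifier tell actions apart.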