Particle physicists are planning the successor to CERN’s Large Hadron Collider – but how will they deal with the deluge of data from a future machine and the proliferation of theoretical models? Michela Massimi explains why a new scientific methodology called “model independence” could hold the answer.

It’s been an exciting few months for particle physicists. In May more than 600 researchers gathered in Granada, Spain, to discuss the European Strategy for Particle Physics, while in June CERN held a meeting in Brussels, Belgium, to debate plans for the Future Circular Collider (FCC). This giant machine – 100 km in circumference and earmarked for the Geneva lab – is just one of several projects (including those in astroparticle physics and machine learning) that particle physicists are working on to explore the frontiers of high-energy physics.

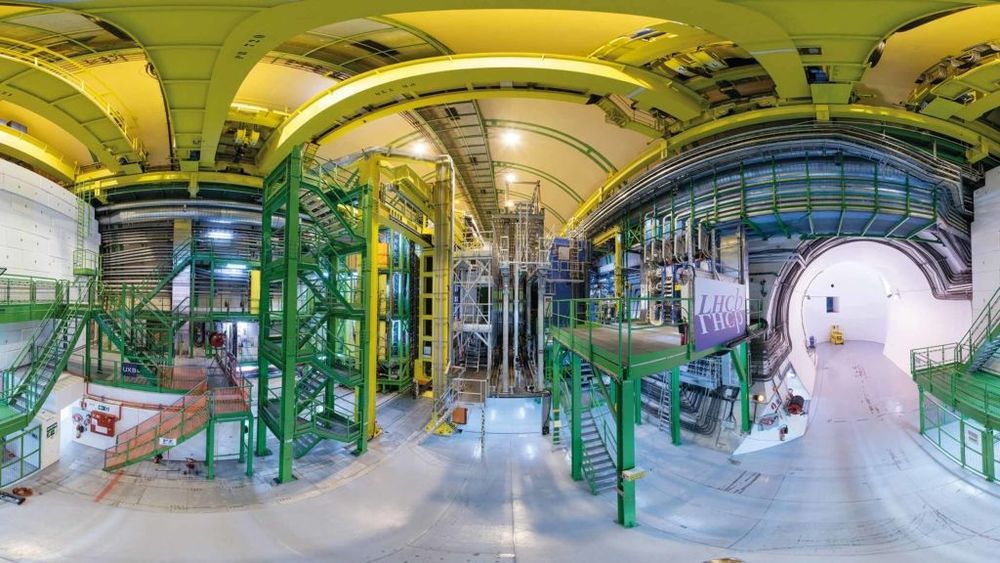

CERN’s Large Hadron Collider (LHC) has been collecting data from vast numbers of proton–proton collisions since 2010 – first at energies of 7 TeV and 8 TeV, and then at 13 TeV during its second run. These data enabled scientists on the ATLAS and CMS experiments at the LHC to discover the Higgs boson in 2012, and have also shed light on other vital aspects of the Standard Model of particle physics.