Even as autonomous robots get better at doing things on their own, there will still be plenty of circumstances where humans need to step in and take control. New software developed by Brown University computer scientists lets users control robots remotely using virtual reality, which helps them become immersed in a robot’s surroundings despite being physically miles away.

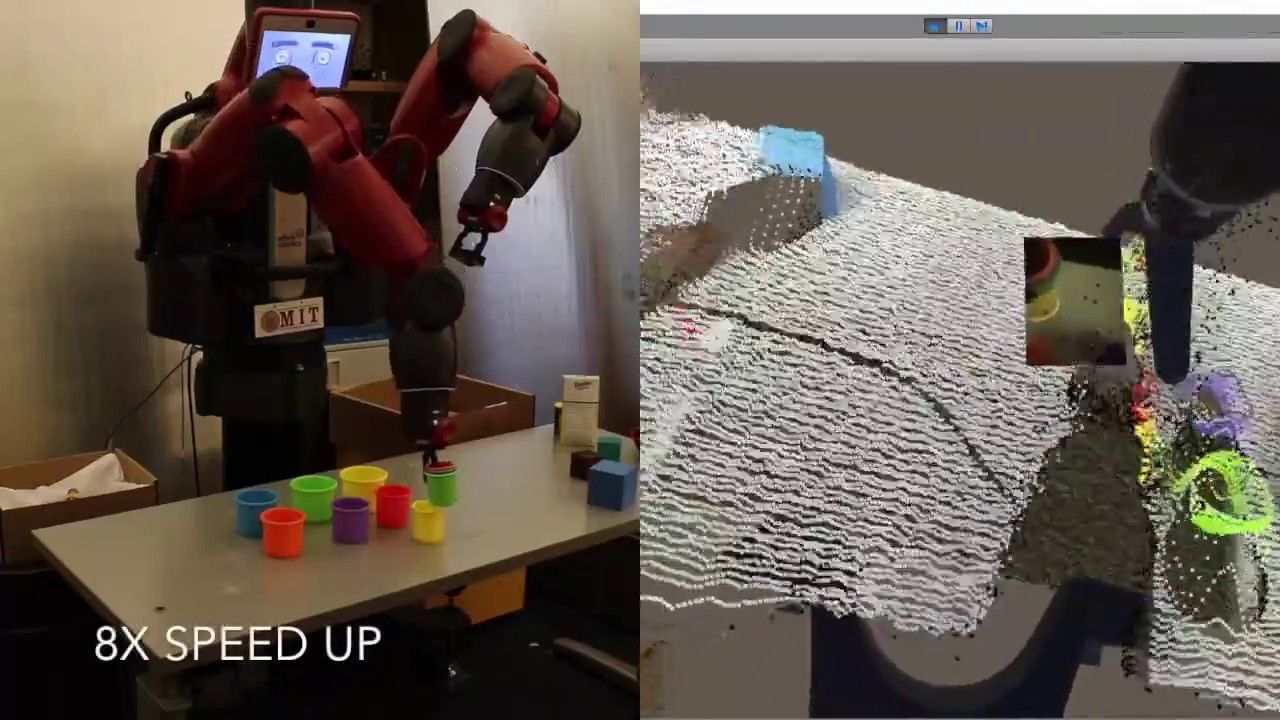

The software connects a robot’s arms and grippers as well as its onboard cameras and sensors to off-the-shelf virtual reality hardware via the internet. Using handheld controllers, users can control the position of the robot’s arms to perform intricate manipulation tasks just by moving their own arms. Users can step into the robot’s metal skin and get a first-person view of the environment, or can walk around the robot to survey the scene in the third person—whichever is easier for accomplishing the task at hand. The data transferred between the robot and the virtual reality unit is compact enough to be sent over the internet with minimal lag, making it possible for users to guide robots from great distances.
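To give a rough sense of how small such control data can be, here is a minimal sketch of one hand-pose update packed into a fixed-size binary message. The message layout, field order, and function names are assumptions made purely for illustration; the article does not describe the actual format used by the Brown system.

```python
import struct

# Hypothetical wire format, assumed for illustration only (not the Brown system's):
#   3 floats: hand position in meters (x, y, z)
#   4 floats: hand orientation as a quaternion (x, y, z, w)
#   1 float : gripper opening, from 0.0 (closed) to 1.0 (open)
POSE_FORMAT = "<8f"  # little-endian, eight 32-bit floats


def pack_pose(position, quaternion, gripper):
    """Serialize one controller pose update into bytes."""
    return struct.pack(POSE_FORMAT, *position, *quaternion, gripper)


def unpack_pose(payload):
    """Recover (position, quaternion, gripper) from a packed update."""
    values = struct.unpack(POSE_FORMAT, payload)
    return values[0:3], values[3:7], values[7]


if __name__ == "__main__":
    message = pack_pose((0.5, 0.0, 0.25), (0.0, 0.0, 0.0, 1.0), 1.0)
    print(len(message))  # 32 bytes per update
```

Under this assumed layout, each update is 32 bytes, so even dozens of updates per second add up to only a few kilobytes per second, far less than streaming video of the scene would require.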

“We think this could be useful in any situation where we need some deft manipulation to be done, but where people shouldn’t be,” said David Whitney, a graduate student at Brown who co-led the development of the system. “Three examples we were thinking of specifically were in defusing bombs, working inside a damaged nuclear facility or operating the robotic arm on the International Space Station.”