It’s looking increasingly likely that artificial intelligence (AI) will be the harbinger of the next technological revolution. When it develops to the point where it can learn, think, and even “feel” without human input, becoming a truly “smart” AI, everything we know will change, almost overnight.

That’s why it’s so interesting to keep track of major milestones in the development of the AIs that exist today, including Google’s DeepMind neural network. It’s already besting humanity in the gaming world, and a new in-house study reveals that Google is decidedly unsure whether the AI prefers cooperative behaviors over aggressive, competitive ones.

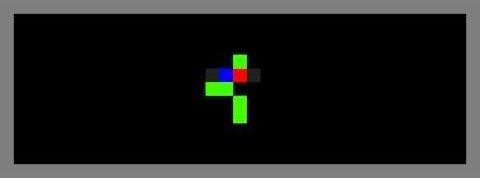

A team of Google researchers set up two relatively simple scenarios to test whether neural networks are more likely to work together or destroy each other when faced with a resource problem. The first scenario, called “Gathering”, involved two DeepMind agents, Red and Blue, tasked with harvesting green “apples” within a confined space.