Ask a member of Facebook’s growth team what feature played the biggest role in getting the company to a billion daily users, and they’ll likely tell you it was photos. The endless stream of pictures, which users have been able to upload since 2005, a year after Facebook’s launch, makes the social network irresistible to a global audience. It’s difficult to imagine Facebook without photos. Yet for millions of blind and visually impaired people, that’s been the reality for over a decade.

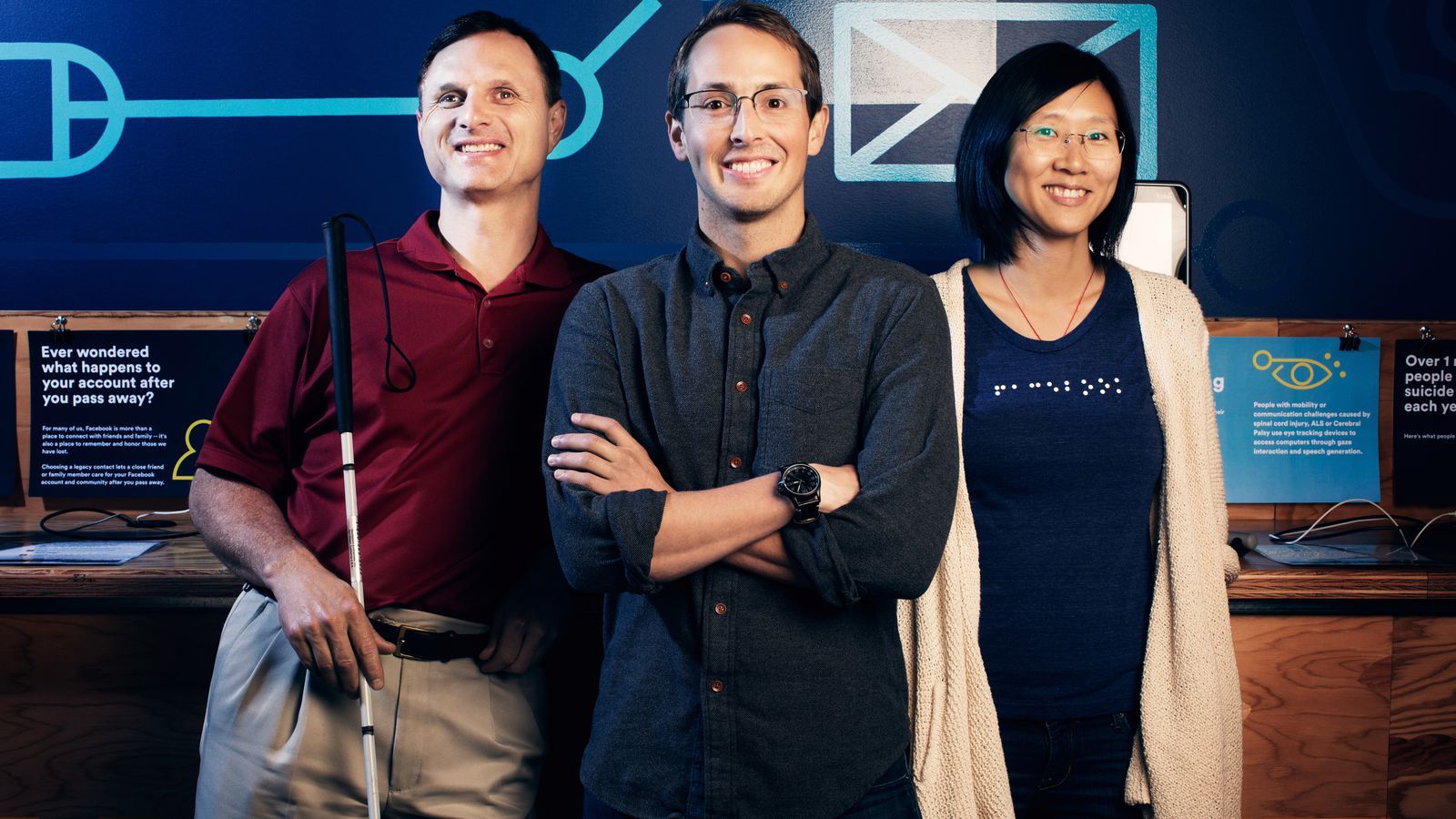

Not anymore. Today Facebook will begin automatically describing the content of photos to blind and visually impaired users. Called “automatic alternative text,” the feature was created by Facebook’s 5-year-old accessibility team. Led by Jeff Wieland, a former user researcher in Facebook’s product group, the team previously built closed captioning for videos and implemented an option to increase the default font size on Facebook for iOS, a feature 10 percent of Facebook users take advantage of.

Automatic alt text, which is coming to iOS today and later to Android and the web, recognizes objects in photos using machine learning, a branch of artificial intelligence in which algorithms learn to make predictions from examples. If you show a piece of software enough pictures of a dog, for example, in time it will be able to identify a dog in a new photograph. Automatic alt text identifies things in Facebook photos, then uses the iPhone’s VoiceOver feature to read descriptions of the photos out loud to users. While still in its early stages, the technology can reliably identify concepts in categories including transportation (“car,” “boat,” “airplane”), nature (“snow,” “ocean,” “sunset”), sports (“basketball court”), and food (“sushi”). It can also describe people (“baby,” “smiling,” “beard”) and identify a selfie.
