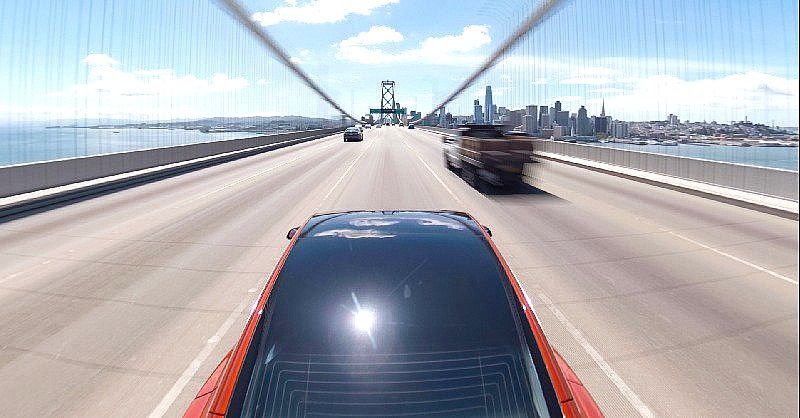

Tesla’s Full Self-Driving suite continues to improve, as shown in a recent video of a Model 3 safely shifting away from a makeshift lane of construction cones while using Navigate on Autopilot.

Tesla owner-enthusiast Jeremy Greenlee was traveling through a highway construction zone in his Model 3. To the vehicle’s left, construction cones marked off a makeshift lane.

To avoid any possible collision with the cones, the vehicle used the driver-assist system to automatically shift one lane to the right. The maneuver removed the risk of contact with the dense construction cones to the car’s left, which could have caused hundreds of dollars in cosmetic damage to the vehicle.