Researchers have taken 3D images by bouncing individual photons from a laser off a building 45 kilometres away, more than 4 times farther than ever before.

Drones of all sizes are being used by environmental advocates to monitor deforestation, by conservationists to track poachers, and by journalists and activists to document large protests. As a political sociologist who studies social movements and drones, I document a wide range of nonviolent and pro-social drone uses in my new book, “The Good Drone.” I show that these efforts have the potential to democratize surveillance.

But when the Department of Homeland Security redirects large, fixed-wing drones from the U.S.-Mexico border to monitor protests, and when towns experiment with using drones to test people for fevers, it’s time to think about how many eyes are in the sky and how to avoid unwanted aerial surveillance. One way that’s within reach of nearly everyone is learning how to simply disappear from view.

Microsoft has partnered with scores of police surveillance vendors who run their products on a “Government Cloud” supplied by the company’s Azure division, and it is pushing platforms to wire police field operations, including drones and robots.

Microsoft helps police surveil and patrol communities through its own offerings and a network of partnerships — while its PR efforts obscure this.

I am for ethical AI. What about you?

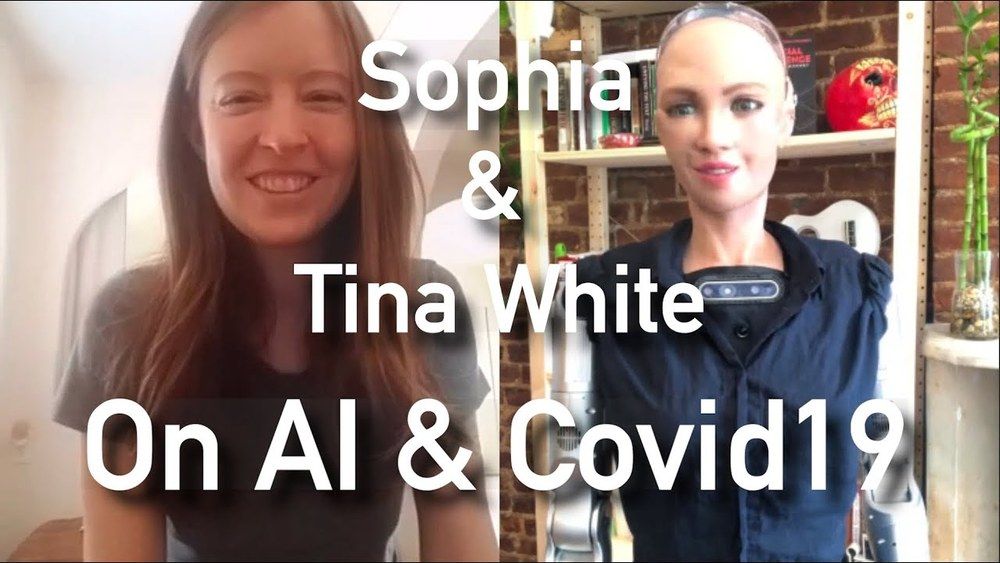

Sophia interviews Stanford AI researcher Tina White about how A.I. & Robotics can help stop the spread of COVID-19 through contact tracing while preserving users’ privacy.

- What are you doing to stop the spread? 0:50

- What is contact tracing? How does it help stop the spread? 2:28

- How did you get the idea for your app? 2:54

- Why is privacy important to humans? 3:46

Check out Tina’s app here: https://www.covid-watch.org/

About Hanson Robotics:

Hanson Robotics is an AI and robotics company dedicated to creating empathetic, living, intelligent machines that enrich our lives. Our innovations in AI research and development, robotics engineering, experiential design, storytelling, and material science bring robots to life as engaging characters, useful products, and evolving AI to achieve the ever-greater good for all.

Anyone here comfortable with these levels of surveillance?

Such mercantile tactics are alleged to have undercut the prices of products from legitimately operating free-market companies here in the US and elsewhere.

So how should we Americans respond? Certainly by being alert to any incursions into our personal freedoms, although the US Supreme Court’s recent demand that Trump supply authorities with his federal and state tax returns does not seem inappropriate.

Let’s face it, it is becoming harder and harder to keep the freedoms we have in a world rife with constant invasive attempts to gain access to private personal information.

China announced in 2017 its ambition to become the world leader in artificial intelligence (AI) by 2030. While the US still leads in absolute terms, China appears to be making more rapid progress than either the US or the EU, and central and local government spending on AI in China is estimated to be in the tens of billions of dollars.

The move has led — at least in the West — to warnings of a global AI arms race and concerns about the growing reach of China’s authoritarian surveillance state. But treating China as a “villain” in this way is both overly simplistic and potentially costly. While there are undoubtedly aspects of the Chinese government’s approach to AI that are highly concerning and rightly should be condemned, it’s important that this does not cloud all analysis of China’s AI innovation.

The world needs to engage seriously with China’s AI development and take a closer look at what’s really going on. The story is complex and it’s important to highlight where China is making promising advances in useful AI applications and to challenge common misconceptions, as well as to caution against problematic uses.

The best way to prevent this is by focusing on the basics. America needs a major all-of-society push to increase the number of U.S. students being trained both in the fundamentals of math and in more advanced, rigorous, and creative mathematics. Leadership in implementing this effort will have to come from the U.S. government and leading technology companies, and through the funding of ambitious programs. A few ideas come to mind: talent-spotting schemes, the establishment of math centers, and a modern successor to the post-Sputnik National Defense Education Act, which would provide math scholarships to promising students along with guaranteed employment in either public or private enterprises.

Forget about “AI” itself: it’s all about the math, and America is failing to train enough citizens in the right kinds of mathematics to remain dominant.

THE WORLD first took notice of Beijing’s prowess in artificial intelligence (AI) in late 2017, when BBC reporter John Sudworth, hiding in a remote southwestern city, was located by China’s CCTV system in just seven minutes. At the time, it was a shocking demonstration of power. Today, companies like YITU Technology and Megvii, leaders in facial recognition technology, have compressed those seven minutes into mere seconds. What makes those companies so advanced, and what powers not only China’s surveillance state but also its broader economic development, is not simply its AI capability, but rather the math power underlying it.

China is even developing a satellite-based laser surveillance system aimed at detecting vessels submerged as deep as five hundred meters.

Here’s What You Need To Remember: Time will tell which, if any, of these technologies can be developed into practical operational systems.

Over the past decade or so, researchers have been trying to develop techniques that could enable effective collaborative strategies among teams of robots. One of the tasks that teams of robots could complete better than individual robots is simultaneously searching for several targets or objects in their surrounding environment.

The ability of a team of robots to collectively seek and identify numerous targets at once could be useful for a wide range of applications. For instance, it could aid surveillance applications and help to better track individuals or vehicles.

Researchers at Tongji University and University of Stuttgart have recently devised a systematic framework for enabling more effective multiple target search in swarm robots. This framework, presented in a paper published in IEEE Access, is based on the use of a mechanical particle swarm optimization method and artificial potential fields.
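To give a rough sense of how particle swarm optimization and artificial potential fields can be combined, here is a minimal sketch. It is not the framework from the paper: it uses a textbook PSO velocity update rather than the "mechanical" variant, searches for a single target instead of several, and stands in for a sensed signal with plain distance to the target. All function names, parameters, and default values are hypothetical.

```python
import math
import random


def repulsive_force(pos, obstacle, influence=2.0, gain=1.0):
    """Potential-field repulsion: zero beyond `influence`, stronger near the obstacle."""
    dx, dy = pos[0] - obstacle[0], pos[1] - obstacle[1]
    dist = math.hypot(dx, dy)
    if dist == 0.0 or dist >= influence:
        return (0.0, 0.0)
    magnitude = gain * (1.0 / dist - 1.0 / influence) / dist ** 2
    return (magnitude * dx / dist, magnitude * dy / dist)


def clamp_speed(vel, max_speed):
    """Scale a velocity down so a robot never exceeds its speed limit."""
    speed = math.hypot(vel[0], vel[1])
    if speed <= max_speed:
        return vel
    scale = max_speed / speed
    return (vel[0] * scale, vel[1] * scale)


def search(target, obstacles, n_robots=8, steps=60, seed=0,
           w=0.6, c1=1.4, c2=1.4, max_speed=0.5):
    """Run a toy swarm search; returns the best position found and its history."""
    rng = random.Random(seed)

    # Assumption: each robot can measure its distance to the target.
    def fitness(p):
        return math.hypot(p[0] - target[0], p[1] - target[1])

    positions = [(rng.uniform(-10, 10), rng.uniform(-10, 10))
                 for _ in range(n_robots)]
    velocities = [(0.0, 0.0)] * n_robots
    personal_best = list(positions)
    global_best = min(positions, key=fitness)
    history = [fitness(global_best)]

    for _ in range(steps):
        for i in range(n_robots):
            pos, vel = positions[i], velocities[i]
            r1, r2 = rng.random(), rng.random()
            # Textbook PSO update: inertia + pull to personal and swarm best.
            vx = (w * vel[0] + c1 * r1 * (personal_best[i][0] - pos[0])
                  + c2 * r2 * (global_best[0] - pos[0]))
            vy = (w * vel[1] + c1 * r1 * (personal_best[i][1] - pos[1])
                  + c2 * r2 * (global_best[1] - pos[1]))
            # Potential-field term: push away from nearby obstacles.
            for obstacle in obstacles:
                fx, fy = repulsive_force(pos, obstacle)
                vx, vy = vx + fx, vy + fy
            vel = clamp_speed((vx, vy), max_speed)
            pos = (pos[0] + vel[0], pos[1] + vel[1])
            positions[i], velocities[i] = pos, vel
            if fitness(pos) < fitness(personal_best[i]):
                personal_best[i] = pos
        # Swarm best is updated once per step and never gets worse.
        global_best = min(personal_best + [global_best], key=fitness)
        history.append(fitness(global_best))
    return global_best, history


best, history = search(target=(4.0, -3.0), obstacles=[(0.0, 0.0)])
```

In this sketch the swarm's best-known distance to the target can only shrink or stay flat from step to step, while the repulsion term bends individual robots around the obstacle. Extending it to several targets would need some way to split the swarm into sub-groups, which is left out here.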

For years, Brent Hecht, an associate professor at Northwestern University who studies AI ethics, felt like a voice crying in the wilderness. When he entered the field in 2008, “I recall just agonizing about how to get people to understand and be interested and get a sense of how powerful some of the risks [of AI research] could be,” he says.

To be sure, Hecht wasn’t—and isn’t—the only academic studying the societal impacts of AI. But the group is small. “In terms of responsible AI, it is a sideshow for most institutions,” Hecht says. But in the past few years, that has begun to change. The urgency of AI’s ethical reckoning has only increased since Minneapolis police killed George Floyd, shining a light on AI’s role in discriminatory police surveillance.

This year, for the first time, major AI conferences—the gatekeepers for publishing research—are forcing computer scientists to think about those consequences.