Tardigrades have previously survived in the vacuum of space.

Indestructible water-dwelling creatures called tardigrades will be subjected to a series of experiments at the International Space Station to reveal how they survive in extreme environments.

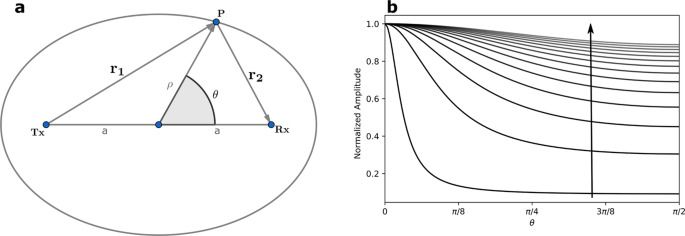

Advanced uses of time in image rendering and reconstruction have been the focus of much scientific research in recent years. The motivation comes from the equivalence between space and time given by the finite speed of light c. This equivalence leads to correlations between the time evolution of electromagnetic fields at different points in space. Applications exploiting such correlations, known as time-of-flight (ToF) [1] and light-in-flight (LiF) [2] cameras, operate in various regimes from radio [3,4] to optical [5] frequencies. Time-of-flight imaging focuses on reconstructing a scene by measuring delayed stimulus responses via continuous wave, impulses or pseudo-random binary sequence (PRBS) codes [1]. Light-in-flight imaging, also known as transient imaging [6], explores light transport and detection [2,7]. The combination of ToF and LiF has recently brought higher accuracy and detail to the reconstruction process, especially in non-line-of-sight images, by including higher-order scattering and physical processes such as Rayleigh–Sommerfeld diffraction [8] in the modeling. However, these methods require experimental characterization of the scene followed by large computational overheads, and they produce images at low frame rates in the optical regime.

In the radio-frequency (RF) regime, 3D images at frame rates of 30 Hz have been produced with an array of 256 wide-band transceivers [3]. Microwave imaging has the additional capability of sensing through optically opaque media such as walls. Nonetheless, synthetic aperture radar reconstruction algorithms such as the one proposed in ref. 3 required each transceiver in the array to operate individually, which leaves room to improve image frame rates through continuous transmit-receive captures. Constructions using beamforming face similar challenges [9]: a narrow focused beam scans the scene using an array of antennas and frequency-modulated continuous wave (FMCW) techniques.

In this article, we develop an inverse light transport model [10] for microwave signals. The model uses a spatiotemporal mask generated by multiple sources, each emitting a different PRBS code, and a single detector, all operating in continuous synchronous transmit-receive mode. This model allows image reconstructions with capture times on the order of microseconds and no prior scene knowledge. For first-order reflections, the algorithm reduces to a single dot product between the reconstruction matrix and the captured signal, and can be executed in a few milliseconds. We demonstrate this algorithm through simulations and measurements performed using realistic scenes in a laboratory setting. We then use the second-order terms of the light transport model to reconstruct scene details not captured by the first-order terms.

We start by estimating the information capacity of the scene and develop the light transport equation for the transient imaging model with arguments borrowed from basic information and electromagnetic field theory. Next, we describe the image reconstruction algorithm as a series of approximations corresponding to multiple scatterings of the spatiotemporal illumination matrix. Specifically, we show that in the first-order approximation, the value of each pixel is the dot product between the captured time series and a unique time signature generated by the spatiotemporal electromagnetic field mask. Next, we show how the second-order approximation generates hidden features not accessible in the first-order image. Finally, we apply the reconstruction algorithm to simulated and experimental data and discuss the performance, strengths, and limitations of this technique.
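The first-order step described above, where each pixel is the dot product of the captured time series with that pixel's time signature, can be illustrated with a toy sketch. This is not the authors' implementation. It assumes random ±1 chips as a stand-in for true PRBS codes, integer sample delays applied as circular shifts, and no attenuation, noise, or higher-order scattering. All names (`prbs_code`, `pixel_signature`, `reconstruct_first_order`) and all numeric values are hypothetical.

```python
import random


def prbs_code(length, seed):
    """Return a sequence of +1/-1 chips (a random stand-in for a PRBS code)."""
    rng = random.Random(seed)
    return [rng.choice((-1, 1)) for _ in range(length)]


def pixel_signature(codes, delays, length):
    """Time signature seen at the detector for one pixel.

    Each source's code is circularly shifted by the assumed
    source -> pixel -> detector delay (in samples), then the
    shifted codes are summed.
    """
    return [
        sum(code[(t - d) % length] for code, d in zip(codes, delays))
        for t in range(length)
    ]


def reconstruct_first_order(signatures, captured):
    """Pixel value = normalized dot product of its signature with the capture."""
    image = []
    for sig in signatures:
        norm = sum(s * s for s in sig)
        image.append(sum(s * y for s, y in zip(sig, captured)) / norm)
    return image


# Toy setup (illustrative values only): 4 sources, 6 pixels, 2047 samples.
N_SAMPLES, N_SOURCES, N_PIXELS = 2047, 4, 6
codes = [prbs_code(N_SAMPLES, seed=k) for k in range(N_SOURCES)]

# Assumed delays: distinct for every (pixel, source) pair.
delays = [[1 + 7 * p + 31 * k for k in range(N_SOURCES)] for p in range(N_PIXELS)]
signatures = [pixel_signature(codes, d, N_SAMPLES) for d in delays]

# Ground-truth scene: two reflecting pixels, the rest empty.
reflectivity = [0.0, 1.0, 0.0, 0.0, 0.5, 0.0]

# Single-detector capture: superposition of the reflecting pixels' signatures.
captured = [
    sum(r * sig[t] for r, sig in zip(reflectivity, signatures))
    for t in range(N_SAMPLES)
]

image = reconstruct_first_order(signatures, captured)
brightest = max(range(N_PIXELS), key=lambda i: image[i])
print(brightest, [round(v, 2) for v in image])
```

Because the signatures are nearly uncorrelated with one another, the reconstructed values land close to the true reflectivities, with small cross-talk in the empty pixels. Stacking the normalized signatures as rows gives a reconstruction matrix, so the whole image is a single matrix-vector product with the captured signal.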

It’s hard to see more than a handful of stars from Princeton University, because the lights from New York City, Princeton, and Philadelphia prevent our sky from ever getting pitch black, but stargazers who get into more rural areas can see hundreds of naked-eye stars — and a few smudgy objects, too.

The biggest smudge is the Milky Way itself, the billions of stars that make up our spiral galaxy, which we see edge-on. The smaller smudges don’t mean that you need glasses, but that you’re seeing tightly packed groups of stars. One of the best-known of these “clouds” or “clusters” — groups of stars that travel together — is the Pleiades, also known as the Seven Sisters. Clusters are stellar nurseries where thousands of stars are born from clouds of gas and dust and then disperse across the Milky Way.

For centuries, scientists have speculated about whether these clusters always form tight clumps like the Pleiades, spread over only a few dozen lightyears.

Third-party cookie trackers live to fight for another year.

Google is announcing today that it is delaying its plans to phase out third-party cookies in the Chrome browser until 2023, a year or so later than originally planned. Other browsers like Safari and Firefox have already implemented some blocking against third-party tracking cookies, but Chrome is the most-used desktop browser, and so its shift will be more consequential for the ad industry. That’s why the term “cookiepocalypse” has taken hold.

In the blog post announcing the delay, Google says that the decision to phase out cookies over a “three month period” in mid-2023 is “subject to our engagement with the United Kingdom’s Competition and Markets Authority (CMA).” In other words, it is pinning part of the delay on its need to work more closely with regulators to come up with new technologies to replace third-party cookies for use in advertising.

Few will shed tears for Google, but it has found itself in a very difficult place as the sole company that dominates multiple industries: search, ads, and browsers. The more Google cuts off third-party tracking, the more it harms other advertising companies and potentially increases its own dominance in the ad space. The less Google cuts off tracking, the more likely it is to come under fire for not protecting user privacy. And no matter what it does, it will come under heavy fire from regulators, privacy advocates, advertisers, publishers, and anybody else with any kind of stake in the web.

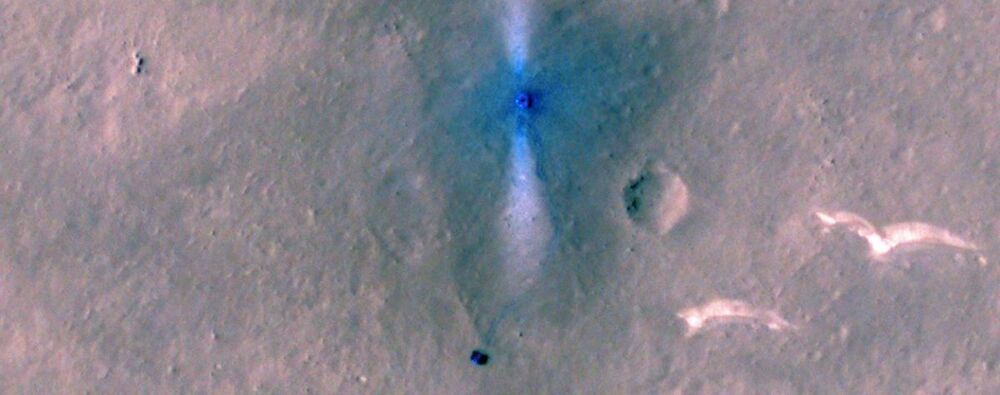

China’s first-ever Mars rover was on the move earlier this month, imagery by a NASA spacecraft shows.

The rover, named Zhurong, is part of Tianwen-1, China’s first fully homegrown Red Planet mission, which arrived in orbit around Mars in February. Zhurong separated from the Tianwen-1 orbiter on May 14 and touched down on the vast plain Utopia Planitia a few hours later.

China’s prime rocket manufacturer has unveiled a roadmap for the country’s future manned Mars exploration missions, which includes not only manned landing missions but also the building of a Mars base.

Wang Xiaojun, head of the state-owned China Academy of Launch Vehicle Technology (CALT), outlined the plans in a speech titled “The Space Transportation System of Human Mars Exploration,” delivered via virtual link at the Global Space Exploration Conference (GLEX 2021), the academy told the Global Times on Wednesday.

After reviewing the success of the Tianwen-1 probe mission, the country’s first interplanetary exploration, which achieved orbiting, landing and roving on the Red Planet all in one go, Wang introduced a three-step plan for future Mars expeditions.