https://vimeo.com/53577644

Economist Carlota Perez talks about the future of ICT.

[youtube_sc url="https://www.youtube.com/watch?v=YhgIY-Vghj4"]

In spite of the popular perception of the state of artificial intelligence, technology has yet to create a robot with the same instincts and adaptability as a human. Humans are born with natural instincts that have evolved over millions of years, and neuroscientist and artificial intelligence expert Dr. Danko Nikolic believes these same tendencies can be instilled in a robot.

“Our biological children are born with a set of knowledge. They know where to learn, they know where to pay attention. Robots simply can not do that,” Nikolic said. “The problem is you can not program it. There’s a trick we can use called AI Kindergarten. Then we can basically interact with this robot kind of like we do with children in kindergarten, but then make robots learn one level lower, at the level of something called machine genome.”

Programming that machine genome would require all of the innate human knowledge that has evolved over thousands of years, Nikolic said. Lacking that knowledge, he said, researchers are starting from scratch. While this form of artificial intelligence is still in its embryonic state, it does have some evolutionary advantages that humans didn’t have.

“By using AI Kindergarten, we don’t have to repeat the evolution exactly the way evolution has done it,” Nikolic said. “This experiment has been done already and the knowledge is already stored in our genes, so we can accelerate tremendously. We can skip millions of failed experiments where evolution has failed already.”

Rather than jumping into logic or facial recognition, researchers must still begin with simple things, like basic reflexes, and build on top of those, Nikolic said. From there, he said, the best researchers can hope for is to come close to the intelligence of an insect or a small bird.

“I think we can develop robots that would be very much biological, like robots, and they would behave as some kind of lower level intelligence animal, like a cockroach or lesser intelligent birds,” he said. “(The robots) would behave the way (animals) do and they would solve problems the way they do. It would have the flexibility and adaptability that they have and that’s much, much more than what we have today.”

As that machine genome continues to evolve, Nikolic compared the potential manipulation of that genome to the selective breeding that ultimately turned ferocious wolves into friendly dogs. He believes the results of robotic evolution will be equally benign, and that any attempt to develop so-called “killer robots” won’t succeed overnight. Just as it takes roughly 20 years for a child to fully develop into an adult, Nikolic expects an equally long process for artificial intelligence to evolve.

Nikolic cited similar past attempts in which manipulating the genome of biological systems produced very benign results. Further, he doesn’t foresee researchers creating something dangerous: if AI develops from a core genome, as his theory holds, it would be next to impossible to change the genome of a machine, or of a biological system, by changing just a few parts.

Going forward, Nikolic still sees a need for caution. Building some form of malevolent artificial intelligence is possible, he said, but the degree of difficulty still makes it unlikely.

“We can not change the genome of machine or human simply by changing a few parts and then having the thing work as we want. Making it mean is much more difficult than developing a nuclear weapon,” Nikolic said. “I think we have things to watch out for, and there should be regulation, but I don’t think this is a place for some major fear… there is no big risk. What we will end up with, I believe, will be a very friendly AI that will care for humans and serve humans and that’s all we will ever use.”

With modern innovations such as artificial intelligence, virtual reality, Wi-Fi, tablet computing and more, it’s easy to look around and conclude that the human brain is a complex and well-evolved organ. But according to author, neuroscientist and psychologist Gary Marcus, the human mind is actually constructed somewhat haphazardly, and there is still plenty of room for improvement.

“I called my book Kluge, which is an old engineer’s word for a clumsy solution. Think of MacGyver kind of duct tape and rubber bands,” Marcus said. “The thesis of that book is that the human mind is a kluge. I was thinking in terms of how this relates to evolutionary psychology and how our minds have been shaped by evolution.”

Marcus argued that evolution is not perfect; instead, it produces “local maxima,” solutions that are good but not necessarily the best possible. As an example, he cites the human spine, which allows us to stand upright but, because it isn’t very well engineered, also gives us back pain.

“You can imagine a better solution with three legs or branches that would distribute the load better, but we have this lousy solution where our spines are basically like a flag pole supporting 70 percent of our body weight,” Marcus said.

“The reason for that is we’re evolved from tetrapods, which have four limbs and distribute their weight horizontally like a picnic table. As we moved upright, we took what was closest in evolutionary space, which is what took the fewest number of genes in order to give us this new kind of system of standing upright. But it’s not what you would have if you designed it from scratch.”

While Marcus’ book addressed the common notion in evolutionary psychology that we have evolved toward the optimal, he also noted that the human mind is shaped by two factors: optimal performance and our brains’ history. To that end, he sees evolution as a probabilistic process that favors nearby genetic solutions, which aren’t necessarily the best ones for a given problem.
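The idea of a “local maximum” can be illustrated with greedy hill climbing, a standard optimization technique used here purely as an analogy, not as a model of real genetics. The search below only accepts single steps to a neighboring design that improves fitness, so it can settle on a peak that is good but not the best. The fitness values and the `hill_climb` function are invented for illustration.

```python
# Toy fitness landscape over integer "designs" 0..9 (values invented for
# illustration). Design 2 is a local peak (5); design 7 is the global peak (9).
fitness = [1, 3, 5, 4, 2, 3, 6, 9, 7, 4]

def hill_climb(start: int) -> int:
    """Greedy search that only moves to an adjacent design with higher fitness."""
    current = start
    while True:
        # Only "nearby" designs are considered: one step left or right.
        neighbors = [n for n in (current - 1, current + 1) if 0 <= n < len(fitness)]
        best = max(neighbors, key=lambda n: fitness[n])
        if fitness[best] <= fitness[current]:
            return current  # no better neighbor: a local maximum
        current = best

print(hill_climb(0))  # 2: stuck on the local peak
print(hill_climb(5))  # 7: reaches the global peak
```

Which peak the search reaches depends entirely on where it starts, loosely echoing Marcus’ point that history constrains which solutions are reachable.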

“A lot of the book was actually about our memories. The argument I made was that, if you really want a system of brain that does the thing humans do, you would want a kind of memory system that we find in computers, which is called location addressable memory,” Marcus explained.

“With location addressable memory, I’m going to store something in location seven or location eight or nine, and then you’re guaranteed to be able to go back to that thing you want when you want it, which is why computer memory is reliable. Our memory is not even remotely reliable. I can forget what I was going to say or forget where I parked my car. Our memories are nothing even close to the theoretical optimum that a computer shows us.”
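Marcus’ contrast can be sketched in a few lines of Python. The first half shows location-addressable storage as he describes it: whatever is written to address 7 comes back exactly when address 7 is read. The second half is a hypothetical contrast added for illustration, not Marcus’ model of human memory: a toy `recall` function that retrieves whichever stored episode best overlaps a cue, so two similar parking memories can be confused.

```python
from typing import List, Optional, Set, Tuple

# Location-addressable memory: each item lives at a numbered address,
# and reading that address returns exactly what was written there.
memory: List[Optional[str]] = [None] * 16

def store(address: int, item: str) -> None:
    memory[address] = item

def load(address: int) -> Optional[str]:
    return memory[address]

store(7, "parked on level 3")
store(8, "parked on level 5")
print(load(7))  # parked on level 3

# Hypothetical contrast (an illustrative assumption, not Marcus' model):
# retrieval by cue, where the stored episode sharing the most features wins.
episodes: List[Tuple[Set[str], str]] = [
    ({"car", "mall", "monday"}, "parked on level 3"),
    ({"car", "mall", "tuesday"}, "parked on level 5"),
]

def recall(cue: Set[str]) -> str:
    # max() returns the first episode with the highest overlap, so an
    # ambiguous cue silently resolves to whichever episode comes first.
    return max(episodes, key=lambda ep: len(ep[0] & cue))[1]

print(recall({"car", "mall"}))             # parked on level 3 (wrong if today is Tuesday)
print(recall({"car", "mall", "tuesday"}))  # parked on level 5
```

The addressed store never confuses the two entries; the cue-based toy only gets the right answer when the cue happens to include the distinguishing detail.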

Enhancing our minds, and our memories, won’t happen overnight, Marcus said. One might have a “brain like a computer” in theory, but he believes a more evolved, computer-like human brain is thousands of years away.

“There is what I call ‘evolutionary inertia’ that says once something is in place, it’s very hard for evolution to change it. If you change one or two genes, you might have an organism that survives. If you change several hundred, most likely, things are gonna’ break.”

In other words, evolution is a supremely resourceful engine. Most evolutionary changes are small, since evolution tends to tweak existing parts rather than start from scratch, which would be a costlier and less efficient solution in a survival-of-the-fittest world.

Given that genetic science hasn’t found a way to rewire the human brain, Marcus proposes that a better route to cognitive enhancement might be found in implants. Rather than taking generations, he believes that advancement could happen in our lifetimes.

“There are now actual cognitive enhancements, if you count motor control substitutes. Neural prostheses are here in limited ways. We know roughly how to make them. There’s a lot of fine detail that needs to be sorted,” Marcus said. “We certainly know how to write computer programs that can translate between interfaces. The big limiting step in improving our memory or enhancing our memory is, we just don’t really understand how information is stored in the brain. I think (a solution for that) is a 50-year project. It’s certainly not a 50,000 year project.”

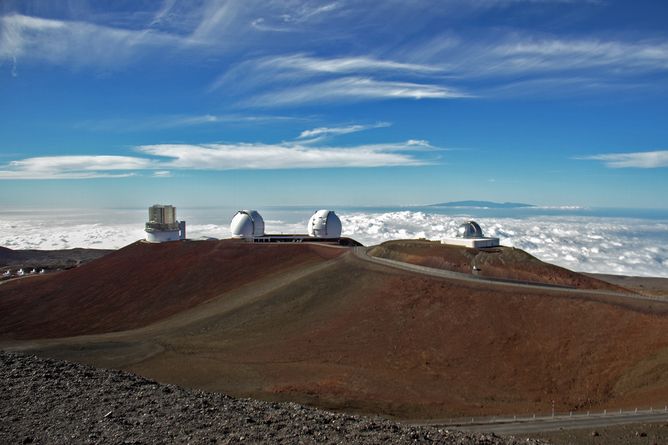

“Should astronomers be allowed to build the TMT on Mauna Kea? This question raises concerns that we, as practising astronomers, see as a reoccurring issue within the scientific community.”

In one of my first articles for Lifeboat,* I provided an experimental methodology for demonstrating (or proving) the instantaneous ‘communication’ between quantum entangled particles. Even though changes to one particle can be provably demonstrated at its far-away twin, the very strange experimental results suggested by quantum theory also demonstrate that you cannot use the simultaneity for any purpose. That is, you can provably pass information instantly, but you cannot study the ‘message’ (a change in state at the recipient) until such time as it could have been transmitted by a classical radio wave.

Now, scientists have conducted an experiment proving that objects can instantaneously affect each other, regardless of the distance between them.

Sorry, Einstein.

Quantum Study Suggests ‘Spooky Action’ Is Real

In a landmark study, scientists at Delft University of Technology in the Netherlands reported that they had conducted an experiment that they say proved one of the most fundamental claims of quantum theory: that objects separated by great distance can instantaneously affect each other’s behavior.

The finding is another blow to one of the bedrock principles of standard physics known as “locality,” which states that an object is directly influenced only by its immediate surroundings. The Delft study, published Wednesday in the journal Nature, lends further credence to an idea that Einstein famously rejected. He said quantum theory necessitated “spooky action at a distance,” and he refused to accept the notion that the universe could behave in such a strange and apparently random fashion.

* The original Lifeboat article, in which I describe an experimental apparatus in lay terms, was reprinted from my blog, A Wild Duck.