CERN has revealed plans for a gigantic successor to the LHC, the giant atom smasher that is the biggest machine ever built. Particle physicists will never stop asking for ever larger big bang machines. But where are the limits for society at large in terms of costs and existential risks?

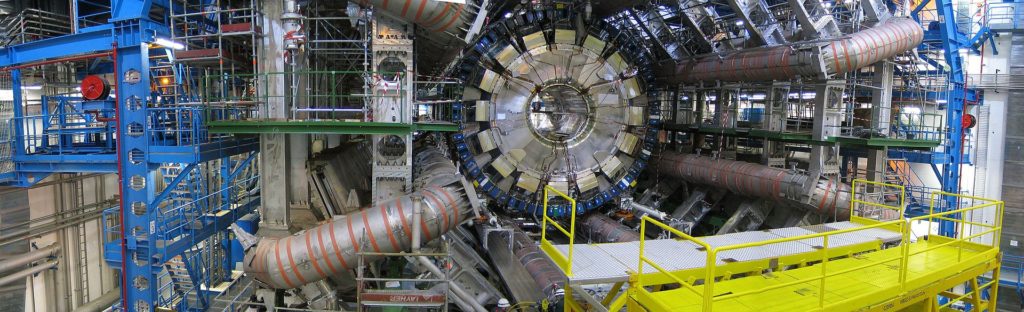

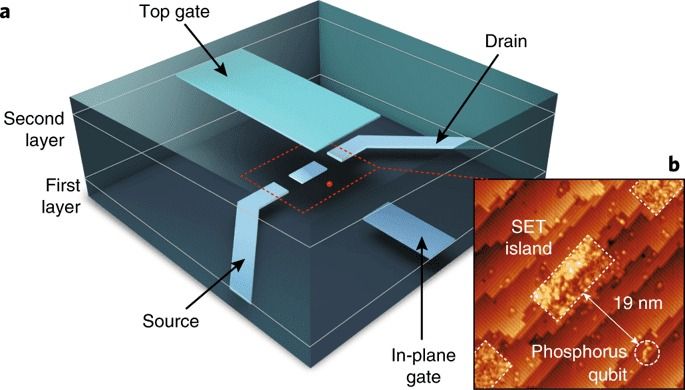

CERN boffins are already conducting a mega experiment at the LHC, a 27 km circular particle collider, at a cost of several billion euros, to study the state of matter as it existed fractions of a second after the big bang and to find the smallest particle possible. The question is how they could ever know they had found it. Now they profess to be a little upset because they could not find any particles beyond the standard model, that is, anything they had not expected. To find such particles, particle physicists would like to build an even larger “Future Circular Collider” (FCC) near Geneva, where CERN enjoys extraterritorial status, with a ring of 100 km, for about 24 billion euros.

Experts point out that this research could be as limitless as the universe itself. The UK’s former Chief Scientific Advisor, Prof Sir David King, told the BBC: “We have to draw a line somewhere otherwise we end up with a collider that is so large that it goes around the equator. And if it doesn’t end there perhaps there will be a request for one that goes to the Moon and back.”

“There is always going to be more deep physics to be conducted with larger and larger colliders. My question is to what extent will the knowledge that we already have be extended to benefit humanity?”

There has been broad discussion about whether high energy nuclear experiments could sooner or later pose an existential risk, for example by producing micro black holes (mBH) or strange matter (strangelets). Strangelets could convert ordinary matter into strange matter and might start a runaway chain reaction once they became stable, theoretically at a mass of around 1000 protons.

CERN has argued that micro black holes could possibly be produced, but that they would not be stable and would evaporate immediately due to “Hawking radiation”, a theoretical process that has never been observed.

Furthermore, CERN argues that similar high energy particle collisions occur naturally in the universe and in the Earth’s atmosphere, so they cannot be dangerous. However, such natural high energy collisions are rare and have only been measured rather indirectly. Fundamentally, nature does not set up LHC experiments: for example, the density of such artificial particle collisions never occurs in Earth’s atmosphere. Even if the cosmic ray argument were legitimate, CERN produces as many high energy collisions in an artificially narrow space as occur naturally in the atmosphere in more than a hundred thousand years. Physicists look quite puzzled when they recalculate it.

Others argue that a particle collider ring would have to be bigger than the Earth to be dangerous.

A study on “Methodological Challenges for Risks with Low Probabilities and High Stakes” was contributed by Lifeboat member Prof Raffaela Hillerbrand et al. Prof Eric Johnson submitted a paper discussing the legal difficulties (lawsuits were either unsuccessful or not admitted) as well as the problem of groupthink within scientific communities. Further important contributions to the existential risk debate came from risk assessment experts Wolfgang Kromp and Mark Leggett, and from R. Plaga, Eric Penrose, Walter Wagner, Otto Roessler, James Blodgett, Tom Kerwick and many more.

Since these discussions can become very sophisticated, there is also a more general approach (see video): According to present research, there are around 10 billion Earth-like planets in our galaxy, the Milky Way, alone. Intelligent life might send radio waves, because they are extremely long lasting, yet we have not received any (the “Fermi paradox”). Theory postulates that there could be a “great filter”, something that wipes out intelligent civilizations at a rather early stage of their technical development. Let that sink in.

All technical civilizations would start to build particle smashers to find out how the universe works, to get as close as possible to the big bang and to hunt for the smallest particle with bigger and bigger machines. But maybe there is a very unexpected effect lurking at a certain threshold that nobody would ever think of and that theory does not predict. Indeed, this could be a logical candidate for the “great filter”, an explanation for the Fermi paradox. If it were, a disastrous big bang machine might not be that big at all, because if civilizations had to construct a collider of epic dimensions to reach that threshold, a lack of resources would have stopped them in most cases.

Ultimately, the CERN member states will have to decide on the budget and the future course.

The underlying political question is: how far are the ordinary citizens who pay for all this willing to go?

LHC-Critique / LHC-Kritik

Network to discuss the risks at experimental subnuclear particle accelerators

LHC-Critique[at]gmx.com

Particle collider safety newsgroup at Facebook: