Many people view the introduction of the first Macintosh computer and its graphical user interface in 1984 as the dawn of creative computing. But if you ask Dr. Nick Montfort, a poet, computer scientist, and assistant professor of Digital Media at MIT, he’ll offer a different direction and definition for creative computing and its origins.

Defining Creative

“Creative Computing was the name of a computer magazine that ran from 1974 through 1985. Even before micro-computing there was already this magazine extolling the capabilities of the computer to teach, to help people learn, help people explore and help them do different types of creative work, in literature, the arts, music and so on,” Montfort said.

“It was a time when people had a lot of hope that computing would enable people personally as artists and creators to do work. It was actually a different time than we’re in now. There are a few people working in those areas, but it’s not as widespread as hoped in the late ’70s or early ’80s.”

Montfort notes that these days many people use the term “artificial intelligence” interchangeably with creative computing. While there are some parallels, Montfort said, what is classically called AI isn’t the same as computational creativity. The difference, he said, is in the results.

“A lot of the ways in which AI is understood is the ability to achieve a particular known objective,” Montfort said. “In computational creativity, you’re trying to develop a system that will surprise you. If it does something you already knew about then, by definition, it’s not creative.”

Even so, Montfort was quick to point out that creative computing can still come from known objectives.

“A lot of good creative computer work comes from doing things we already know computers can do well,” he said. “As a simple example, the difference between a computer as a producer of poetic language and person as a producer of poetic language is, the computer can just do it forever. The computer can just keep reproducing and, (with) that capability to bring it together with images to produce a visual display, now you’re able to do something new. There’s no technical accomplishment, but it’s beautiful nonetheless.”
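To make the idea of a computer that “can just do it forever” concrete, here is a minimal sketch in Python. It is not Montfort’s code or any system he described; the word lists and the function name `endless_lines` are hypothetical, chosen only for illustration. The generator keeps producing short lines for as long as something asks for them.

```python
import itertools
import random

# Hypothetical word lists chosen for illustration; not taken from any real system.
ADJECTIVES = ["quiet", "bright", "hollow", "slow"]
NOUNS = ["river", "signal", "window", "orchard"]
VERBS = ["turns", "waits", "opens", "drifts"]


def endless_lines(seed=None):
    """Yield short lines of generated text without ever stopping."""
    rng = random.Random(seed)  # a seed makes the sequence repeatable
    while True:
        yield f"the {rng.choice(ADJECTIVES)} {rng.choice(NOUNS)} {rng.choice(VERBS)}"


if __name__ == "__main__":
    # Print only the first five lines; the generator itself has no end.
    for line in itertools.islice(endless_lines(seed=1), 5):
        print(line)
```

Nothing here is technically difficult, which is the point of the quote above: the interest comes from the unending stream and from what it is combined with, not from the code.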

Models of Creativity

As a poet himself, Montfort also keeps an eye on another area of creative computing: the study of models of creativity used to imitate human creativity. While the goal of such models may be to replicate human creativity, Montfort has a greater appreciation for end results that don’t necessarily appear human-like.

“Even if you’re using a model of human creativity the way it’s done in computational creativity, you don’t have to try to make something human-like, (even though) some people will try to make human-like poetry,” Montfort said. “I’d much rather have a system that is doing something radically different than human artistic practice and making these bizarre combinations than just seeing the results of imitative work.”

To further illustrate his point, Montfort cited a recent computer-generated novel contest that yielded some extraordinary, and unusual, results. Those novels were nothing close to what a human might have written, he said, but, depending on the eye of the beholder, they at least bode well for the future.

“A lot of the future of creative computing is individual engagement with creative types of programs,” Montfort said. “That’s not just using drawing programs or other facilities to do work or using prepackaged apps that might assist creatively in the process of composition or creation, but it’s actually going and having people work to code themselves, which they can do with existing programs, modifying them, learning about code and developing their abilities in very informal ways.”

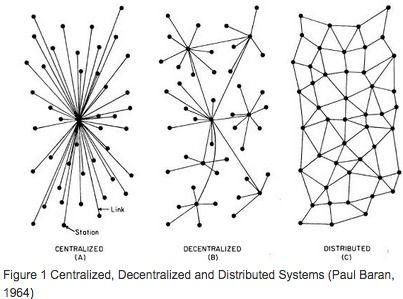

That future of creative computing lies not in industrial creativity or video games, Montfort believes, but rather in a sharing of information and a revisioning of ideas in the many hands and minds of connected programmers.

“One doesn’t have to get a computer science degree or even take a formal class. I think the perspective of free software and open source is very important to the future of creative programming,” Montfort said. “…If people take an academic project and provide their work as free software, that’s great for all sorts of reasons. It allows people to replicate your results, it allows people to build on your research, but also, people might take the work that you’ve done and inflect it in different types of artistic and creative ways.”