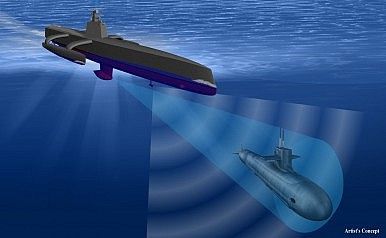

The U.S. Navy’s largest unmanned surface vehicle designed to track Chinese and Russian subs will be christened in April.

Honeywell chosen to develop military’s VR technology.

A virtual reality display panel that could replace windows in military ground vehicles is being developed by Honeywell Aerospace for DARPA.

The University of Minnesota and the University of Central Florida have been awarded projects to develop technology that would let the U.S. military see through walls.

The emerging tech agency appears to have made at least two awards for that program.

The US military is looking for ways to insert microscopic devices into human brains to help folks communicate with machines, like prosthetic limbs, with their minds. And now, DARPA’s saying scientists have found a way to do just that—without ripping open patients’ skulls.

In the DARPA-funded study, researchers at the University of Melbourne have developed a device that could help people use their brains to control machines. Such machines might include assistive technology for patients with physical disabilities or neurological disorders. The results were published in the journal Nature Biotechnology.

In the study, the team inserted a paperclip-sized object into the motor cortexes of sheep. (That’s the part of the brain that oversees voluntary movement.) The device is a twist on traditional stents, those teeny tiny tubes that surgeons stick in vessels to improve blood flow.

“Full exploitation of this information is a major challenge,” officials with the Defense Advanced Research Projects Agency (DARPA) wrote in a 2009 brief on “deep learning.”

“Human observation and analysis of [intelligence, surveillance and reconnaissance] assets is essential, but the training of humans is both expensive and time-consuming. Human performance also varies due to individuals’ capabilities and training, fatigue, boredom, and human attentional capacity.”

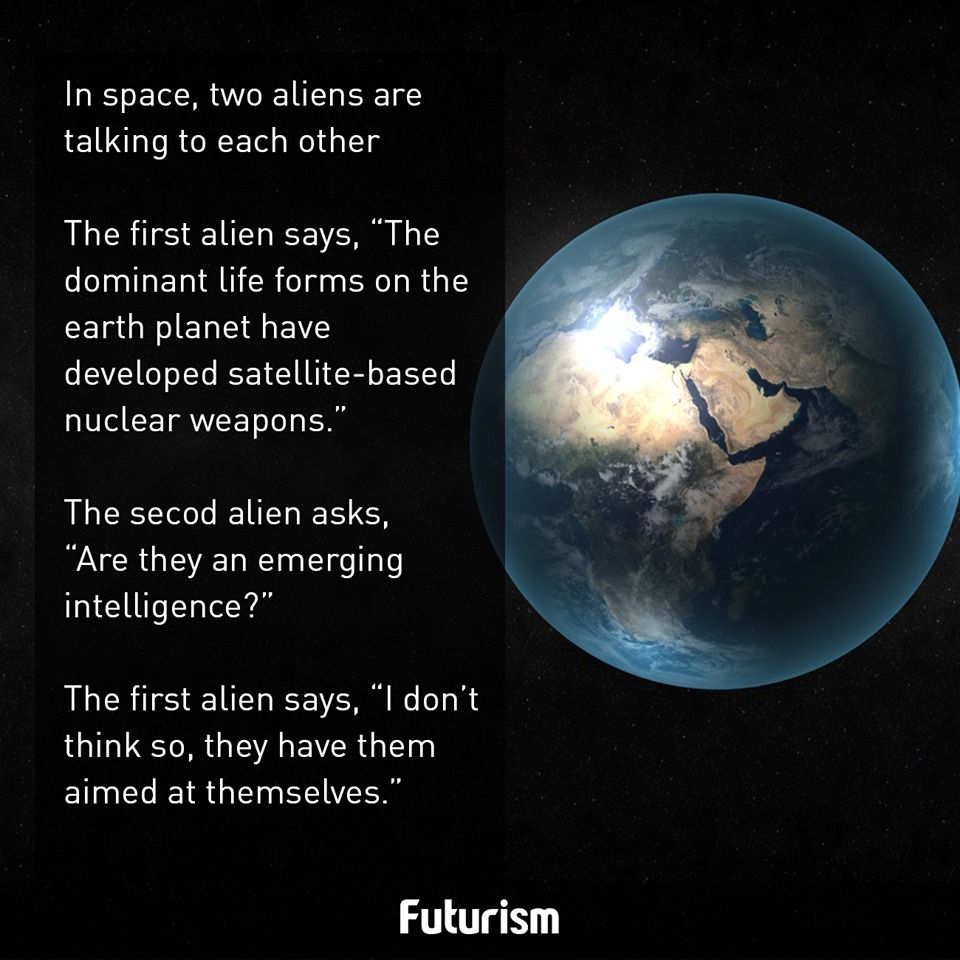

Working with a team of researchers at MIT, DARPA is hoping to take all of that human know-how and shrink it into a processing unit no bigger than your cellphone, using a microchip known as “Eyeriss.” The concept relies on “neural networks,” computerized networks modeled on the workings of the human brain.


A military-funded breakthrough in microchips opens the door to portable deep learning.

Israel’s latest unmanned military technology (2016).

The Future of Life Institute illustrates its objection to automated lethal robots:


The year is 2020, and intense fighting has once again broken out between Israel and Hamas militants based in Gaza. In response to a series of rocket attacks, Israel rolls out a new version of its Iron Dome air defense system. Designed through a large collaboration among defense companies headquartered in the United States, Israel, and India, this third generation of the Iron Dome can act with unprecedented autonomy, and its cutting-edge artificial intelligence lets it analyze a tactical situation using information gathered by an array of onboard sensors and a variety of external data sources. Unlike prior generations of the system, Iron Dome 3.0 is designed not only to intercept and destroy incoming missiles, but also to identify the site from which an incoming missile was launched and automatically launch a precise, guided-missile counterattack against it. The day after the new system is deployed, a missile launched by the system strikes a Gaza hospital far removed from any militant activity, killing scores of Palestinian civilians. Outrage swells within the international community, which demands that whoever is responsible for the atrocity be held accountable. Unfortunately, no one can agree on who that is…

Much has been made in recent months and years about the risks associated with the emergence of artificial intelligence (AI) technologies and, with it, the automation of tasks that once were the exclusive province of humans. But legal systems have not yet developed regulations governing the safe development and deployment of AI systems or clear rules governing the assignment of legal responsibility when autonomous AI systems cause harm. Consequently, it is quite possible that many harms caused by autonomous machines will fall into a legal and regulatory vacuum. The prospect of autonomous weapons systems (AWSs) throws these issues into especially sharp relief. AWSs, like all military weapons, are specifically designed to cause harm to human beings—and lethal harm, at that. But applying the laws of armed conflict to attacks initiated by machines is no simple matter.

The core principles of the laws of armed conflict are straightforward enough. Those most important to the AWS debate are: attackers must distinguish between civilians and combatants; they must strike only when it is actually necessary to a legitimate military purpose; and they must refrain from an attack if the likely harm to civilians outweighs the military advantage that would be gained. But what if the attacker is a machine? How can a machine make the seemingly subjective determination regarding whether an attack is militarily necessary? Can an AWS be programmed to quantify whether the anticipated harm to civilians would be “proportionate?” Does the law permit anyone other than a human being to make that kind of determination? Should it?

Cyber is still a challenge for soldiers on the battlefield.

Editor’s Note: This story has been updated to include comment from an industry official.

WASHINGTON — Cyber vulnerabilities continue to plague the Army’s battlefield communications, according to the Pentagon’s top weapons tester, while the service works to harden its network against cyber attacks.

The Army’s Warfighter Information Network-Tactical (WIN-T), the Mid-Tier Networking Vehicular Radio (MNVR), the Joint Battle Command-Platform (JBC-P) and the Rifleman Radio were all cited as having problematic cybersecurity vulnerabilities in a report released Monday by the Pentagon’s Director, Operational Test & Evaluation (DOT&E), J. Michael Gilmore.