Ever been curious how L1 and L2 caches work? We’re glad you asked. Here, we take a deep dive into the structure and nature of one of computing’s most fundamental designs and innovations.

A NEW scan can predict heart attack risks five years in advance.

Experts say the “game-changing” advance spots problems in one in ten patients currently getting the all-clear.

It scours ordinary CT scans to detect missed warning signs.

Prime Minister Justin Trudeau has proudly declared that Toronto’s Quayside will become “a testbed for new technologies” thanks to its partnership with Google’s urban innovation wing, Sidewalk Labs. But amid new public round tables, key parts of the proposal remain hidden and three government partners have resigned.

In recent days, word about Nvidia’s new Turing architecture started leaking out of the Santa Clara-based company’s headquarters. So it didn’t come as a major surprise that the company today announced during its Siggraph keynote the launch of this new architecture and three new pro-oriented workstation graphics cards in its Quadro family.

Nvidia describes the new Turing architecture as “the greatest leap since the invention of the CUDA GPU in 2006.” That’s a high bar to clear, but there may be a kernel of truth here. These new Quadro RTX chips are the first to feature the company’s new RT Cores. “RT” here stands for ray tracing, a rendering method that basically traces the path of light as it interacts with the objects in a scene. This technique has been around for a very long time (remember POV-Ray on the Amiga?). Traditionally, though, it has been very computationally intensive, even if the results tend to look far more realistic. In recent years, ray tracing got a new boost thanks to faster GPUs and support from the likes of Microsoft, which recently added ray tracing support to DirectX.

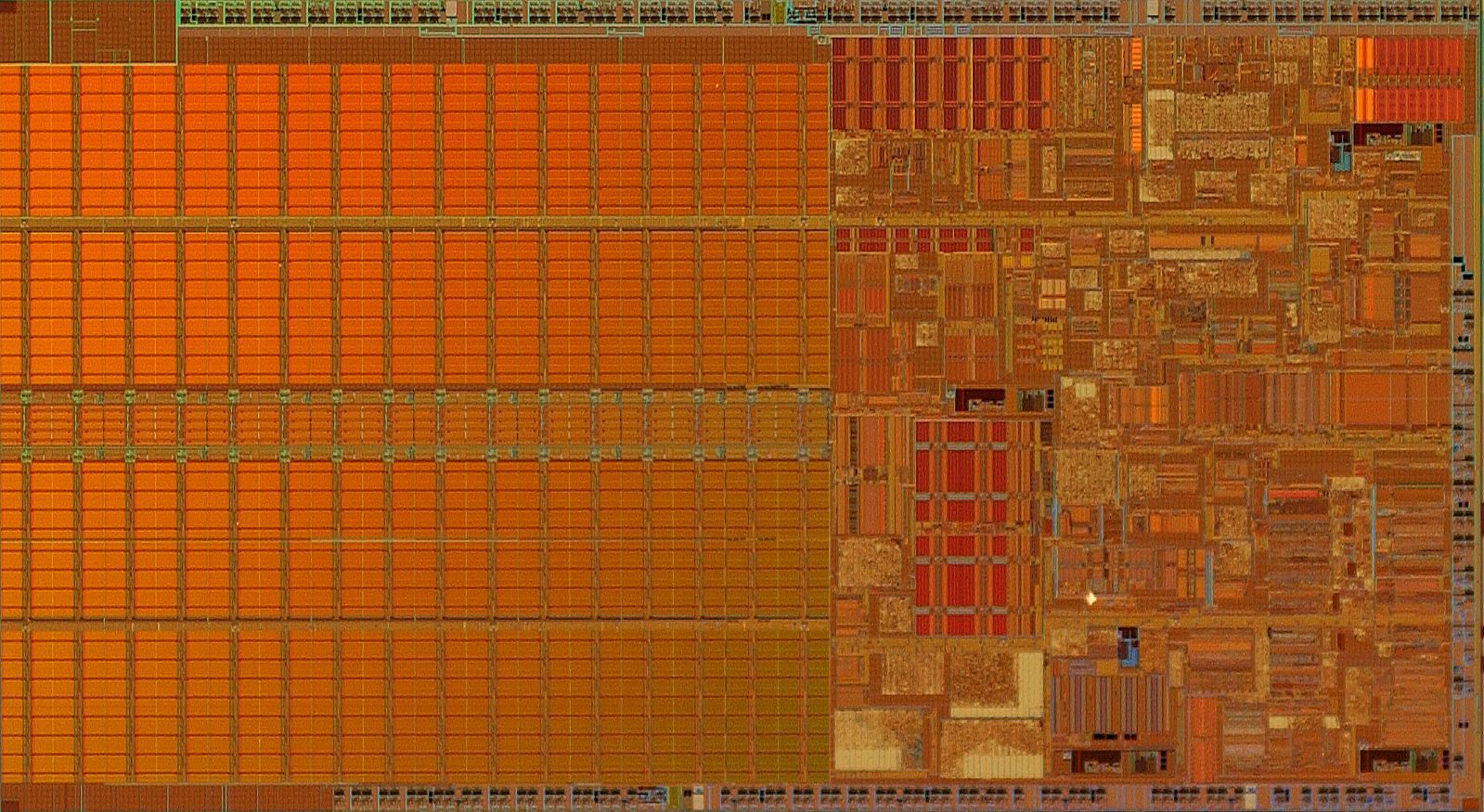

The battle to create the best artificial intelligence chips is underway. Intel is approaching this challenge from its position as a maker of central processing units (CPUs), namely the Xeon microprocessors that dominate the datacenter market. Rival Nvidia is attacking from its position as a maker of graphics processing units (GPUs), and both companies are working on solutions that will handle ground-up AI processing.

Nvidia’s GPUs have already grabbed a good chunk of the market for deep learning neural network solutions, such as image recognition — one of the biggest breakthroughs for AI in the past five years. But Intel has tried to position itself through acquisitions of companies such as Nervana, Mobileye, and Movidius. And when Intel bought Nervana for $350 million in 2016, it also picked up Nervana CEO Naveen Rao.

Rao has a background as both a computer architect and a neuroscientist, and he is now vice president and general manager of the Artificial Intelligence Products Group at Intel. He spoke this week at an event where Intel announced that its Xeon CPUs generated $1 billion in revenue in 2017 for use in AI applications. Rao believes that the overall market for AI chips will reach $8 billion to $10 billion in revenue by 2022.