So long, pseudo-random number generator. Quantum mechanics has brought us true randomness to power our crypto algorithms, and it is strengthening encryption in the cloud, the datacenter, and the Internet of Things.

“Notice for all Mathematicians”: Are you a mathematician who loves complex algorithms? If so, IARPA wants to speak with you.

Last month, the intelligence community’s research arm requested information about training resources that could help artificially intelligent systems get smarter.

It’s more than an effort to build new, more sophisticated algorithms. The Intelligence Advanced Research Projects Activity could actually save money by taking existing algorithms that were previously discarded and subjecting them to more rigorous training.

Nextgov spoke with Jacob Vogelstein, a program manager at IARPA who specializes in applied neuroscience, about the program. This conversation has been edited for length and clarity.

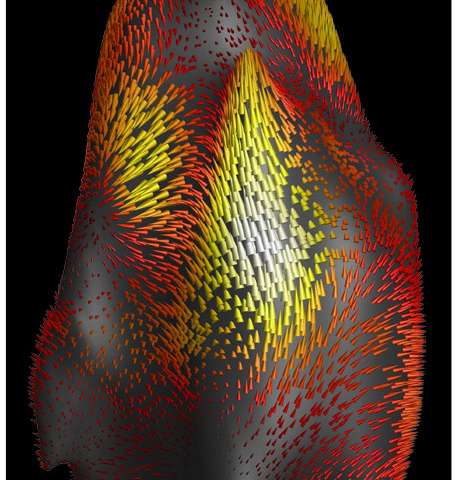

Brown University engineers have developed a new technique to help researchers understand how cells move through complex tissues in the body. They hope the tool will be useful in understanding all kinds of cell movements, from how cancer cells migrate to how immune cells make their way to infection sites.

The technique is described in a paper published in the Proceedings of the National Academy of Sciences.

The traditional method for studying cell movement is called traction force microscopy (TFM). Scientists take images of cells as they move along 2-D surfaces or through 3-D gels that are designed as stand-ins for actual body tissue. By measuring the extent to which cells displace the 2-D surface or the 3-D gel as they move, researchers can calculate the forces generated by the cell. The problem is that in order to do the calculations, the stiffness and other mechanical properties of the artificial tissue environment must be known.
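To see why the stiffness has to be known, here is a deliberately oversimplified sketch. It treats the gel as a single linear spring (Hooke's law), which is an assumption made purely for illustration; real TFM analysis relies on full elasticity models, and the function name and numbers below are hypothetical.

```python
def traction_force(stiffness_n_per_m, displacement_m):
    """Toy estimate: force = stiffness * displacement (linear spring assumption)."""
    return stiffness_n_per_m * displacement_m

# The same measured displacement implies different forces
# depending on the stiffness assumed for the gel.
displacement = 1e-6  # 1 micrometer, hypothetical measurement
for stiffness in (1e-3, 2e-3, 4e-3):  # N/m, hypothetical gel stiffnesses
    force = traction_force(stiffness, displacement)
    print(f"stiffness={stiffness} N/m -> force={force:.2e} N")
```

Without an independent value for the stiffness, the measured displacement alone cannot be turned into a force.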

A team of Stanford researchers has developed a novel means of teaching artificial intelligence systems how to predict a human’s response to their actions. They’ve given their knowledge base, dubbed Augur, access to online writing community Wattpad and its archive of more than 600,000 stories. This information will enable support vector machines (basically, learning algorithms) to better predict what people do in the face of various stimuli.

“Over many millions of words, these mundane patterns [of people’s reactions] are far more common than their dramatic counterparts,” the team wrote in their study. “Characters in modern fiction turn on the lights after entering rooms; they react to compliments by blushing; they do not answer their phones when they are in meetings.”

In its initial field tests, using an Augur-powered wearable camera, the system correctly identified objects and people 91 percent of the time. It correctly predicted their next move 71 percent of the time.

K-Glass, smart glasses reinforced with augmented reality (AR) that were first developed by the Korea Advanced Institute of Science and Technology (KAIST) in 2014, with the second version released in 2015, is back with an even stronger model. The latest version, which KAIST researchers are calling K-Glass 3, allows users to text a message or type in key words for Internet surfing by offering a virtual keyboard for text and even one for a piano.

Currently, most wearable head-mounted displays (HMDs) suffer from a lack of rich user interfaces, short battery lives, and heavy weight. Some HMDs, such as Google Glass, use a touch panel and voice commands as an interface, but they are considered merely an extension of smartphones and are not optimized for wearable smart glasses. Recently, gaze recognition was proposed for HMDs including K-Glass 2, but gaze is insufficient to realize a natural user interface (UI) and experience (UX), such as user’s gesture recognition, due to its limited interactivity and lengthy gaze-calibration time, which can be up to several minutes.

As a solution, Professor Hoi-Jun Yoo and his team from the Electrical Engineering Department recently developed K-Glass 3 with a low-power natural UI and UX processor to enable convenient typing and screen pointing on HMDs with just bare hands. This processor is composed of a pre-processing core to implement stereo vision, seven deep-learning cores to accelerate real-time scene recognition within 33 milliseconds, and one rendering engine for the display.

Very nice.

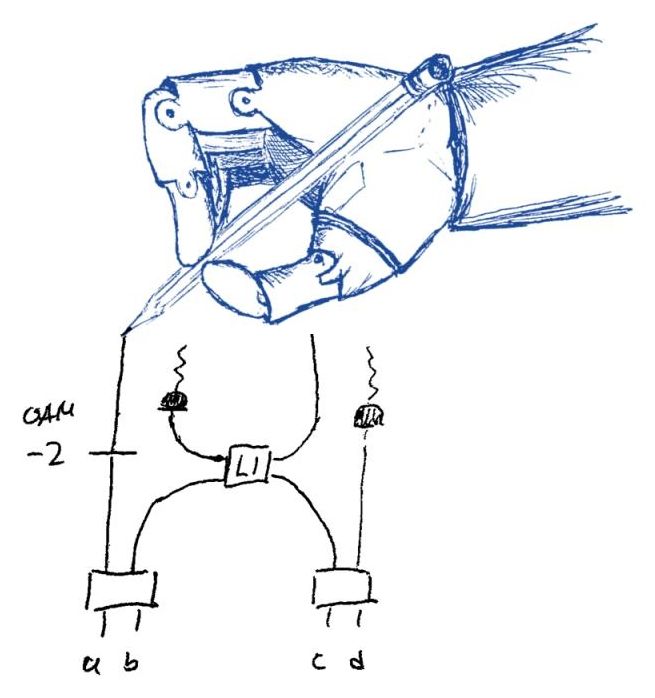

Quantum physicist Mario Krenn and his colleagues in the group of Anton Zeilinger from the Faculty of Physics at the University of Vienna and the Austrian Academy of Sciences have developed an algorithm that designs new, useful quantum experiments. As the computer does not rely on human intuition, it finds novel, unfamiliar solutions. The research has just been published in the journal Physical Review Letters. The idea was developed when the physicists wanted to create new quantum states in the laboratory, but were unable to conceive of methods to do so. “After many unsuccessful attempts to come up with an experimental implementation, we came to the conclusion that our intuition about these phenomena seems to be wrong. We realized that in the end we were just trying random arrangements of quantum building blocks. And that is what a computer can do as well, but thousands of times faster”, explains Mario Krenn, PhD student in Anton Zeilinger’s group and first author of the research.

After a few hours of calculation, their algorithm, which they call Melvin, found a recipe for the problem they had been unable to solve, and its structure surprised them. Zeilinger says: “Suppose I want to build an experiment realizing a specific quantum state I am interested in. Then humans intuitively consider setups reflecting the symmetries of the state. Yet Melvin found out that the most simple realization can be asymmetric and therefore counterintuitive. A human would probably never come up with that solution.”

The physicists applied the idea to several other questions and got dozens of new and surprising answers. “The solutions are difficult to understand, but we were able to extract some new experimental tricks we have not thought of before. Some of these computer-designed experiments are being built at the moment in our laboratories”, says Krenn.
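The idea of “trying random arrangements of building blocks” can be illustrated with a toy sketch. This is not Melvin's actual algorithm: the blocks here are hypothetical integer operations standing in for optical elements, and the goal is simply to turn one number into another. It only shows the general shape of a random search over setups.

```python
import random

# Hypothetical "building blocks": simple integer operations
# standing in for experimental components.
BLOCKS = {
    "add3": lambda x: x + 3,
    "double": lambda x: x * 2,
    "negate": lambda x: -x,
    "sub1": lambda x: x - 1,
}

def run(setup, x):
    """Apply each block of the setup, in order, to the starting value."""
    for name in setup:
        x = BLOCKS[name](x)
    return x

def random_search(start, goal, max_len=5, tries=100_000, seed=0):
    """Try random arrangements of blocks until one maps start to goal."""
    rng = random.Random(seed)
    names = sorted(BLOCKS)
    for attempt in range(1, tries + 1):
        length = rng.randint(1, max_len)
        setup = [rng.choice(names) for _ in range(length)]
        if run(setup, start) == goal:
            return setup, attempt
    return None, tries

setup, attempts = random_search(start=1, goal=13)
print(f"found {setup} after {attempts} random tries")
```

The search has no notion of symmetry or elegance, so the arrangement it returns may look nothing like what a person would design by hand, which is the point Zeilinger makes about Melvin's solutions.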

Interesting read; however, the author has limited his view to Quantum being only a computing solution when in fact it is much more. Quantum technology does offer faster processing power & better security, but Quantum also offers us Q-Dots, which enable us to enrich medicines & other treatments and improve raw materials, including fuels and even vegetation.

For the first time we have a science that cuts across all areas of technology: medicine & biology, chemistry, manufacturing, etc. No other science has been able to achieve this like Quantum.

Also, the author’s statements about Quantum being years off have some truth: if we’re suggesting 7 years, then I agree. However, I don’t agree that it is more than 7 years off, especially given the results we are seeing in Quantum Networking.

Not sure of the author’s own involvement in any of the Quantum Technology or Q-Dot experiments; however, I do suggest that he look at Quantum through a broader lens, because there is a larger story around Quantum, especially in the longer term as we look to improve things like BMI, AI, longevity, resistant materials for space, etc.

I recently read Seth Lloyd’s A Turing Test for Free Will, conveniently related to the subject of the blog’s last piece, and absolutely engrossing. It’s short, yet it adds a wonderful nuance to the debate over determinism, arguing that predictable functions can still have unpredictable outcomes, known as “free will functions.”

I had thought that the world only needed more funding, organized effort, and goodwill to solve the biggest threats facing all of humanity, from molecular interactions in fatal diseases to accessible, accurate weather prediction for farmers. But therein lies the rub: to be able to tackle large-scale problems, we must be able to analyze all the associated data points to find meaningful recourse in our efforts. Call it Silicon Valley marketing, but data analysis is important, and fast ways of understanding that data could be the key to faster solution implementation.

Classical computers can’t solve most of these complex problems in a reasonable amount of time: the time it takes for algorithms to finish increases exponentially with the size of the dataset, and approximations can run amok.
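A small sketch makes the exponential growth concrete. The example below is a hypothetical stand-in for the kind of problem meant here: a brute-force search that checks every subset of a list for a target sum, so each extra item doubles the work.

```python
from itertools import combinations

def count_subsets_checked(values, target):
    """Brute force: test every subset of `values` for a sum equal to `target`.

    Returns (subsets checked, subsets that hit the target).
    """
    checked = 0
    hits = 0
    for size in range(len(values) + 1):
        for subset in combinations(values, size):
            checked += 1
            if sum(subset) == target:
                hits += 1
    return checked, hits

# The number of subsets checked is 2**n for n items.
for n in (4, 8, 16):
    checked, _ = count_subsets_checked(list(range(1, n + 1)), target=n)
    print(f"n={n:2d} items -> {checked} subsets checked")
```

Going from 16 items to 60 would already mean more than a quintillion subsets, which is why brute force stops being an option long before the datasets get large.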

“Online abuse can be cruel – but for some tech companies it is an existential threat. Can giants such as Facebook use behavioural psychology and persuasive design to tame the trolls?”

Whether in the brain or in code, neural networks are shaping up to be one of the most critical areas of research in both neuroscience and computer science. An increasing amount of attention, funding, and development has been pushed toward technologies that mimic the brain in both hardware and software to create more efficient, high performance systems capable of advanced, fast learning.

One aspect of all the efforts toward more scalable, efficient, and practical neural networks and deep learning frameworks we have been tracking here at The Next Platform is how such systems might be implemented in research and enterprise over the next ten years. One of the missing elements for such eventual end users, at least based on the conversations that make their way into various pieces here, is reducing the complexity of the training process for neural networks to make them more practically useful, without all of the computational overhead and specialized systems that training requires now. Crucial, then, is a whittling down of how neural networks are trained and implemented. And not surprisingly, the key answers lie in the brain, and specifically in functions of the brain, and how it “trains” its own network, that are still not completely understood, even by top neuroscientists.

In many senses, neural networks, cognitive hardware and software, and advances in new chip architectures are shaping up to be the next important platform. But there are still some fundamental gaps in knowledge about our own brains versus what has been developed in software to mimic them that are holding research at bay. Accordingly, the Intelligence Advanced Research Projects Activity (IARPA) in the U.S. is getting behind an effort spearheaded by Tai Sing Lee, a computer science professor at Carnegie Mellon University’s Center for the Neural Basis of Cognition, and researchers at Johns Hopkins University, among others, to make new connections between the brain’s neural function and how those same processes might map to neural networks and other computational frameworks. The project is called Machine Intelligence from Cortical Networks (MICRONS).

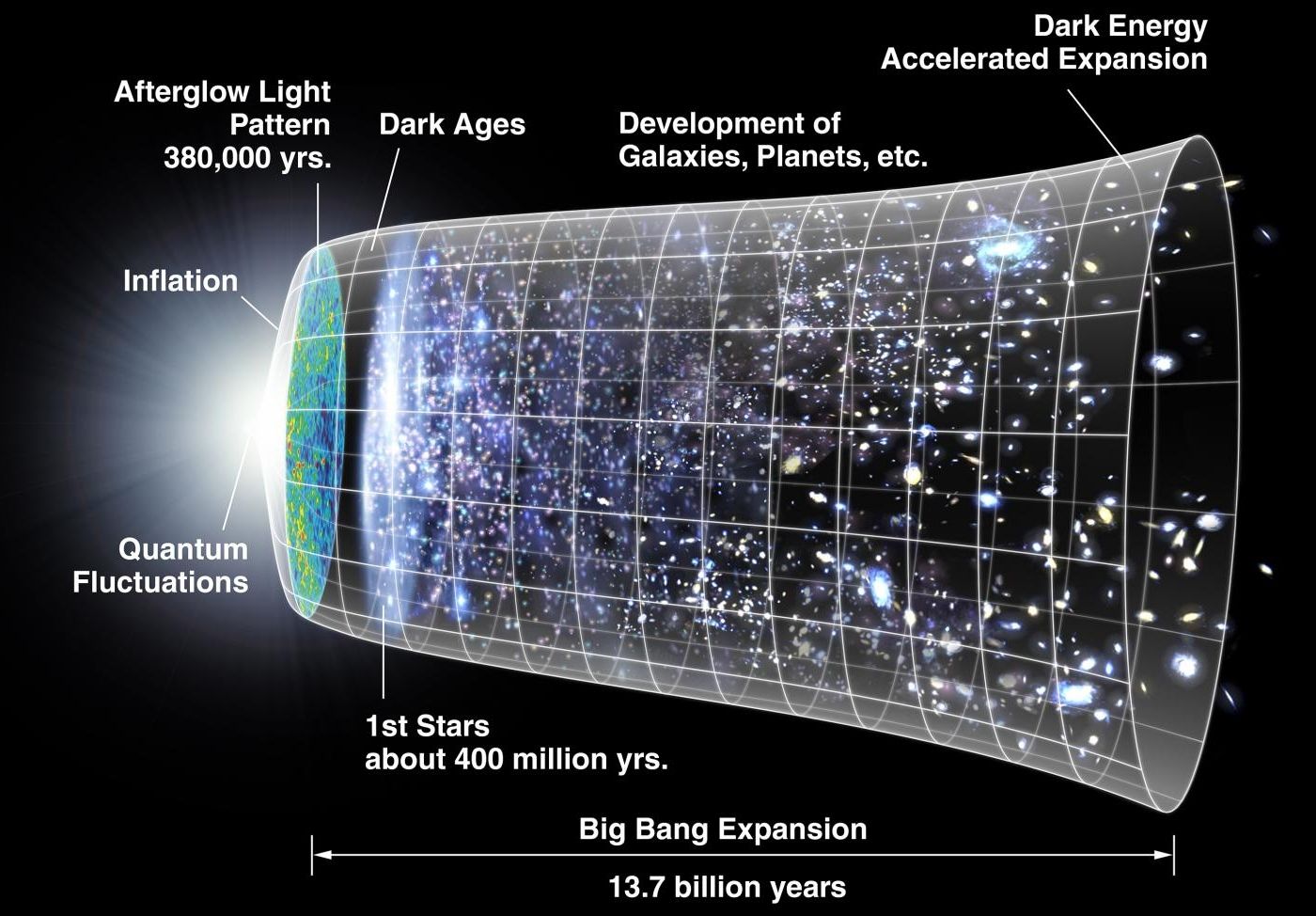

A new equation points to no “Big Bang”, no beginning, and no singularity.

(Phys.org) —The universe may have existed forever, according to a new model that applies quantum correction terms to complement Einstein’s theory of general relativity. The model may also account for dark matter and dark energy, resolving multiple problems at once.

The widely accepted age of the universe, as estimated by general relativity, is 13.8 billion years. In the beginning, everything in existence is thought to have occupied a single infinitely dense point, or singularity. Only after this point began to expand in a “Big Bang” did the universe officially begin.

Although the Big Bang singularity arises directly and unavoidably from the mathematics of general relativity, some scientists see it as problematic because the math can explain only what happened immediately after—not at or before—the singularity.