In 1610, Galileo redesigned the telescope and discovered Jupiter’s four largest moons. Nearly 400 years later, NASA’s Hubble Space Telescope used its powerful optics to look deep into space—enabling scientists to pin down the age of the universe.

Suffice it to say that getting a better look at things produces major scientific advances.

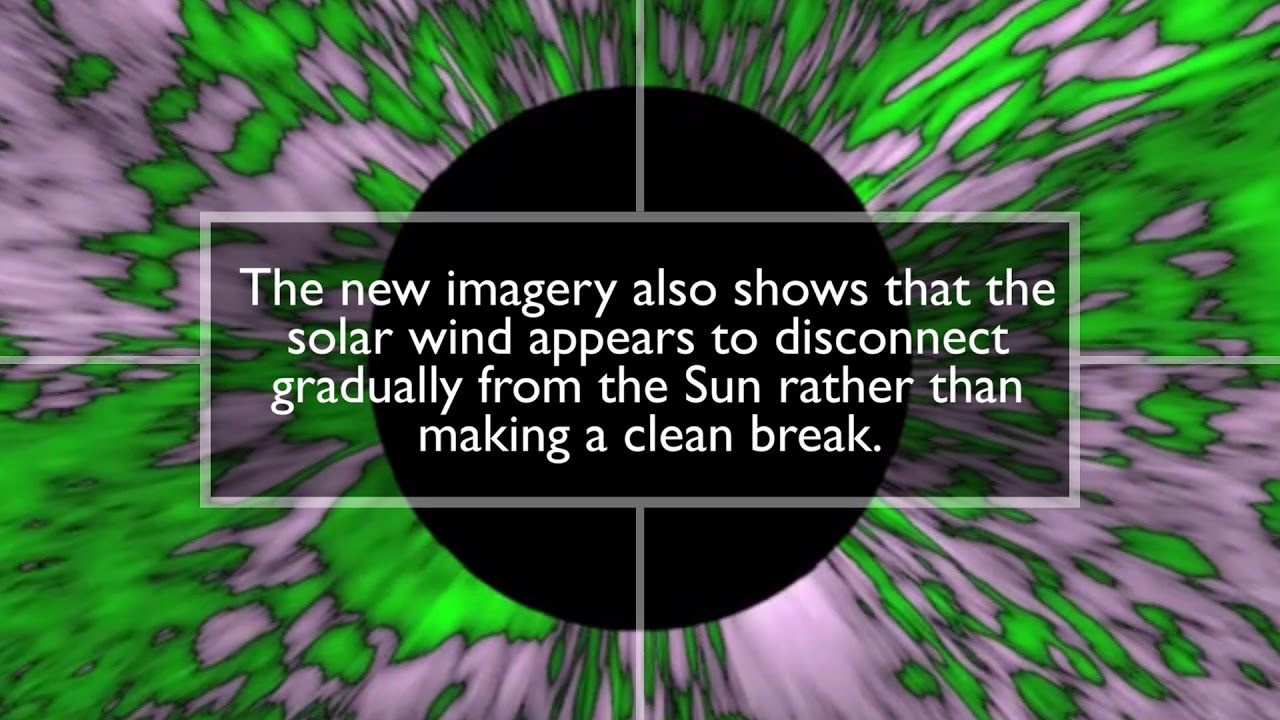

In a paper published on July 18 in The Astrophysical Journal, a team of scientists led by Craig DeForest, a solar physicist at Southwest Research Institute’s branch in Boulder, Colorado, demonstrates that this historical trend still holds. Using advanced algorithms and data-cleaning techniques, the team discovered never-before-detected, fine-grained structures in the outer corona, the Sun’s million-degree atmosphere, by analyzing images taken by NASA’s STEREO spacecraft. The new results also foreshadow what might be seen by NASA’s Parker Solar Probe, which after its launch in the summer of 2018 will orbit directly through that region.