George Church is pushing the boundaries of science!

Harvard biologist George Church wants to reverse aging, reanimate a mammoth, and build an entire human genome from scratch. What makes him tick?

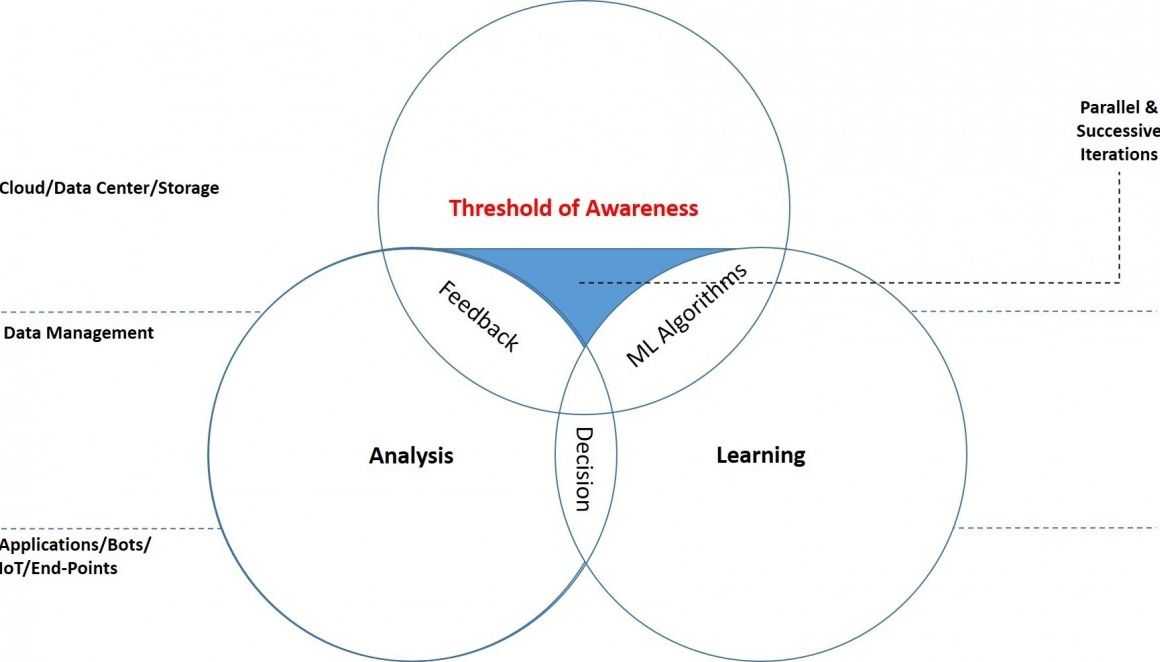

What troubles me about this article is that it leaves out how the underlying technology will need to change for AI to truly intertwine with humans. AI running on today's infrastructure and digital technology, without the support of brain-machine interfaces (BMI), microbots, and similar advances, will not carry us to the Singularity on its own. The existing digital landscape has to change as well.

As artificial intelligence continues to evolve, the future of AI is becoming increasingly difficult for humans to perceive and comprehend.

(Source: Jimmy Pike & Christopher Wilder ©Moor Insights & Strategy)

Microsoft and Facebook recently announced how they plan to drive leadership in the digital transformation and cloud market: bots. As more companies—especially application vendors—begin to drive solutions that incorporate machine learning, natural language, artificial intelligence, and structured and unstructured data, bots will increase in relevance and value. Google’s Cloud Platform (GCP) and Microsoft see considerable opportunities to enable vendors and developers to build solutions that can see, hear and learn as well as applications that can recognize and learn from a user’s intent, emotions, face, language and voice.

Bots are not new, but they have evolved alongside advances in artificial intelligence (AI), machine learning, ubiquitous connectivity and data processing speeds. The first commercial bots were developed in the mid-1990s. For example, I was on the ground floor at ichat, one of the first commercial chat providers. As with many disruptive media technologies, ichat's early adopters came from the darker side of the entertainment industry. These companies saw a way to keep website visitors online longer by giving them someone to interact with at 3 AM. Chatbots were developed out of this necessity. Thankfully, the technology has evolved: bots are now mainstream and are used to help people perform simple tasks and interact with service providers.

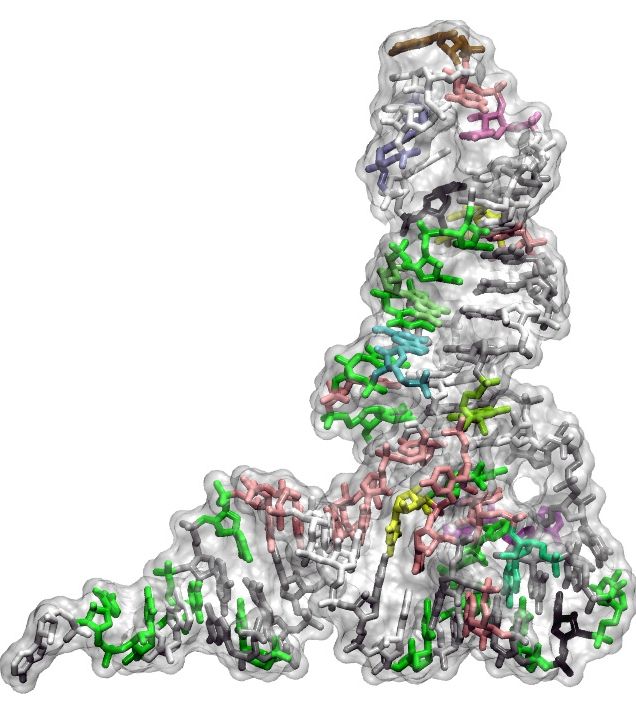

A study performed at IRB Barcelona offers an explanation for why the genetic code stopped growing 3 billion years ago. The researchers attribute this to the structure of transfer RNAs, the key molecules in the translation of genes into proteins. The genetic code is limited to 20 amino acids, the building blocks of proteins. This is the maximum number that avoids systematic mutations, which are fatal for life. The discovery could have applications in synthetic biology.

Nature is constantly evolving, its limits determined only by variations that threaten the viability of species. Research into the origin and expansion of the genetic code is fundamental to explaining the evolution of life. In Science Advances, a team of biologists specialised in this field explains a limitation that put the brakes on the further development of the genetic code. The genetic code is the universal set of rules that all organisms on Earth use to translate the nucleic acid sequences of genes (DNA and RNA) into the amino acid sequences of the proteins that carry out cell functions.

Led by ICREA researcher Lluís Ribas de Pouplana at the Institute for Research in Biomedicine (IRB Barcelona), in collaboration with Fyodor A. Kondrashov at the Centre for Genomic Regulation (CRG) and Modesto Orozco of IRB Barcelona, the team has demonstrated that the genetic code evolved to include a maximum of 20 amino acids. It was unable to grow further because of a functional limitation of transfer RNAs, the molecules that act as interpreters between the language of genes and that of proteins. This halt in the growing complexity of life happened more than 3 billion years ago, before bacteria, eukaryotes and archaebacteria began evolving separately, since all organisms use the same code to produce proteins from genetic information.

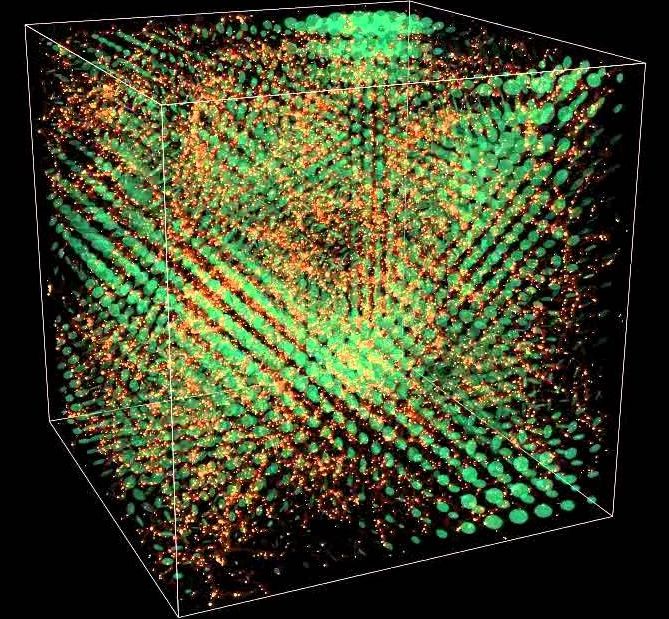

Quicker time to discovery. That’s what scientists working in quantum chemistry are looking for. According to Bert de Jong, Computational Chemistry, Materials and Climate Group Lead, Computational Research Division, Lawrence Berkeley National Lab (LBNL), “I’m a computational chemist working extensively with experimentalists doing interdisciplinary research. To shorten time to scientific discovery, I need to be able to run simulations at near-real-time, or at least overnight, to drive or guide the next experiments.” To meet scientists' research needs both today and in the future, the HPC software used in quantum chemistry must change so that it can take advantage of advanced HPC systems.

NWChem is a widely used open source computational chemistry package that includes both quantum chemical and molecular dynamics functionality. The NWChem project started around the mid-1990s, and the code was designed from the beginning to take advantage of parallel computer systems. NWChem is actively developed by a consortium of developers and maintained by the Environmental Molecular Sciences Laboratory (EMSL) at the Pacific Northwest National Laboratory (PNNL) in Washington State. NWChem aims to provide computational chemistry tools that scale in two ways: they treat large computational chemistry problems efficiently, and they make efficient use of the available parallel computing resources, from high-performance parallel supercomputers to conventional workstation clusters.

“Rapid evolution of the computational hardware also requires significant effort geared toward the modernization of the code to meet current research needs,” states Karol Kowalski, Capability Lead for NWChem Development at PNNL.

Another amazing female pioneer in STEM, and a NASA chief astronomer to boot!

A former chief astronomer at NASA will discuss the evolution of the universe from the Big Bang to black holes during a lecture on Thursday, March 24.

The lecture opens the 19th Annual Dick Smyser Community Lecture Series and will feature Nancy Grace Roman, former chief of the NASA Astronomy and Relativity Programs in the Office of Space Science.

PlayStation has been around for 20 years, and its graphics have always been on the cutting edge. Join Gameranx on a trip down memory lane and watch the progression from innovation to powerhouse!


An interstellar precursor mission has been discussed as a priority for science for over 30 years. It would improve our knowledge of the interstellar environment and address fundamental questions of astrophysics, from the origin of matter to the evolution of the Galaxy. A precursor mission would involve an initial exploration probe and aim to test technological capabilities for future large-scale missions. With this survey we intend to identify potential backers and gauge the public’s interest in such a mission.

This survey is conducted by the International Space University (www.isunet.edu) in collaboration with the Initiative for Interstellar Studies (www.I4IS.org). Your data will not be shared with any other organisation.