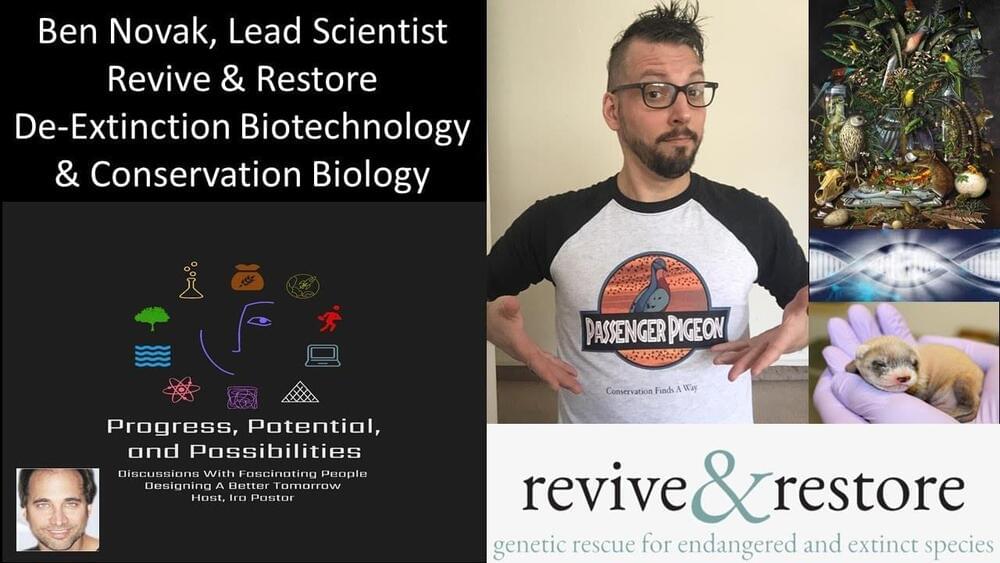

“De-Extinction” Biotechnology & Conservation Biology: Ben Novak, Lead Scientist, Revive & Restore

Ben Novak is Lead Scientist at Revive & Restore (https://reviverestore.org/), a California-based non-profit that works to bring biotechnology to conservation biology, with the mission of enhancing biodiversity through the genetic rescue of endangered and extinct animals (https://reviverestore.org/what-we-do/ted-talk/).

Ben collaboratively pioneers new tools for genetic rescue and de-extinction, helps shape the genetic rescue efforts of Revive & Restore, and leads its flagship project, The Great Passenger Pigeon Comeback, working with collaborators and partners to restore the ecology of the Passenger Pigeon to the eastern North American forests. Ben uses his training in ecology and ancient-DNA lab work to contribute, hands-on, to the sequencing of the extinct Passenger Pigeon genome and to study important aspects of its natural history (https://www.youtube.com/watch?v=pK2UlLsHkus&t=1s).

Ben’s mission in leading the Great Passenger Pigeon Comeback is to set the standard for de-extinction protocols and considerations in the lab and field. His 2018 review article, “De-extinction,” in the journal Genes, helped to define this new term. More recently, his treatment, “Building Ethical De-Extinction Programs—Considerations of Animal Welfare in Genetic Rescue,” was published in December 2019 in The Routledge Handbook of Animal Ethics: 1st Edition.

Ben’s work at Revive & Restore also includes extensive education and outreach, the co-convening of seminal workshops, and the development of the Avian and Black-footed Ferret Genetic Rescue programs included in the Revive & Restore Catalyst Science Fund.

Ben graduated from Montana State University, where he studied Ecology and Evolution. He later trained in Paleogenomics at the McMaster University Ancient DNA Centre in Ontario, where he began his study of passenger pigeon DNA. That study contributed to his Master’s thesis in Ecology and Evolutionary Biology at the University of California, Santa Cruz. This work also formed the foundational science for de-extinction.

Ben also worked at the Australian Animal Health Laboratory–CSIRO (Commonwealth Scientific and Industrial Research Organisation) to advance genetic engineering protocols for the pigeon.