AI squad mates. Called this a few years ago. It’s too annoying getting strangers to join up on some online task for a game.

Who wouldn’t want an A.I. to sit there and play backseat gamer? That’s exactly what looks to be happening thanks to a recently revealed Sony patent. The patent is for an automated Artificial Intelligence (A.I.) control mode specifically designed to perform certain tasks, including playing a game while the player is away.

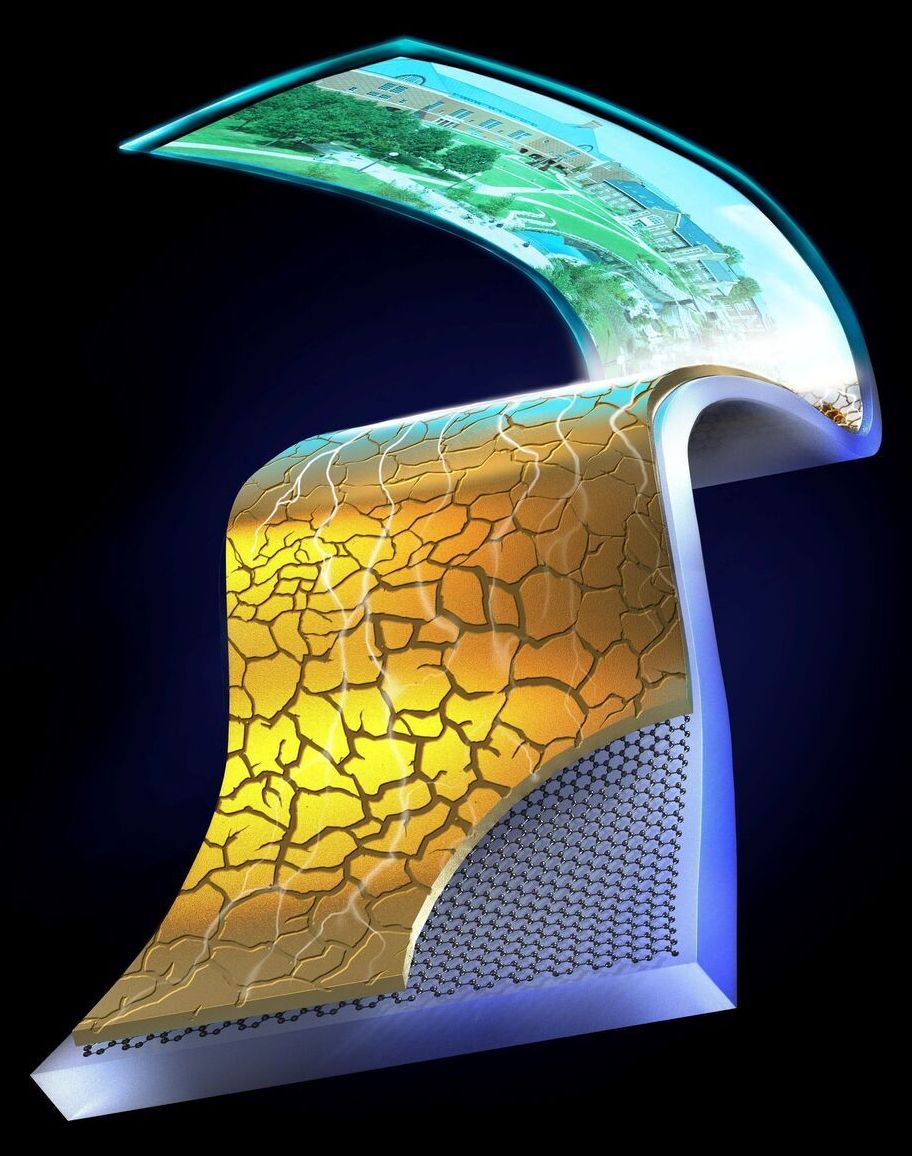

The patent, as spotted by SegmentNext, details how the A.I. starts by assigning a default gameplay profile to the user. That profile is then built into a compendium of information on the player’s gaming habits, play styles, and decision-making processes while sitting down for a new adventure. This knowledge can then be harnessed to simulate the player’s gaming habits, even when said gamer is away from their platform of choice.

“The method includes monitoring a plurality of game plays of the user playing a plurality of gaming applications,” reads the patent itself. “The method includes generating a user gameplay profile of the user by adjusting the default gameplay style based on the plurality of game plays, wherein the user gameplay profile includes a user gameplay style customized to the user. The method includes controlling an instance of a first gaming application based on the user gameplay style of the user gameplay profile.”
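
To make those three steps a little more concrete, here is a rough Python sketch of how a "start from a default style, adjust it from monitored play, then let it drive the game" loop could look. This is purely illustrative and not Sony's implementation: the trait names (`aggression`, `exploration`), the running-average update, the 0.6 thresholds, and the `GameplayProfile` and `choose_action` names are all assumptions made up for this example, since the quoted patent text specifies none of them.

```python
from dataclasses import dataclass, field

# Hypothetical style traits, each scored 0..1. The quoted patent text
# does not name any traits; these are placeholders for illustration.
DEFAULT_STYLE = {"aggression": 0.5, "exploration": 0.5}


@dataclass
class GameplayProfile:
    # Every new user starts from a copy of the default gameplay style.
    style: dict = field(default_factory=lambda: dict(DEFAULT_STYLE))
    sessions_seen: int = 0

    def observe(self, session_style: dict) -> None:
        """Adjust the style toward one monitored session.

        Assumption: a plain running average in which the default style
        counts as one sample. The patent does not state an update rule.
        """
        self.sessions_seen += 1
        weight = 1.0 / (self.sessions_seen + 1)
        for trait, observed in session_style.items():
            current = self.style.get(trait, 0.5)
            self.style[trait] = current + weight * (observed - current)


def choose_action(profile: GameplayProfile) -> str:
    """Pick what the automated mode does next, based on the learned style."""
    if profile.style["aggression"] >= 0.6:
        return "attack"
    if profile.style["exploration"] >= 0.6:
        return "explore"
    return "hold_position"


profile = GameplayProfile()
# One monitored session from a player who likes to charge in.
profile.observe({"aggression": 0.9, "exploration": 0.2})
print(choose_action(profile))  # attack
```

A real system would presumably learn from far richer signals across many games, but the shape is the same: a default that gets nudged toward what the player actually does, then used to make decisions while they are away.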