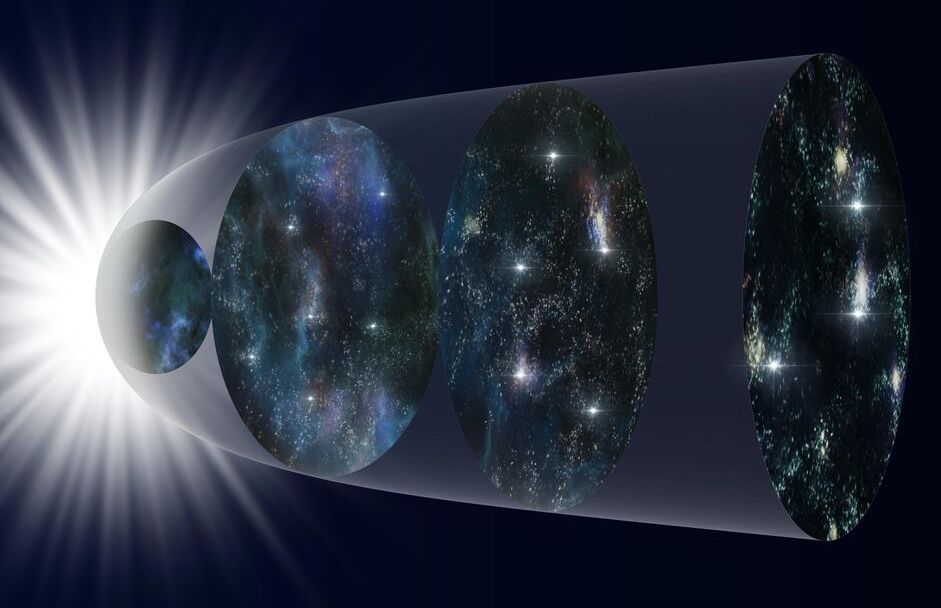

What does quark-gluon plasma—the hot soup of elementary particles formed a few microseconds after the Big Bang—have in common with tap water? Scientists say it’s the way it flows.

A new study, published today in the journal SciPost Physics, has highlighted the surprising similarities between quark-gluon plasma, thought to be the first matter to have filled the early Universe, and the water that comes out of our taps.

The ratio between a fluid's viscosity, the measure of how runny it is, and its density decides how it flows. Whilst both the viscosity and the density of quark-gluon plasma are about 16 orders of magnitude larger than those of water, the researchers found that the ratio between viscosity and density is the same for the two fluids. This suggests that one of the most exotic states of matter known to exist in our universe would flow out of your tap in much the same way as water.
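The comparison above can be written as a short worked relation. This is only a sketch: the symbols η (viscosity), ρ (density) and ν (their ratio, usually called the kinematic viscosity) are notation introduced here for illustration, and the factor of 10^16 is the article's round figure taken at face value, not a measured value from the study.

```latex
% Illustrative notation, not taken from the study:
%   \eta = viscosity, \rho = density, \nu = their ratio
% The factor 10^{16} is the article's approximate figure.
\[
  \nu = \frac{\eta}{\rho},
  \qquad
  \nu_{\mathrm{QGP}}
    = \frac{\eta_{\mathrm{QGP}}}{\rho_{\mathrm{QGP}}}
    \approx \frac{10^{16}\,\eta_{\mathrm{water}}}{10^{16}\,\rho_{\mathrm{water}}}
    = \nu_{\mathrm{water}}
\]
```

The common factor cancels, which is why two fluids with vastly different viscosities and densities can still flow in a similar way.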