Rarely does scientific software spark such sensational headlines. “One of biology’s biggest mysteries ‘largely solved’ by AI”, declared the BBC. Forbes called it “the most important achievement in AI — ever”. The buzz over the November 2020 debut of AlphaFold2, Google DeepMind’s artificial-intelligence (AI) system for predicting the 3D structure of proteins, has only intensified since the tool was made freely available in July 2021.

The excitement relates to the software’s potential to solve one of biology’s thorniest problems — predicting the functional, folded structure of a protein molecule from its linear amino-acid sequence, right down to the position of each atom in 3D space. The underlying physicochemical rules for how proteins form their 3D structures remain too complicated for humans to parse, so this ‘protein-folding problem’ has remained unsolved for decades.

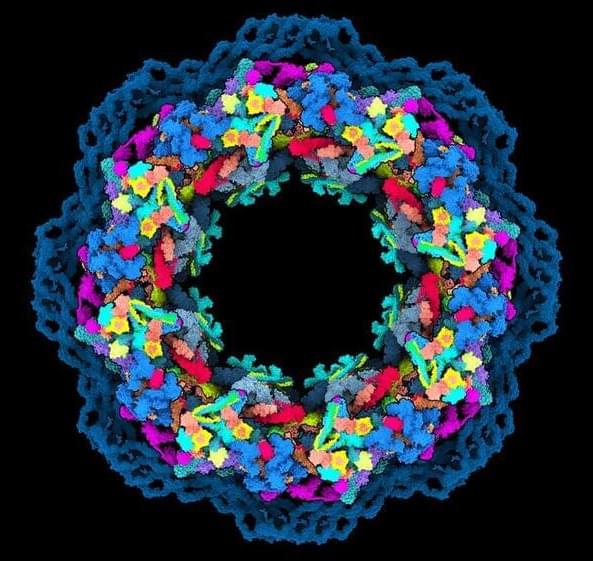

Researchers have worked out the structures of around 160,000 proteins from all kingdoms of life using experimental techniques such as X-ray crystallography and cryo-electron microscopy (cryo-EM), and have deposited the resulting 3D information in the Protein Data Bank. Computational biologists have made steady gains in developing software that complements these methods, and have correctly predicted the 3D shapes of some molecules from well-studied protein families.