Artificial intelligence is already helping determine your future – whether it’s your Netflix viewing preferences, your suitability for a mortgage or your compatibility with a prospective employer. But can we agree, at least for now, that having an AI determine your guilt or innocence in a court of law is a step too far?

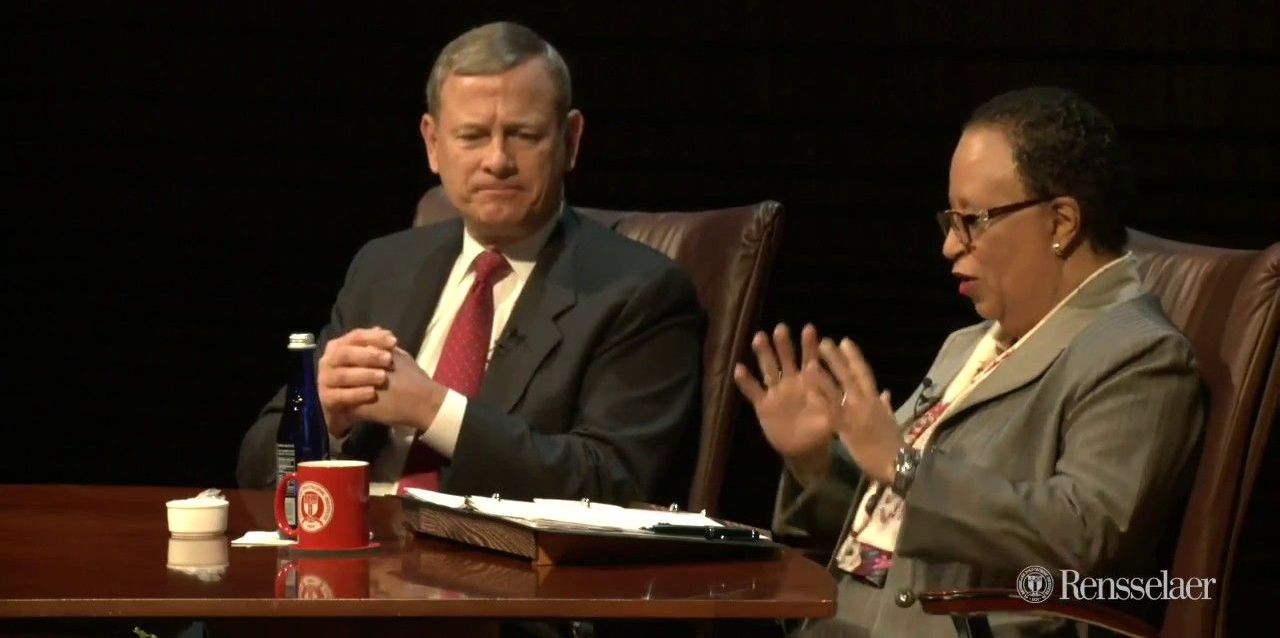

Worryingly, it seems this may already be happening. When US Chief Justice John Roberts recently attended an event, he was asked whether he could foresee a day “when smart machines, driven with artificial intelligences, will assist with courtroom fact finding or, more controversially even, judicial decision making”. He responded: “It’s a day that’s here and it’s putting a significant strain on how the judiciary goes about doing things”.

Roberts might have been referring to the recent case of Eric Loomis, who was sentenced to six years in prison at least in part on the recommendation of a private company’s secret proprietary software. Loomis, who has a criminal history and was sentenced for having fled the police in a stolen car, now asserts that his right to due process was violated, as neither he nor his representatives were able to scrutinise or challenge the algorithm behind the recommendation.