Hundreds of millions of years of evolution have produced a variety of life-forms, each intelligent in its own fashion. Each species has evolved to develop innate skills, learning capacities, and a physical form that ensures survival in its environment.

But despite being inspired by nature and evolution, the field of artificial intelligence has largely focused on creating the elements of intelligence separately and combining them after they have been developed. While this approach has yielded great results, it has also left AI agents inflexible in some of the basic skills found in even the simplest life-forms.

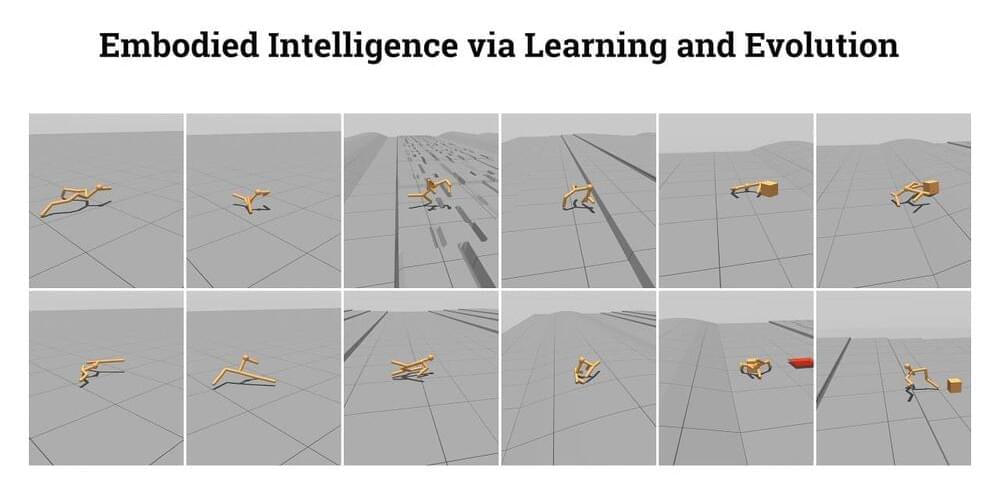

In a new paper published in the scientific journal Nature Communications, AI researchers at Stanford University present a technique that takes a step toward overcoming some of these limits. Called “deep evolutionary reinforcement learning,” or DERL, the technique uses a complex virtual environment and reinforcement learning to create virtual agents that evolve in both their physical structure and their learning capacities. The findings could have important implications for the future of AI and robotics research.