An outstanding idea, for two reasons: first, there is a video, TV show, or movie showing just about every conceivable action a human can perform; second, an AI could watch all of them at very high speed.

Predicting what someone is about to do next based on their body language comes naturally to humans, but not to computers. When we meet another person, they might greet us with a hello, a handshake, or even a fist bump. We may not know which gesture will be used, but we can read the situation and respond appropriately.

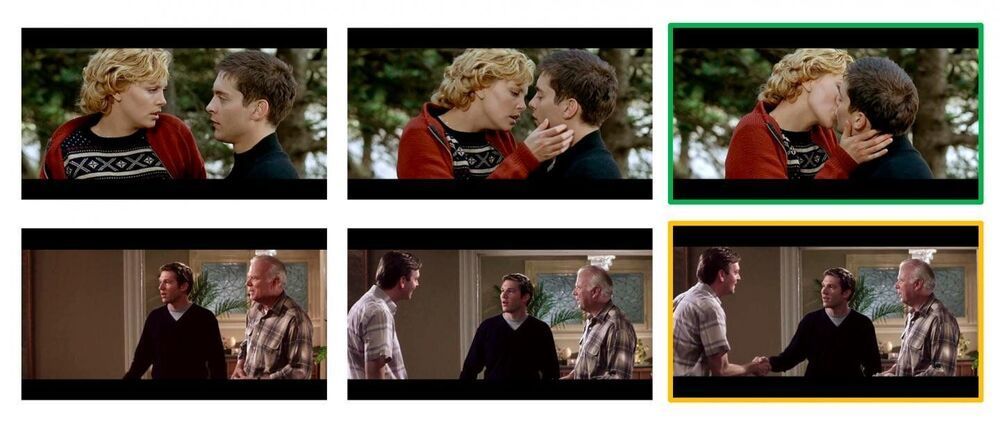

In a new study, Columbia Engineering researchers unveil a computer vision technique that gives machines a more intuitive sense of what will happen next by leveraging higher-level associations between people, animals, and objects.

“Our algorithm is a step toward machines being able to make better predictions about human behavior, and thus better coordinate their actions with ours,” said Carl Vondrick, assistant professor of computer science at Columbia, who directed the study, which was presented at the International Conference on Computer Vision and Pattern Recognition on June 24, 2021. “Our results open a number of possibilities for human-robot collaboration, autonomous vehicles, and assistive technology.”