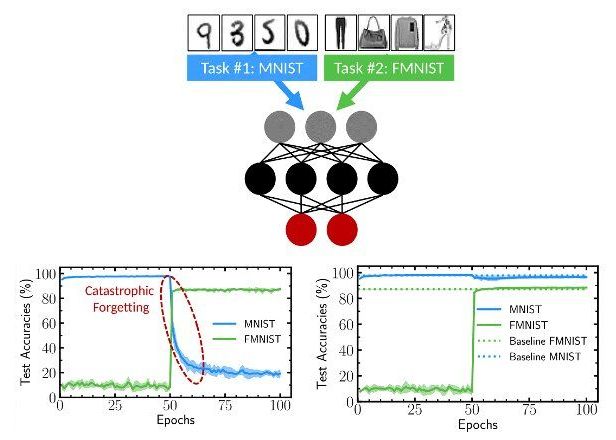

Deep neural networks have achieved highly promising results on several tasks, including image and text classification. Nonetheless, many of these computational methods are prone to what is known as catastrophic forgetting: when they are trained on a new task, they tend to rapidly forget how to perform the tasks they learned previously.

Researchers at Université Paris-Saclay-CNRS recently introduced a new technique to alleviate catastrophic forgetting in binarized neural networks. This technique, presented in a paper published in Nature Communications, is inspired by synaptic metaplasticity. This is the process through which the ability of synapses (junctions between two nerve cells) to change is itself modulated over time by their history of activity and experience.

“My group had been working on binarized neural networks for a few years,” Damien Querlioz, one of the researchers who carried out the study, told TechXplore. “These are a highly simplified form of deep neural networks, the flagship method of modern artificial intelligence, which can perform complex tasks with reduced memory requirements and energy consumption. In parallel, Axel, then a first-year Ph.D. student in our group, started to work on the synaptic metaplasticity models introduced in 2005 by Stefano Fusi.”