In recent years, videogame developers and computer scientists have been devising techniques to make gaming experiences increasingly immersive, engaging and realistic. These include methods to automatically create videogame characters inspired by real people.

Most existing methods to create and customize videogame characters require players to adjust the features of their character’s face manually in order to recreate their own face or the faces of other people. More recently, some developers have explored methods that automatically customize a character’s face by analyzing images of real people’s faces. However, these methods are not always effective and do not always reproduce the faces they analyze realistically.

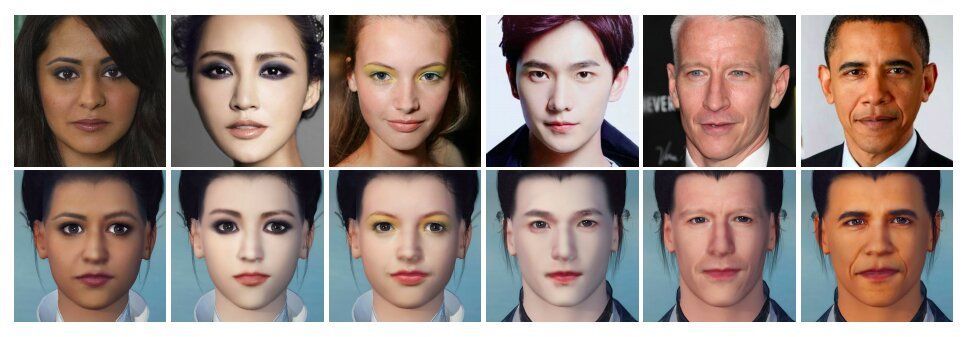

Researchers at NetEase Fuxi AI Lab and the University of Michigan have recently created MeInGame, a deep learning technique that can automatically generate character faces by analyzing a single portrait of a person’s face. This technique, presented in a paper pre-published on arXiv, can be easily integrated into most existing 3D videogames.