Brain-computer interfaces (BCIs) are tools that connect the human brain to an electronic device, typically using electroencephalography (EEG). In recent years, advances in machine learning (ML) have enabled the development of more capable BCI spellers, devices that allow people to communicate with computers using their thoughts.

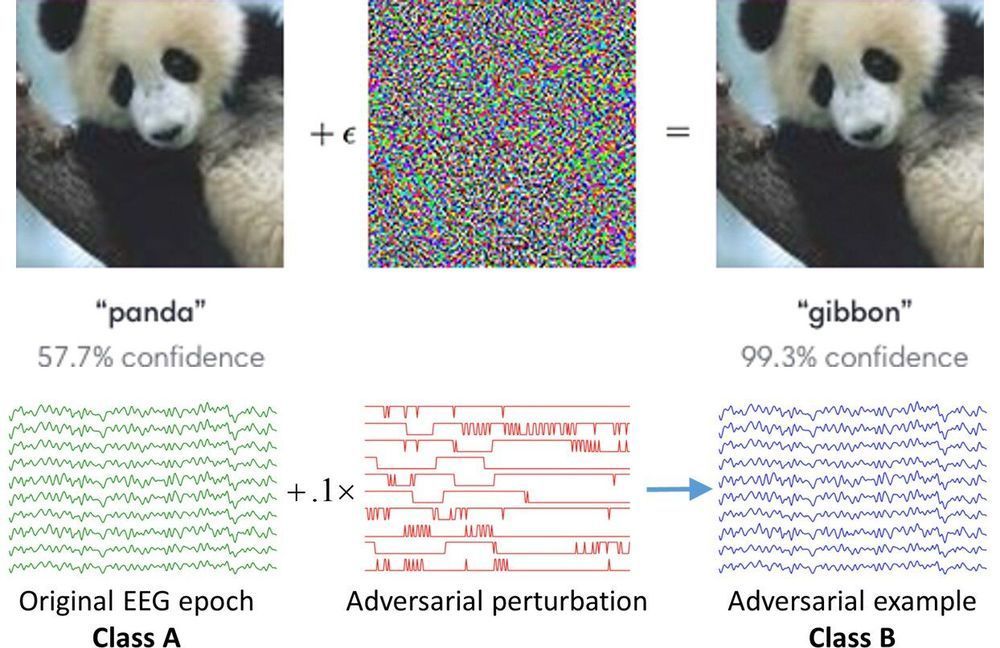

So far, most studies in this area have focused on developing BCI classifiers that are faster and more reliable, rather than investigating their possible security vulnerabilities. Recent research, however, suggests that machine learning algorithms can sometimes be fooled by attackers, whether they are used in computer vision, speech recognition, or other domains. This is often done using adversarial examples: inputs altered by perturbations so small that humans cannot perceive them, yet which cause the model to produce a wrong output.
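To make the idea of an adversarial perturbation concrete, here is a minimal sketch using a toy linear classifier. It is an illustration under stated assumptions, not the method used in the study: the weights, the input "signal", and the perturbation budget `epsilon` are all hypothetical values, and the perturbation follows the general sign-of-gradient idea (for a linear model, the gradient of the score with respect to the input is simply the weight vector).

```python
# Toy illustration only: all numbers below are hypothetical.

def score(weights, bias, x):
    """Linear decision score; a positive score means class 1."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def predict(weights, bias, x):
    """Return the predicted class (1 or 0) for input x."""
    return 1 if score(weights, bias, x) > 0 else 0

def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)

def perturb(weights, x, label, epsilon):
    """Shift each sample by at most epsilon, in the direction that pushes
    the score away from the currently predicted label."""
    direction = -1 if label == 1 else 1
    return [xi + direction * epsilon * sign(w) for w, xi in zip(weights, x)]

weights = [0.5, -0.3, 0.8, -0.6]   # hypothetical classifier weights
bias = 0.0
x = [1.0, 1.2, 0.9, 1.1]           # hypothetical clean input
epsilon = 0.1                      # hypothetical per-sample perturbation budget

clean_label = predict(weights, bias, x)
x_adv = perturb(weights, x, clean_label, epsilon)
adv_label = predict(weights, bias, x_adv)

print("clean prediction:", clean_label)
print("adversarial prediction:", adv_label)
print("largest change to any sample:", max(abs(a - b) for a, b in zip(x_adv, x)))
```

In this toy case, no sample changes by more than `epsilon`, yet the predicted class flips. Real EEG classifiers are far more complex, so this only conveys the intuition behind such attacks.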

Researchers at Huazhong University of Science and Technology have recently carried out a study investigating the security of EEG-based BCI spellers, and more specifically, how they are affected by adversarial perturbations. Their paper, pre-published on arXiv, suggests that BCI spellers can be fooled by these perturbations and are thus highly vulnerable to adversarial attacks.