Analog machine learning hardware offers a promising alternative to its digital counterparts as a faster and more energy-efficient platform. Wave physics, as found in acoustics and optics, is a natural candidate for building analog processors for time-varying signals. In a new report in Science Advances, Tyler W. Hughes and a research team in the departments of Applied Physics and Electrical Engineering at Stanford University, California, identified a mapping between the dynamics of wave physics and the computation in recurrent neural networks.

The mapping indicated that physical wave systems can be trained to learn complex features in temporal data using the standard training techniques of neural networks. As a proof of principle, the team demonstrated an inverse-designed, inhomogeneous medium that classified English vowels from raw audio signals as their waveforms scattered and propagated through it. The scientists achieved performance comparable to a standard digital implementation of a recurrent neural network. The findings could pave the way for a new class of analog machine learning platforms for fast and efficient processing of information in its native domain.

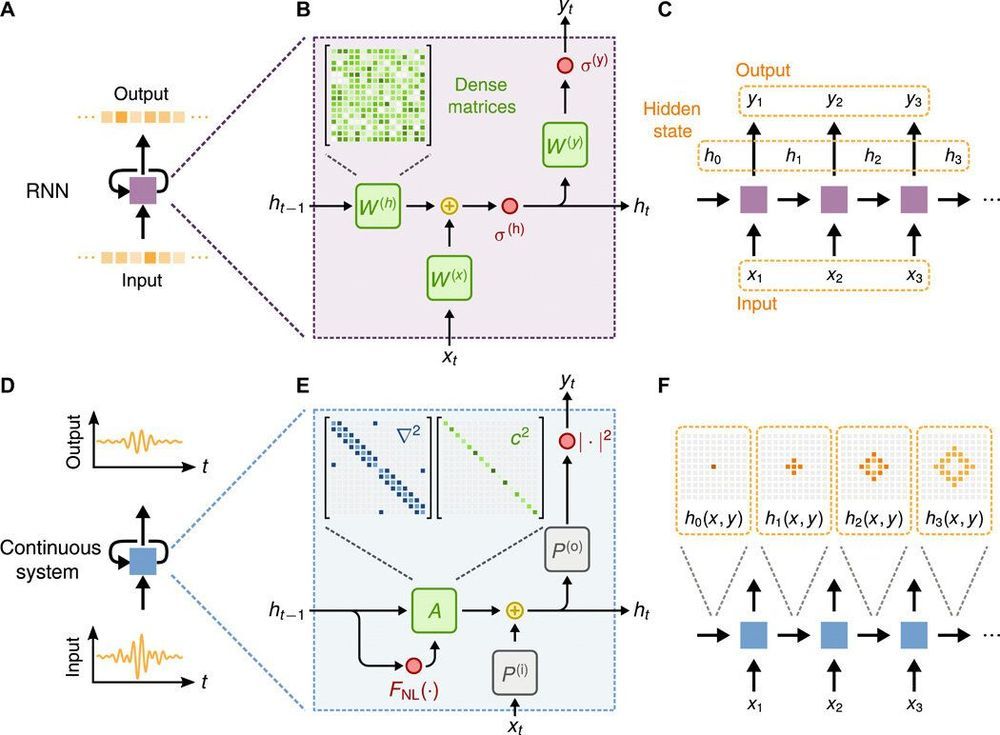

The recurrent neural network (RNN) is an important machine learning model widely used for tasks including natural language processing and time series prediction. The team trained wave-based physical systems to function as an RNN and passively process signals and information in their native domain, without analog-to-digital conversion. Such an approach could yield a substantial gain in speed and a reduction in power consumption. In the present framework, instead of circuits that deliberately route signals back to the input, the recurrence relationship occurred naturally in the time dynamics of the physics itself. The waves provided the memory capacity for information processing as they propagated through the device.
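To make the idea of recurrence arising from the time dynamics concrete, the following is a minimal sketch, not the authors' simulation. It assumes a one-dimensional, linear scalar wave equation discretized with a standard leapfrog finite-difference scheme and fixed (reflecting) boundaries. The function names, grid parameters, and source and probe positions are all hypothetical choices made for illustration. The pair of field snapshots at the current and previous time step plays the role of the RNN hidden state, the audio sample injected at the source is the input, and the spatial wave speed profile `c` stands in for the trainable weights.

```python
import numpy as np


def wave_rnn_step(u_now, u_prev, x_t, c, dt, dx, src_idx):
    """One leapfrog update of the 1D wave equation, read as one RNN step.

    Hidden state: (u_now, u_prev). Input: scalar sample x_t injected at
    src_idx. The wave speed profile c acts as the "weights" (assumption:
    linear medium, illustrative only).
    """
    # Discrete Laplacian on interior points; end points stay at zero.
    lap = np.zeros_like(u_now)
    lap[1:-1] = (u_now[2:] - 2.0 * u_now[1:-1] + u_now[:-2]) / dx**2

    # The next field depends on the two previous fields: this is the recurrence.
    u_next = 2.0 * u_now - u_prev + (c * dt) ** 2 * lap
    u_next[src_idx] += dt**2 * x_t  # inject the input signal at the source

    # Fixed boundaries (a simplification chosen for this sketch).
    u_next[0] = 0.0
    u_next[-1] = 0.0
    return u_next, u_now


def run_wave_rnn(x, c, dt, dx, src_idx, probe_idx):
    """Drive the medium with the sequence x and record power at a probe."""
    u_now = np.zeros_like(c, dtype=float)
    u_prev = np.zeros_like(c, dtype=float)
    power = np.zeros(len(x))
    for t, x_t in enumerate(x):
        u_now, u_prev = wave_rnn_step(u_now, u_prev, x_t, c, dt, dx, src_idx)
        power[t] = u_now[probe_idx] ** 2  # output: intensity at the probe
    return power


if __name__ == "__main__":
    x = np.zeros(200)
    x[0] = 1.0                      # a single input pulse
    c = np.ones(64)                 # uniform medium; training would reshape c
    p = run_wave_rnn(x, c, dt=0.5, dx=1.0, src_idx=10, probe_idx=30)
    print("total probe energy:", p.sum())
```

In this sketch no signal is ever routed back to the input: the field at each step is computed only from the two preceding fields and the current input sample, which is the same structure as an RNN hidden-state update. A trainable version would adjust `c` by gradient descent on a loss computed from the probe power, which would require an automatic differentiation framework and is not shown here.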